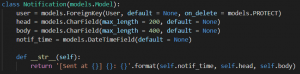

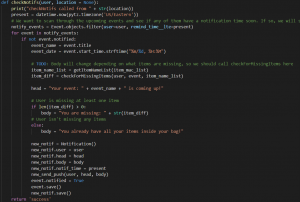

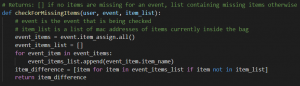

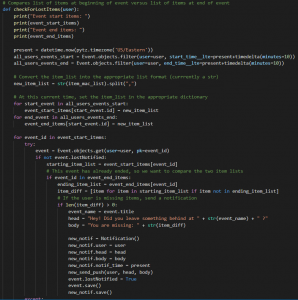

This week, I completed the features for missing item notifications (i.e., an event is coming up but at least one of the required items for the event is not currently in the backpack) as well as lost item notifications (i.e., the item list at the beginning of an event is not consistent with the item list at the end of an event). A demo of this feature can be found here [with no missing items] and [with missing items]. Two new functions were written for these new features, which can be viewed below:
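As a rough illustration of the two checks described above (a minimal sketch, not the actual functions from our codebase), both can be expressed as set differences. The function names and the representation of items as plain strings are assumptions made for this example, as is the reading of "lost" as present at the start of an event but absent at the end.

```python
def find_missing_items(required_items, backpack_items):
    """Return items an upcoming event requires that are not in the backpack.

    Hypothetical helper: items are assumed to be plain string names.
    """
    return sorted(set(required_items) - set(backpack_items))


def find_lost_items(items_at_start, items_at_end):
    """Return items present at the start of an event but gone by the end.

    Hypothetical helper: "lost" is assumed to mean present at the start
    and absent at the end; items added during the event are ignored.
    """
    return sorted(set(items_at_start) - set(items_at_end))


# A missing-item notification would fire when the first list is non-empty,
# and a lost-item notification when the second list is non-empty.
missing = find_missing_items(["laptop", "charger", "notebook"], ["laptop", "notebook"])
lost = find_lost_items(["laptop", "charger"], ["laptop"])
```

Here `missing` and `lost` are both `["charger"]`; an empty list in either case would mean no notification is needed.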

Additionally, since our team members are all working to finish the remainder of our project, I picked up the schedule learning task for our web application. So far, I’ve collected sample data of the kind this feature would need: large dictionaries with timestamps as keys and, as values, a snapshot of the items inside the user’s backpack at that timestamp. This data would be continually compared against the events stored in the database, and items would be automatically assigned to the user’s events based on it. However, this feature is not a requirement of our MVP, and a fully fleshed-out version would require persistence (thin native client + WebView) to be completed first. I’ve also started working with my teammates on our final presentation.
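One possible way to turn such snapshots into item suggestions is sketched below. This is a hypothetical approach, not something we have implemented: the function name, the use of `datetime` keys, and the rule of suggesting only items present in every snapshot taken during the event window are all assumptions made for illustration.

```python
from datetime import datetime


def suggest_items_for_event(snapshots, event_start, event_end):
    """Suggest items for an event from timestamped backpack snapshots.

    Hypothetical sketch: `snapshots` maps a datetime to the list of items
    in the backpack at that time. An item is suggested only if it appears
    in every snapshot taken between event_start and event_end (inclusive).
    """
    in_window = [
        set(items)
        for timestamp, items in snapshots.items()
        if event_start <= timestamp <= event_end
    ]
    if not in_window:
        return []  # no data recorded during this event
    return sorted(set.intersection(*in_window))


# Made-up sample data in the shape described above.
sample_snapshots = {
    datetime(2022, 4, 25, 9, 0): ["laptop", "charger", "notebook"],
    datetime(2022, 4, 25, 9, 30): ["laptop", "notebook"],
    datetime(2022, 4, 25, 14, 0): ["gym shoes"],
}
suggested = suggest_items_for_event(
    sample_snapshots,
    datetime(2022, 4, 25, 8, 45),
    datetime(2022, 4, 25, 10, 0),
)
```

With this sample data, `suggested` is `["laptop", "notebook"]`. A real version would likely aggregate over many occurrences of a recurring event and tolerate occasional omissions rather than requiring an item in every snapshot.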

Currently, we are a little behind schedule. The remaining components are:

- Persistence (implementation of thin native client in Android + WebView)

- Schedule Learning (not part of our MVP, but dependent on persistence)

- Integration with image recognition component (currently depending on Joon to set up an API endpoint for the model)

Save for the integration with the image recognition component, our MVP is already complete. However, implementing persistence and schedule learning would greatly increase the number of situations in which our product is useful.

To catch up to the project schedule, we’ll use this final week of development (week of 5/2) to complete the remaining components. Aaron and I will work on persistence, I will work on schedule learning, and Joon will work on setting up an API endpoint for the model. These are the deliverables we hope to complete in the next week.