This week, I presented our team’s project proposal to Section C and Professor Kim. Thanks to my group members’ support, the presentation went really well. From the feedback I received from classmates in Section C, I was delighted to hear that the presentation was clear and easy to understand. I am also looking forward to hearing feedback on the technical content of the presentation and discussing it with my teammates.

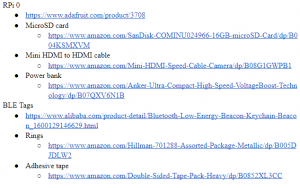

I did more research on computer vision and image recognition libraries and datasets within the scope of our project. I read the OpenCV documentation to get familiar with the library. Aside from OpenCV, which I found last week, I also found that TensorFlow is another library for image classification. The TensorFlow website also lists machine learning models for image recognition in smartphone applications (MobileNet, Inception, and NASNet), along with their accuracy and processing time (link: https://www.tensorflow.org/lite/guide/hosted_models). I read some papers related to MobileNet, and I hope to determine next week which models to train and build for our project. Based on Professor Kim’s comments this week, I developed a list of items that a student would carry in a backpack. Having this comprehensive list will help me decide which items to identify and classify when the student registers an item with the smartphone camera.

My team and I are currently on schedule.

Next week, I plan to finally decide on the modules and software libraries to use for camera identification so that I have a finalized image-processing pipeline. Since I am in Korea right now and my teammates are fully remote, we need to set up a version control system (Git) so that we can collaborate online. I also need to work on the Design Review document with my teammates next week.