This week we finished the final presentation, which Evann presented. We all worked on the final demo video: the script has been written and most of the footage we are using has been filmed. The final demo video takes place in the car, where the program's audio and alerts can be heard and assessed in real time. I also worked on the hardware setup, mounting the board on the car dashboard and positioning the camera at a high enough angle to capture footage of the driver. Lastly, I worked on the poster for the final demo, which is almost complete.

Progress: I am currently on schedule. This project is wrapping up nicely and we are almost complete.

In the upcoming days I hope to:

- Edit the final demo video

- Add the remaining details to the poster

- Run the additional tests we are all planning, so that the results can be included in our final report

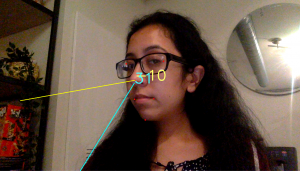

Once we had designed this, we coded up the navigation between pages and connected the UI to our existing calibration and main process code. Connecting the calibration took more work because that step requires real-time video, whereas the main process does not show the user any video and only sounds an alert when required. After we completed the UI, I started tweaking the calibration and classification code for the board. When we ran our code, a few small things were off, such as the red ellipse the user tries to align their face with. I adjusted the ellipse dimensions and also made them easier to change later: we can now simply edit the x, y, w, h constants without having to go through the code to understand the values. Once I completed this, I started adjusting our classification to fit the board. I also started working on the final presentation PowerPoint slides.
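Our calibration code is not reproduced in this post, so the following is only a minimal Python sketch of the "x, y, w, h constants" idea. It assumes the guide ellipse is described by a bounding box in pixels and converted to the center and semi-axes that OpenCV's `cv2.ellipse` takes. The constant names, their values, the `GuideEllipse` class, and the `contains` alignment check are all hypothetical and for illustration only.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical guide-ellipse constants (pixels), given as a bounding box.
# Resizing or moving the guide only requires changing these four values.
ELLIPSE_X = 220  # left edge of the bounding box
ELLIPSE_Y = 100  # top edge of the bounding box
ELLIPSE_W = 200  # bounding box width
ELLIPSE_H = 280  # bounding box height


@dataclass(frozen=True)
class GuideEllipse:
    x: int
    y: int
    w: int
    h: int

    @property
    def center(self) -> tuple[int, int]:
        # Center of the bounding box, as integer pixel coordinates.
        return (self.x + self.w // 2, self.y + self.h // 2)

    @property
    def axes(self) -> tuple[int, int]:
        # Semi-axes of the ellipse: half the box width and half the height.
        return (self.w // 2, self.h // 2)

    def contains(self, px: float, py: float) -> bool:
        # Hypothetical alignment check using the standard ellipse test:
        # ((px - cx) / a)^2 + ((py - cy) / b)^2 <= 1
        cx, cy = self.center
        a, b = self.axes
        return ((px - cx) / a) ** 2 + ((py - cy) / b) ** 2 <= 1.0


GUIDE = GuideEllipse(ELLIPSE_X, ELLIPSE_Y, ELLIPSE_W, ELLIPSE_H)

# With OpenCV, the guide could then be drawn in red (BGR order) on each frame:
# cv2.ellipse(frame, GUIDE.center, GUIDE.axes, 0, 0, 360, (0, 0, 255), 2)
```

Keeping the guide as a bounding box means the drawing code never hard-codes derived values; the center and axes are recomputed from the four constants, so a tweak to one constant cannot leave the drawn ellipse and any alignment check out of sync.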

Once we designed this. We simply coded up the connection between pages and connected the UI to our current calibration and main process code. We needed to work on connecting the calibration because this step required the real time video whereas for the main process we are not planning on showing the user any video. We only sound the alert if required. After we completed the UI, I started working on tweaks for the calibration and classification code for the board. When we ran our code some small things were off such as the drawn red ellipse the user is attempting to align their face with. For this I adjusted the red ellipse dimensions but also made it easier for us later on to simply change the x, y, w, h constants with out go through the code and understand values. Once I completed this, I started working on adjusting our classification to fit the board. I also started working on the final presentation Power Point slides.