- What did you personally accomplish this week on the project? This week we focused on fine-tuning our project. I tuned some thresholding variables so that our classification is slightly better. This was done under a single lighting condition, though, so I still need to test under different lighting conditions. After this, we built the case for our device. We used a Kleenex box and placed the Jetson board inside. I cut out the cardboard so that the device was usable and positioned the camera so that it is not too intrusive for the driver. Once we finished putting the device together, Evann and I tested the project to make sure everything was still working. Meanwhile, we also started writing a script for our video, including which shots we want with which voiceover. Evann and I also adjusted the screen direction so that the device fits well in the car. Once we completed this, we took our device out to the car to film clips for our video.

- Is your progress on schedule or behind? We are currently on track with our schedule.

- What deliverables do you hope to complete in the next week? Next week we should complete our Public Demo and work on our Final Report.

Team Status Report for 5/1

- Significant Risks: At this stage there are not many significant risks that could jeopardize our project. There are small issues, such as not being able to classify the eyes accurately enough. We are still working on improving this algorithm so that it handles different distances between the user and the camera and different lighting in the car.

- Changes to the Existing Design: No changes for this week

- Schedule: There are no changes to the schedule

- Short demo Video:

https://drive.google.com/file/d/1gHNVyxSBz6iphaPCnnBXD8wDqWc6w8aR/view?usp=sharing

This shows the program working on our touchscreen. Essentially, after calibration, there is a sound played (played on the headphones in this video) when the user has their eyes closed / mouth open for a longer period of time.

Jananni’s Status Report for 5/1

- Accomplished this week: This week I first worked on the UI with Adriana, then focused on tweaking the calibration and classification code for our device. For the UI, we first did some research into integrating a UI toolkit such as tkinter with our OpenCV code, but we realized this may be difficult. So we decided to make buttons by drawing a box and triggering an action when a click occurs inside the box. We then designed our very simple UI flow. I also spent some time working on a friendly logo for our device. This was what we designed. Our flow starts at the top left box with our CarMa logo, goes on to the Calibration step below, and then tells the user it is ready to begin.

Once we designed this, we simply coded up the connection between pages and connected the UI to our current calibration and main process code. We needed to work on connecting the calibration because this step requires the real-time video, whereas for the main process we are not planning on showing the user any video. We only sound the alert if required. After we completed the UI, I started working on tweaks to the calibration and classification code for the board. When we ran our code some small things were off, such as the drawn red ellipse the user is attempting to align their face with. For this I adjusted the red ellipse dimensions and also made it easier for us later on to simply change the x, y, w, h constants without having to go through the code and understand the values. Once I completed this, I started working on adjusting our classification to fit the board. I also started working on the final presentation PowerPoint slides.

- Progress: I am currently ahead of our most recent schedule. We planned to have the UI completed this week. We completed this early and started working on auxiliary items.

- Next week I hope to complete the final presentation and potentially work on the thresholding. I am wondering if this is the reason the classification of the eye direction is not great. We also need to complete a final demo video.
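The box-and-click buttons described above can be sketched roughly as follows. This is a minimal illustration, not our actual UI code: the `Button` type, the `dispatch_click` helper, the page names and the pixel coordinates are all hypothetical.

```python
from typing import Callable, List, NamedTuple, Optional


class Button(NamedTuple):
    """A rectangular on-screen button; (x, y) is the top-left corner in pixels."""
    label: str
    x: int
    y: int
    w: int
    h: int
    action: Callable[[], str]  # hypothetical: returns the name of the next page

    def contains(self, px: int, py: int) -> bool:
        # A click counts if it lands inside the box, edges included.
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h


def dispatch_click(buttons: List[Button], px: int, py: int) -> Optional[str]:
    """Run the action of the first button that contains the click, if any."""
    for button in buttons:
        if button.contains(px, py):
            return button.action()
    return None


# Hypothetical layout for one page of the flow (coordinates are made up).
buttons = [
    Button("Start", x=100, y=200, w=120, h=50, action=lambda: "calibration"),
    Button("Quit", x=300, y=200, w=120, h=50, action=lambda: "quit"),
]

print(dispatch_click(buttons, 150, 220))  # click inside the "Start" box
print(dispatch_click(buttons, 10, 10))    # click outside every box
```

With OpenCV, a handler registered through `cv2.setMouseCallback` receives `(event, x, y, flags, param)`, so it could call `dispatch_click` whenever `event` equals `cv2.EVENT_LBUTTONDOWN`. Keeping the hit test separate from OpenCV makes it easy to check without a display.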

Team Status Report for 4/24

- One risk that could potentially impact our project at this point is the buzzer integration. If there is an issue with this aspect of the project, we may need to figure out a new way to alert the user. This could potentially be addressed by showing the user an alert on the screen.

- No, this week there were no changes to the existing design of the system. For testing and verification, we more precisely defined what we meant by 75% accuracy against our test suite.

- This week we hope to complete the integration of the buzzer and the UI of our project. Next week we hope to create a video for the head turn thresholding to showcase the accuracy and how it changes as you turn your head. We may also need to work on pose detection and more test videos. Finally, we are going to be testing our application in a car to obtain real-world data.
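One way to read the 75% accuracy target is as a pooled figure: count every event and every error across all actions, then take a single percentage, as described in Jananni's report for this week. The sketch below assumes that reading; the function name and the counts are made up for illustration and are not our test results.

```python
from typing import Dict, Tuple


def pooled_accuracy(counts: Dict[str, Tuple[int, int]]) -> float:
    """counts maps an action name to (total events, error events)."""
    total = sum(t for t, _ in counts.values())
    errors = sum(e for _, e in counts.values())
    if total == 0:
        raise ValueError("no events recorded")
    # One ratio over all events, rather than an average of per-action rates.
    return 1.0 - errors / total


# Made-up counts, only to show the calculation.
counts = {
    "eyes_closed": (40, 6),
    "mouth_open": (10, 5),
    "eyes_off_road": (50, 9),
}

accuracy = pooled_accuracy(counts)
print("{:.0%} accurate, meets 75% target: {}".format(accuracy, accuracy >= 0.75))
```

Pooling weights each action by how many events it has, so an action with only a handful of events cannot dominate the result the way it can when per-action error rates are averaged.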

Jananni’s Status Report for 4/24

- This week I first worked on adding the classification that a driver is distracted if their eyes are pointed away from the road for over two seconds. To do this, I take the computed direction values of the eyes, and if the eyes are pointed up, down, left or right for over two seconds, I send an alert. After committing this code, I worked on changing our verification and testing document. I wanted to include the Prof’s recommendation about our testing to not take averages of error rates, because this implies we have a lot more precision than we actually have. Instead I recorded the total number of events that occurred and the number of error events. Then I took the total number of error events across all actions and computed the percentage. Now I am researching options for our simple UI so that we can integrate it with what we currently have.

- I am currently on track with our schedule. We wrote out a complete schedule this week for the final weeks of school, including the final report and additional testing videos.

- This week I hope to finish implementing the UI for our project with Adriana.
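The two-second rule for eyes off the road can be sketched as a small timer. This is an illustration under assumptions: the class name, the direction labels and the timestamps are hypothetical, and it assumes that switching between off-road directions (say, left then down) does not restart the timer.

```python
from typing import Optional

OFF_ROAD = {"up", "down", "left", "right"}  # assumed labels from the eye tracker
LIMIT_S = 2.0                               # "over two seconds"


class GazeTimer:
    """Tracks how long the gaze has stayed off the road."""

    def __init__(self, limit_s: float = LIMIT_S) -> None:
        self.limit_s = limit_s
        self.off_road_since: Optional[float] = None

    def update(self, direction: str, now: float) -> bool:
        """Return True when the gaze has been off the road for longer than limit_s."""
        if direction not in OFF_ROAD:
            # Eyes are back on the road, so reset the timer.
            self.off_road_since = None
            return False
        if self.off_road_since is None:
            self.off_road_since = now
        return now - self.off_road_since > self.limit_s


# Simulated (timestamp in seconds, direction) samples.
timer = GazeTimer()
samples = [(0.0, "center"), (1.0, "left"), (2.5, "down"), (3.1, "right"), (3.2, "center")]
alerts = [timer.update(direction, now) for now, direction in samples]
print(alerts)
```

In a live loop, `now` would come from a monotonic clock such as `time.monotonic()` so that the measurement does not depend on the frame rate.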

Jananni’s Status Report for 4/10

- This week I worked on finishing up integration with the main process of our project. Once that was completed, I worked on getting more videos for our test suite from various people with various face shapes. Finally, I started researching how to optimize our code for the GPU. This will ideally bring our frame rate above the 5 frames per second in our original requirement. I also worked on putting together material for our demo next week. Here are some important links I have been reading regarding optimizing our code specifically for the GPU: https://forums.developer.nvidia.com/t/how-to-increase-fps-in-nvidia-xavier/80507, and more. The article (similar to many others) talks about using jetson_clocks and changing the power management profiles. Changing the power management profile aims to maximize performance at the cost of higher energy usage. jetson_clocks is then used to set the CPU, GPU and EMC clocks to their maximum frequencies, which should ideally improve the frames per second in our testing.

- Currently as a team we are a little ahead of schedule, so we revised our weekly goals and I am on track for those updated goals. I am working with Adriana to improve our optimization.

- By next week, I plan to finish optimizing the code and start working on our stretch goal. After talking to the professor, we are deciding between working on the pose detection or lane change using the accelerometer.

Team Status Report for 4/10

- The most significant risk to our project is not meeting one of our main requirements of at least 5 frames per second. We are working on improving our current rate of 4 frames per second by having some OpenCV algorithms run on the GPU, in particular the dnn module, which detects where a face is in the image. If this optimization does not noticeably increase our performance, then we plan on using our back-up plan of moving some of the computation to AWS so that the board is faster.

- This week we have not made any major changes to our designs or block diagrams.

- In terms of our goals and milestones, we are a little ahead of our original plan. Because of this, we are deciding which stretch goals we should start working on and which of our stretch goals are feasible within the remaining time we have. We are currently deciding between pose detection or phone detection.
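To compare the measured rate against the 5 frames per second requirement, a rolling frame-rate estimate is enough. The sketch below is one simple way to do it; the `FpsMeter` name and the window size are assumptions, and the timestamps are simulated rather than measured on the board.

```python
import time
from collections import deque
from typing import Deque, Optional

REQUIRED_FPS = 5.0  # requirement stated in this report


class FpsMeter:
    """Rolling frames-per-second estimate over the last `window` frames."""

    def __init__(self, window: int = 30) -> None:
        self.stamps: Deque[float] = deque(maxlen=window)

    def tick(self, now: Optional[float] = None) -> float:
        """Record one processed frame and return the current estimate."""
        self.stamps.append(time.monotonic() if now is None else now)
        if len(self.stamps) < 2:
            return 0.0
        elapsed = self.stamps[-1] - self.stamps[0]
        # N timestamps span N - 1 frame intervals.
        return (len(self.stamps) - 1) / elapsed if elapsed > 0 else 0.0


# Simulated timestamps: one frame every 0.25 s.
meter = FpsMeter(window=5)
fps = 0.0
for i in range(5):
    fps = meter.tick(now=i * 0.25)
print("fps = {:.1f}, meets requirement: {}".format(fps, fps >= REQUIRED_FPS))
```

In the real loop, `meter.tick()` would be called once per processed frame with no argument, so the estimate reflects the whole pipeline and not just the face detector.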

Team Status Report for 4/3

- This week we focused on integrating with the board. We finalized our calibration and main software processes. We did more testing on how the lighting conditions affect our software and optimized our eye tracker by using the aspect ratio. One big risk that we are continuously looking out for is the speed of our program. On our laptops the computation speed is very quick but if it takes longer on the board our contingency plan is to use AWS to speed it up.

- For the board we are now using TensorFlow 2.4 and Python 3.6, which changes a few built-in functions. This is necessary for compatibility with the Nvidia Jetpack SDK we are using on the board. There are no costs for this; only minor changes in the code are necessary.

- So far we are keeping to the original schedule. We will be focusing on optimization and next start working on our stretch goals.
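For reference, an eye aspect ratio can be computed from six landmarks around one eye. The sketch below assumes the widely used six-point formulation (two vertical lid distances over twice the horizontal eye width); the landmark ordering and the sample coordinates are assumptions, not necessarily what our tracker uses.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def _dist(a: Point, b: Point) -> float:
    # math.hypot keeps this compatible with Python 3.6.
    return math.hypot(a[0] - b[0], a[1] - b[1])


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """eye = [p1..p6]: p1/p4 are the eye corners, p2/p3 the upper lid, p6/p5 the lower lid."""
    if len(eye) != 6:
        raise ValueError("expected six landmarks")
    p1, p2, p3, p4, p5, p6 = eye
    horizontal = _dist(p1, p4)
    if horizontal == 0:
        raise ValueError("degenerate eye: corners coincide")
    vertical = _dist(p2, p6) + _dist(p3, p5)
    return vertical / (2.0 * horizontal)


# Made-up landmark coordinates for an open and a nearly closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]

print(eye_aspect_ratio(open_eye), eye_aspect_ratio(closed_eye))
```

The ratio drops toward zero as the lids close and stays roughly the same when the face moves nearer or farther from the camera, which is why it is convenient to compare against a per-user calibrated value.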

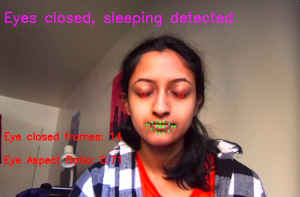

- Eyes closed detection:

Jananni’s Status Report for 4/3

- This week my primary goal was working through and fleshing out the calibration process, specifically making it more user friendly and putting the pieces together. I first spent some time figuring out the best way to interact with the user. Initially I thought tkinter would be easy and feasible. My initial approach was to have a button the user would click when they want to take their initial calibration pictures. But after some initial research and coding and a lot of bugs, I realized cv2 does not easily fit into tkinter. So instead I decided to work with just cv2 and take the picture for the user once their head is within a drawn ellipse. This will be easier for the user, as they just have to position their face, and it guarantees we get the picture and values we need. Once the calibration process is over, I store the eye aspect ratio and mouth height in a text file. Adriana and I decided to do this to transfer the data from the calibration process to the main process so that the processes are independent of each other. Here is a screen recording of the progress.

- Based on our schedule I am on track. I aimed to get the baseline calibration working correctly with a simple user interface.

- Next week I plan to start working on pose detection and possible code changes in order to be compatible with the board.
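The two calibration ideas above, checking that the face sits inside the drawn ellipse and handing the values to the main process through a text file, can be sketched as follows. Everything named here is hypothetical: the helper functions, the landmark points, the ellipse size and the one-line file format are assumptions for illustration.

```python
import os
import tempfile
from typing import Iterable, Tuple

Point = Tuple[float, float]


def inside_ellipse(point: Point, center: Point, axes: Point) -> bool:
    """True if point lies inside an axis-aligned ellipse with semi-axes (a, b)."""
    (px, py), (cx, cy), (a, b) = point, center, axes
    return ((px - cx) / a) ** 2 + ((py - cy) / b) ** 2 <= 1.0


def face_aligned(landmarks: Iterable[Point], center: Point, axes: Point) -> bool:
    # Assumption: the face counts as aligned when every landmark is inside.
    return all(inside_ellipse(p, center, axes) for p in landmarks)


def save_calibration(path: str, eye_aspect_ratio: float, mouth_height: float) -> None:
    # Assumed format: both values on one line, separated by a space.
    with open(path, "w") as f:
        f.write("{} {}\n".format(eye_aspect_ratio, mouth_height))


def load_calibration(path: str) -> Tuple[float, float]:
    with open(path) as f:
        ear, mouth = f.read().split()
    return float(ear), float(mouth)


# Made-up ellipse and landmark positions in pixels.
center, axes = (320, 240), (100, 140)
landmarks = [(320, 240), (260, 200), (380, 200), (320, 330)]
print(face_aligned(landmarks, center, axes))

path = os.path.join(tempfile.mkdtemp(), "calibration.txt")
save_calibration(path, 0.31, 12.0)
print(load_calibration(path))
```

`cv2.ellipse` also takes its `axes` argument as half the length of each main axis, so the same `center` and `axes` values could be used both for drawing the guide and for this check.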

Team Status Report for 3/27

- This week we continued integration, and the biggest potential issue we ran into was the compute power of the board. Once the camera was connected, we quickly realized that the board had a delay of about 2 seconds. With a decreased frame rate of 5 frames per second we had a delay of about half a second. We are worried that with our heavy computation the delay will get larger. We expected this, and we are continuing with our plan to optimize for faster speeds and, in the worst case, put some computation on AWS to lighten the load on the board.

- No changes were made to our requirements, specs or block diagrams.

- This week we have caught up to our original schedule and we plan to continue front loading our work so that integration will have more time.