This week, I worked on breaking the landmarking function into independent pieces to find the bottleneck, that is, which part of the code takes the longest to run. This led me to work on running our landmarking functions, specifically the TensorFlow model we use for predictions, on the GPU of our Xavier board. We then turned on the appropriate flags and settings on the board to enable TensorFlow GPU support.

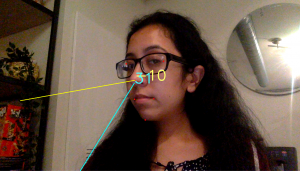
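As a rough illustration of the kind of per-stage timing described above, here is a minimal sketch using only the Python standard library. The stage names `detect_face` and `predict_landmarks` and their sleep-based bodies are hypothetical stand-ins, not our actual pipeline code; the point is only to show how accumulated timings can reveal the slowest step.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Accumulated wall-clock seconds per named stage.
stage_totals = defaultdict(float)

@contextmanager
def timed(stage):
    """Add the elapsed time of the enclosed block to stage_totals[stage]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        stage_totals[stage] += time.perf_counter() - start

# Hypothetical stand-ins for the real pipeline stages.
def detect_face(frame):
    time.sleep(0.002)
    return frame

def predict_landmarks(face):
    time.sleep(0.02)  # pretend model inference is the slow step
    return [(0, 0)] * 68

def process_frame(frame):
    with timed("detect_face"):
        face = detect_face(frame)
    with timed("predict_landmarks"):
        landmarks = predict_landmarks(face)
    return landmarks

def slowest_stage():
    """Return the name of the stage with the largest accumulated time."""
    return max(stage_totals, key=stage_totals.get)

# Time a few frames and print the stages from slowest to fastest.
for _ in range(5):
    process_frame(frame=None)
for stage, total in sorted(stage_totals.items(), key=lambda kv: -kv[1]):
    print(f"{stage}: {total:.3f} s")
```

Once a model step stands out like this, one way to confirm that TensorFlow can actually see the GPU is to check that `tf.config.list_physical_devices("GPU")` returns a non-empty list.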

I also looked into how far the face can be turned away from the camera while eye and mouth detection stay accurate. Mouth detection turned out to be very accurate even with the head turned about 75 degrees from the center of the camera. However, eye detection began giving inaccurate results once one of the eyes was no longer visible, which usually happens at around 30 degrees. We have therefore concluded that our application works best when the user’s head is within +/- 30 degrees of the center of the camera.
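The angle limits above could be encoded as a simple check like the sketch below. It assumes a head yaw estimate in degrees is already available (how that angle is obtained is not covered here), and the constant and function names are hypothetical. The 30 and 75 degree values are the approximate figures from our observations, not exact cutoffs.

```python
# Approximate limits observed in testing (degrees from the camera center).
EYE_MAX_YAW_DEG = 30.0
MOUTH_MAX_YAW_DEG = 75.0

def trusted_detectors(yaw_deg):
    """Report which detectors are expected to be reliable at this head yaw."""
    yaw = abs(yaw_deg)  # turning left or right is treated the same
    return {
        "eyes": yaw <= EYE_MAX_YAW_DEG,
        "mouth": yaw <= MOUTH_MAX_YAW_DEG,
    }

def in_supported_range(yaw_deg):
    """True only when both eye and mouth detection are expected to be reliable."""
    return all(trusted_detectors(yaw_deg).values())
```

For example, at a yaw of 45 degrees this sketch would still trust the mouth detector but not the eye detector, so the frame would fall outside the supported range.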

Lastly, I worked on the user flow for how the user will turn the application on and off, and which approach would be least distracting for the user. As next steps, Jananni and I are creating the user interface for when the device is turned on and off. This should be completed by Thursday, which is when we are putting a “stop” on coding.

I am currently on schedule, and our goal is to be completely done with our code by Thursday. We will then spend the remainder of our time testing in the car and gathering real-time data.