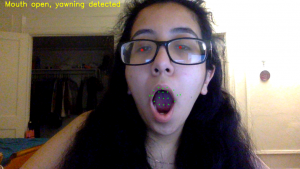

This week most of my time went to our classification algorithm. Now that we have working mouth and eye detection, we need to decide when the user should be notified that they appear distracted. For the eyes, we look for when they are closed. I use the eye calibration value that Jananni has worked on as a baseline and flag the eyes as closed when the current measurement drops to about half of that value. Similarly, for the mouth, I check for a yawn by comparing against the open-mouth calibration value and flagging when the user's mouth is currently open wider than that. When either condition is met, an alert is raised to warn the user.
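The two checks described above can be sketched roughly as follows. This is only an illustration and not our actual code: the `Calibration` class, the function names, and the exact `0.5` ratio are assumptions made for the example, and the measurements are treated as plain numbers in whatever units the detectors produce.

```python
from dataclasses import dataclass


@dataclass
class Calibration:
    """Hypothetical container for the per-user calibration values."""
    open_eye: float    # eye measurement recorded during eye calibration
    open_mouth: float  # mouth opening recorded during open-mouth calibration


# Assumed ratio: eyes count as closed at about half of the calibrated value.
EYE_CLOSED_RATIO = 0.5


def eyes_closed(eye_value: float, calib: Calibration) -> bool:
    """True when the current eye measurement is at or below about half of the baseline."""
    return eye_value <= EYE_CLOSED_RATIO * calib.open_eye


def yawning(mouth_value: float, calib: Calibration) -> bool:
    """True when the mouth is open wider than the calibrated open-mouth value."""
    return mouth_value > calib.open_mouth


def should_alert(eye_value: float, mouth_value: float, calib: Calibration) -> bool:
    """Alert when either condition is met."""
    return eyes_closed(eye_value, calib) or yawning(mouth_value, calib)
```

For example, with `Calibration(open_eye=10.0, open_mouth=20.0)`, an eye measurement of `4.0` or a mouth measurement of `25.0` would each trigger an alert on its own. In practice the ratio would probably need tuning per user, and requiring the condition to hold over several frames might help avoid alerting on ordinary blinks.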

This week I also helped both Evann and Jananni put our eye detection algorithm on the board. We are currently improving how the code runs on the board, and Evann is spearheading that effort. Another part of the project I worked on is the integration of our eye tracker and mouth tracker. They used to be two separate algorithms, but I have combined them so that they can run simultaneously. This involved refactoring a lot of our existing code, but it will be very useful for us. My progress is currently on schedule, and next I will look into how to incorporate an actual sound for the alert instead of text on the screen. I also hope to start improving our algorithm in both accuracy and speed, since putting it on the board will degrade its performance.