Over the past few weeks, I worked on a final model for fruit detection. I started with a pre-built YOLO model and also tried developing a custom model. The custom model was not noticeably more accurate and did not reduce computation time, so I decided that we’d continue with the YOLO model. I tested its detection accuracy on a Kaggle dataset of around 250 images, chosen because the images resemble the ones we will capture under our lighting conditions and background. None of the bananas were misclassified, while around 3% of the apples and around 3% of the oranges were misclassified. Detection is currently slow, at around 4 seconds per image. However, based on the speedup I looked up for the Nano, this should work out for our purposes.
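As a rough illustration of how per-fruit misclassification rates like these can be computed, here is a minimal sketch. It assumes the detector's output has already been reduced to one predicted label per image; the function name `misclassification_rates` and the sample labels are hypothetical and are not our actual test data.

```python
from collections import defaultdict


def misclassification_rates(pairs):
    """Return {true_label: fraction of images that received a different label}."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for true_label, predicted_label in pairs:
        totals[true_label] += 1
        if predicted_label != true_label:
            errors[true_label] += 1
    return {label: errors[label] / totals[label] for label in totals}


# Hypothetical toy labels, only to show the call; not our results.
sample = [
    ("banana", "banana"),
    ("apple", "apple"),
    ("apple", "orange"),
    ("orange", "orange"),
]
rates = misclassification_rates(sample)
```

Reporting the rate per true class, rather than one overall number, keeps a weak class from being hidden by the others.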

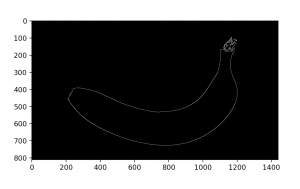

I also integrated all of the code we had, so we now have code ready for the Nano that reads the image, processes it, passes it to our fruit detection and classification algorithm, and outputs whether the fruit is rotten. The integrated code also includes our check for whether the fruit is partially or completely in the frame.
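One simple way to decide whether a fruit is completely in the frame is to check that its bounding box does not touch the image border. The sketch below is illustrative rather than a copy of our implementation: it assumes the detector returns a pixel bounding box as `(x_min, y_min, x_max, y_max)`, and the function name and `margin` parameter are hypothetical.

```python
def is_fully_in_frame(box, image_width, image_height, margin=2):
    """Return True if the box stays at least `margin` pixels inside the image.

    `box` is (x_min, y_min, x_max, y_max) in pixels (assumed format).
    """
    x_min, y_min, x_max, y_max = box
    return (
        x_min >= margin
        and y_min >= margin
        and x_max <= image_width - margin
        and y_max <= image_height - margin
    )


# Hypothetical boxes on a 640x480 image.
centered = is_fully_in_frame((100, 80, 300, 260), 640, 480)
cut_off_left = is_fully_in_frame((0, 80, 200, 260), 640, 480)
```

A frame that fails this check could be skipped so that classification only runs once the whole fruit is visible.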

For the apples and oranges, I tested the image segmentation and classification on a very large dataset, but it did not perform well. I plan to tune the rottenness threshold I’ve set while Ishita Kumar improves the masks we get, so that we can improve accuracy.
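Tuning the threshold can be done with a simple sweep over candidate values on labeled images. This is a minimal sketch under an assumption: each image is reduced to a single rottenness score (for example, the fraction of masked pixels flagged as rotten), and a fruit is called rotten when its score is at or above the threshold. The function name, scores, and candidate values are hypothetical.

```python
def best_threshold(scores, is_rotten, candidates):
    """Return (threshold, accuracy) with the highest accuracy.

    Ties keep the first candidate that reached that accuracy.
    """
    best = (None, -1.0)
    for threshold in candidates:
        correct = sum(
            (score >= threshold) == label
            for score, label in zip(scores, is_rotten)
        )
        accuracy = correct / len(scores)
        if accuracy > best[1]:
            best = (threshold, accuracy)
    return best


# Hypothetical scores and labels, only to show the call.
scores = [0.05, 0.10, 0.30, 0.45, 0.60]
labels = [False, False, True, True, True]
candidates = [0.1, 0.2, 0.3, 0.4, 0.5]
threshold, accuracy = best_threshold(scores, labels, candidates)
```

In practice the sweep should be run on images held out from the ones used to choose the threshold, and separately for apples and oranges if their scores are distributed differently.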

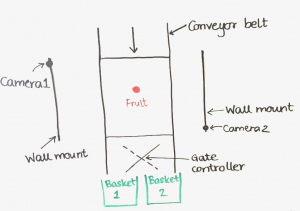

As for the integration, we plan to write the code for the Raspberry Pi that controls the gate in the upcoming week. We also plan to set up the conveyor belt soon and run preliminary tests of our code on a temporary physical setup, without the gate for now.

The fruit detection sometimes labels apples as oranges, and in a few instances it fails to detect extremely rotten bananas, so I’ll look into whether that can be improved.
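To see which mistakes dominate, it helps to count errors by (true label, predicted label) pair, treating a missed detection as a prediction of `None`. A minimal sketch, assuming one prediction per image; the function name and sample pairs are hypothetical.

```python
from collections import Counter


def confusion_counts(pairs):
    """Count only the wrong (true_label, predicted_label) pairs.

    A predicted label of None stands for "nothing detected".
    """
    return Counter(
        (true_label, predicted_label)
        for true_label, predicted_label in pairs
        if true_label != predicted_label
    )


# Hypothetical pairs, only to show the call.
sample = [
    ("apple", "orange"),
    ("apple", "apple"),
    ("banana", None),
    ("apple", "orange"),
]
counts = confusion_counts(sample)
most_common_error = counts.most_common(1)
```

Separating confusions from missed detections matters here because the two likely have different causes and fixes.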

For my next steps, I need to test the integrated code on the Nano with the conveyor belt and real fruits. Once that’s working, we will start getting the Raspberry Pi and servo to work. In parallel, I can tune the threshold for rottenness classification for apples and oranges. The AlexNet classifier isn’t urgent since our classification system currently seems to be meeting timing and accuracy requirements, but I’ll work on implementing it once we’re in good shape with the above items.