Hello! This week, I tweaked the testing setup. To start, the testing video now runs at around 20 fps. I am still adjusting some parameters, but I think I will go with this particular setup.

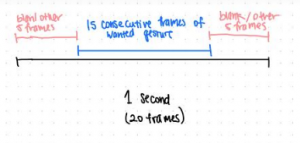

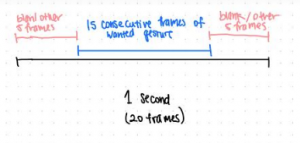

I want to switch away from the approach I was using before, where one gesture went straight into the next, to better simulate the live testing that I talked about with Marios. If we go with 20 frames per second (which we might tweak depending on how good the results are; we can go up to 28 fps if necessary), I want at least three quarters of those frames, 15 in a row, to show the gesture I am trying to get the Jetson to read. The five frames before or after can be either blank or another gesture. That way, nothing other than the intended gesture can fill 15 consecutive frames at any point. Obviously, real-life testing would have more variables; for example, the gesture might not be held consecutively. But I think this is a good metric to start with.
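To make the frame layout concrete, here is a minimal sketch of how a test sequence like this could be labeled and checked, assuming 15 held frames followed by 5 filler frames at 20 fps. The gesture names, the `blank` label, and the function names `build_labels` and `detect` are all hypothetical; this is not the actual test harness, only an illustration of the rule.

```python
from itertools import groupby

FPS = 20           # frames per second of the test video
HOLD_FRAMES = 15   # consecutive frames showing the intended gesture
GAP_FRAMES = 5     # blank (or other-gesture) frames between holds
BLANK = "blank"    # hypothetical label for an empty frame


def build_labels(gestures, hold=HOLD_FRAMES, gap=GAP_FRAMES, filler=BLANK):
    """Return one label per frame: each gesture held, then filler frames."""
    labels = []
    for gesture in gestures:
        labels.extend([gesture] * hold)
        labels.extend([filler] * gap)
    return labels


def detect(labels, threshold=HOLD_FRAMES, ignore=(BLANK,)):
    """Report a gesture each time it fills at least `threshold` frames in a row."""
    detected = []
    for label, run in groupby(labels):
        if label not in ignore and len(list(run)) >= threshold:
            detected.append(label)
    return detected


# Hypothetical gesture names, one second of video per gesture.
labels = build_labels(["fist", "palm", "peace"])
print(len(labels) / FPS)  # duration of the sequence in seconds
print(detect(labels))
```

Because the filler runs are only 5 frames long, they can never reach the 15-frame threshold, so only the held gestures are reported. Shortening a hold to 14 frames makes that gesture drop out, which is the kind of edge case the real-life variables would introduce.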

Here is a clip of a video that incorporates the “empty space” between gestures.

From this video, you can see the small gaps of blank space between each gesture. At this point, I haven’t incorporated other error gestures into it yet (and I want to think more about that). I think this is pretty much how we would do live testing in a normal scenario: the user holds up the gesture for around a second, quickly switches to the next one, and so on.

Next week, I plan on getting the AWS situation set up. I need some help learning how to communicate with it from the Jetson. As long as I am able to send things to AWS and receive things back, I would consider it a success (OpenPose goes on it, but that is within Sung’s realm). I also want to test out the sampling for the camera and see if I can adjust it on the fly (i.e. some command -fps 20 to get it sampling at 20 fps and sending those frames directly to AWS).
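If the camera itself turns out not to accept a frame-rate setting on the fly, one fallback would be to drop frames in software before sending them. The sketch below is only that: it assumes the camera delivers frames at a fixed higher rate (28 fps is used as an example), and the function name `frames_to_keep` is hypothetical. It does not touch the camera or AWS.

```python
def frames_to_keep(source_fps, target_fps, n_frames):
    """Return indices of source frames to keep so output approximates target_fps.

    Uses an integer accumulator so the kept frames are spread evenly
    and no rounding error builds up over long recordings.
    """
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    if target_fps >= source_fps:
        # Cannot sample faster than the source: keep everything.
        return list(range(n_frames))

    kept = []
    acc = 0
    for i in range(n_frames):
        acc += target_fps
        if acc >= source_fps:
            acc -= source_fps
            kept.append(i)
    return kept


# One second of a 28 fps stream reduced to 20 fps.
indices = frames_to_keep(28, 20, 28)
print(len(indices))
```

Changing `target_fps` between calls would give the "adjust on the fly" behavior without restarting the camera, at the cost of capturing frames that are then thrown away.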