Hello world!! ahahah i’m so funny

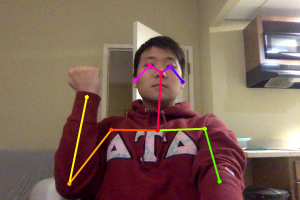

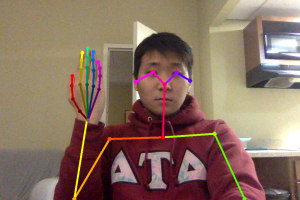

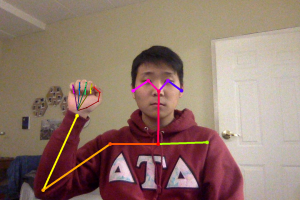

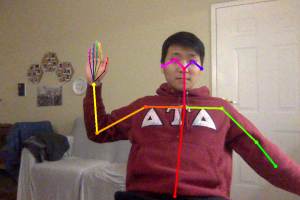

This week, I spent most of my time collecting data for our project. Data collection has a bottleneck: OpenPose segfaults when I run it on an image directory of more than 50 images. To process more than 50 images (I would ideally like 100 images per gesture), I have to rerun OpenPose on a new directory of images. A batch of 50 images takes around 30 minutes to finish, so I need to check in every 30 minutes to start the next run. Because I have to be awake to do that, the process is slower than I expected it to be.
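One way to avoid babysitting each run would be to split the images into directories of at most 50 and process them one after another. The following is only a rough sketch of that idea, not what I currently run: the helper names (`make_batches`, `stage_batches`, `run_openpose`), the `batch_000`-style directory names, and the path to the OpenPose binary are all assumptions, and the flags are the standard OpenPose demo flags for reading an image directory and writing JSON keypoints.

```python
import shutil
import subprocess
from pathlib import Path

IMAGE_EXTS = {".png", ".jpg", ".jpeg"}
BATCH_SIZE = 50  # largest directory size that ran without a segfault


def make_batches(paths, batch_size=BATCH_SIZE):
    """Split paths into consecutive lists of at most batch_size items."""
    paths = sorted(paths)
    return [paths[i:i + batch_size] for i in range(0, len(paths), batch_size)]


def stage_batches(image_dir, work_dir, batch_size=BATCH_SIZE):
    """Copy images into work_dir/batch_000, batch_001, ... and return those dirs."""
    images = [p for p in Path(image_dir).iterdir()
              if p.suffix.lower() in IMAGE_EXTS]
    batch_dirs = []
    for n, batch in enumerate(make_batches(images, batch_size)):
        batch_dir = Path(work_dir) / f"batch_{n:03d}"
        batch_dir.mkdir(parents=True, exist_ok=True)
        for img in batch:
            shutil.copy2(img, batch_dir / img.name)
        batch_dirs.append(batch_dir)
    return batch_dirs


def run_openpose(batch_dir, json_dir,
                 openpose_bin="./build/examples/openpose/openpose.bin"):
    """Run OpenPose on one batch; openpose_bin is an assumed install path."""
    cmd = [
        openpose_bin,
        "--image_dir", str(batch_dir),
        "--write_json", str(json_dir),
        "--display", "0",      # no GUI window
        "--render_pose", "0",  # skip rendering, only keypoints are needed
    ]
    # Return the exit code so a crashed batch can be spotted and rerun.
    return subprocess.run(cmd).returncode
```

With something like this, a loop over `stage_batches("images", "batches")` that calls `run_openpose` on each directory could run unattended overnight, and any batch with a nonzero return code could be retried afterwards.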

Other than data collection, I’ve been working on the classification model for our project. I am trying to integrate a pretrained network into it. I have found some examples that start from a pretrained network and train it further on new data, so I am trying to apply the same approach to this project.

Here is my restructured SOW, along with my Gantt chart, for further details.