Wow!

Author: cjye

Demo Video

Team Status Report for 04/26

Sung is doing well this week. The parts are ready for more extensive testing and the demo. OpenPose runs a lot faster on AWS with a GPU, and we now have more data, and thus more accurate training. OpenCV is a lost cause, so goodbye, OpenCV.

The presentation will be this week (good luck, Sung)! We should have many of the video’s elements filmed by the end of this week.

Claire’s Status Report for 04/26

This week, I got a lot less done because all of my classes are assigning everything now. I have generated three videos for testing, and we will be getting results this week for the presentation and then the demo.

Next week, we will have our presentation. More extensive testing will be done and the demo video will be filmed.

Claire’s Status Report for 04/18

I generated some additional random test inputs, mostly using our makeshift ASL. I compiled the most popular search queries from the last 12 months on Google Trends for the keywords who, what, when, where, why, and how, and then removed duplicate queries. These queries can now be included in the list of randomized normal ones. Here is a GIF showing the question “what is a vsco girl” with all the appropriate spaces.
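A minimal Python sketch of the filtering and deduplication step. It assumes the exported Google Trends queries are already in a Python list of strings; the export itself is not shown. The name `dedupe_queries` is hypothetical. Treating queries that differ only in case or spacing as duplicates is also an assumption, which suits fingerspelled input that has no case anyway.

```python
# Question words used as the Google Trends keywords.
QUESTION_WORDS = {"who", "what", "when", "where", "why", "how"}


def dedupe_queries(queries):
    """Keep queries whose first word is a question word, dropping duplicates.

    Queries are normalized to lowercase with single spaces (an assumption),
    and the first occurrence of each normalized query keeps its position.
    """
    seen = set()
    unique = []
    for query in queries:
        normalized = " ".join(query.lower().split())
        first_word = normalized.split(" ", 1)[0]
        if first_word not in QUESTION_WORDS or normalized in seen:
            continue
        seen.add(normalized)
        unique.append(normalized)
    return unique
```

Checking the first word, rather than using `startswith`, keeps words like “however” from slipping in as “how” questions.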

(Never mind, GIFs don’t seem to work here. Try this link.)

Aside from that, I also got the camera working and set up, as discussed at the Wednesday check-in.

Next week, I want to make sure that we have all the AWS instances set up and running in conjunction with the Nano.

Claire’s Status Report for 4/11

This week, I got the AWS setup done, and I think we finalized how we want it to run in conjunction with the Jetson device. We have scripts ready on both sides, and we now have a way of SCP-ing images from the device to the server. The server then deletes the images as they are processed. The FPS issue did not end up getting resolved: the device is still sampling at a much lower rate than I would like (roughly 10 fps at best). However, the rate can now be adjusted.
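A minimal sketch of the server side of this process-then-delete loop. It assumes the Jetson copies JPEG files into a single incoming directory. `drain_once`, `watch`, and the `process_image` callback (which would wrap OpenPose) are hypothetical names, not our actual scripts.

```python
import time
from pathlib import Path


def drain_once(incoming_dir, process_image, pattern="*.jpg"):
    """Process every matching image currently in incoming_dir, oldest first.

    Each file is deleted only after process_image (hypothetical) returns,
    so a crash mid-processing leaves the image in place to retry.
    Returns the number of images handled.
    """
    paths = sorted(Path(incoming_dir).glob(pattern), key=lambda p: p.stat().st_mtime)
    for path in paths:
        process_image(path)
        path.unlink()
    return len(paths)


def watch(incoming_dir, process_image, poll_seconds=0.1):
    """Poll forever, sleeping briefly whenever the directory is empty."""
    while True:
        if drain_once(incoming_dir, process_image) == 0:
            time.sleep(poll_seconds)
```

One caveat: the server may pick up a file while scp is still writing it. A common fix is to copy each frame under a temporary name (for example `frame.jpg.part`, which `*.jpg` does not match) and then rename it into place. A rename within one filesystem is atomic on POSIX.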

Next week, I will be working further on the OpenCV side and adding more commands to the list of possible testing commands. I want to ask some basic who/what/when/where/why + how questions, but I need to think about how to generate them. I also want to be able to adjust the FPS for testing on the fly while keeping roughly the same “ratio” of signal to noise. The biggest hurdle right now is really the sampling, which I think would be difficult on a device like the Jetson Nano. I will try some more things and will definitely have scripts running with Sung’s OpenPose software by the end of next week.

Team Status Report for 04/04

Hello.

This week, we continued to work on our respective projects based on the individualized Gantt chart. Everything is going well, and we plan on reconvening next week to “integrate” remotely. We are sharing our progress with each other via GitHub, so that we can take information from each other as needed. We started collecting more hand-gesture data through social media, but we did not get many replies. Hopefully, next week we will get more submissions.

Claire’s Status Report for 4/4

Hello! This week, I did some tweaking with the testing. To start, I am now generating the testing video at around 20 fps. I am still adjusting some parameters, but I think I will go with this particular setup.

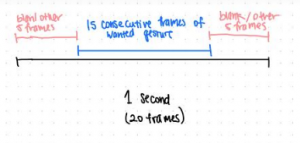

I want to switch away from my earlier approach of going straight from one gesture to the next, to better simulate the live testing that I talked about with Marios. Say we go with 20 frames per second. We might tweak that depending on how good the results are, and we can go up to 28 fps if necessary. For the gesture I am trying to get the Jetson to read, I want at least 3/4 of the frames (15 of 20) to show the correct gesture consecutively. The remaining five frames before or after can be either blank or another gesture. That way, it should be guaranteed that nothing other than the intended gesture ever appears for 15 consecutive frames. Obviously, real-life testing would have more variables; for example, the gesture might not be held consecutively. But I think this is a good metric to start with.
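A sketch of how such a test sequence could be generated as a list of per-frame labels. When writing the video, image paths would take the place of the labels. `gesture_segment`, `build_sequence`, and `longest_run` are hypothetical helpers. `hold_fraction=0.75` encodes the 3/4 rule. Because the hold and filler counts are derived from `fps`, changing the frame rate keeps the same signal-to-noise ratio.

```python
import math
import random

BLANK = "_"  # label for an empty (no-hand) frame


def gesture_segment(gesture, fps=20, hold_fraction=0.75, noise_labels=(BLANK,), rng=random):
    """Frame labels for one intended gesture, fps frames long (about one second).

    The gesture fills at least hold_fraction of the frames in one unbroken run.
    The leftover frames are split randomly before and after it and filled with
    labels drawn from noise_labels (blanks, or hypothetical error gestures).
    """
    hold = math.ceil(fps * hold_fraction)
    filler = fps - hold
    before = rng.randint(0, filler)  # randint includes both endpoints
    after = filler - before
    return (
        [rng.choice(noise_labels) for _ in range(before)]
        + [gesture] * hold
        + [rng.choice(noise_labels) for _ in range(after)]
    )


def build_sequence(gestures, **segment_options):
    """Concatenate one segment per gesture into a full test sequence."""
    frames = []
    for gesture in gestures:
        frames.extend(gesture_segment(gesture, **segment_options))
    return frames


def longest_run(frames, label):
    """Length of the longest consecutive run of `label` in frames."""
    best = current = 0
    for frame in frames:
        current = current + 1 if frame == label else 0
        best = max(best, current)
    return best
```

At 20 fps each segment has at most 5 filler frames. Filler from two neighbouring segments can therefore join into a run of at most 10 frames (5 after one gesture plus 5 before the next), which is why no blank run can reach 15.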

Here is a clip of a video that has the incorporated “empty space” between gestures.

From this video, you can see the small gaps of blank space between gestures. At this point, I haven’t incorporated other error gestures into it yet (I want to think more about that). I think this is pretty much how live testing would go in a normal scenario: the user holds up a gesture for around a second, quickly switches to the next one, and so on.

Next week, I plan on getting the AWS setup done. I need some help learning how to communicate with it from the Jetson. As long as I am able to send and receive things from AWS, I would consider it a success (OpenPose goes on it, but that is within Sung’s realm). I also want to test out the sampling for the camera and see if I can adjust it on the fly (e.g. some command like -fps 20 to get it sampling at 20 fps and sending those frames directly to AWS).
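One way to make the rate adjustable is to drop frames in software. This sketch assumes the camera delivers frames at a fixed native rate (30 fps here, a hypothetical default). `frames_to_keep` and `parse_args` are hypothetical; the `-fps` flag mirrors the command imagined above. Reading from the camera and sending to AWS are left out.

```python
import argparse


def frames_to_keep(native_fps, target_fps, n_frames):
    """Indices of camera frames to keep so that about target_fps of every
    native_fps frames survive, spread evenly rather than bunched together.

    Frame i is kept when floor(i * target / native) advances; integer floor
    division avoids floating-point drift over long captures.
    """
    if not 0 < target_fps <= native_fps:
        raise ValueError("target_fps must be in (0, native_fps]")
    return [
        i
        for i in range(n_frames)
        if i == 0 or (i * target_fps) // native_fps > ((i - 1) * target_fps) // native_fps
    ]


def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="Sample camera frames at an adjustable rate.")
    # "-fps" mirrors the command imagined in the report; "--fps" is the usual spelling.
    parser.add_argument("-fps", "--fps", type=int, default=20, help="target sampling rate")
    parser.add_argument("--native-fps", type=int, default=30,
                        help="camera's native frame rate (assumed; check the camera)")
    return parser.parse_args(argv)
```

Frame dropping can only lower the rate. If the camera pipeline itself delivers fewer frames than the target, this does not help.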

Claire’s Status Report for 03/28

This week, I managed to get the basic functionality of the randomly generated testing working. I have a Python program that can read images from a directory and create videos from them through OpenCV. I expected this to be possible in Python, but it was good to see it working.

Over the next two days, I want to refine the script in terms of the range of possible inputs for the test. For example, I want to see what range of words I will deem “acceptable” as part of a command for Google. In particular, I think the “what is?” command would be the hardest to execute correctly with good inputs. It is not its own designated command, but it is a feature we plan on fully implementing. Specifically, we want to eliminate words like “it”, “she”, and “her”, which hold no meaning in a “what is” context. It would be nice to include some proper nouns too. These are all just for interest and proof of concept (since no one will actually be using our product, we want to show that it is as functional as a normal Google Home).
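A minimal sketch of that filtering, assuming candidate terms come in as a plain list of strings. The stop list and the name `what_is_queries` are hypothetical. Output is lowercased because fingerspelled input carries no case, so proper nouns such as city names still pass through.

```python
# Hypothetical stop list: pronouns that carry no meaning after "what is".
STOP_WORDS = {"it", "she", "he", "her", "him", "they", "them", "this", "that"}


def what_is_queries(terms):
    """Build 'what is ...' test commands, skipping blank or stop-word-only terms."""
    queries = []
    for term in terms:
        words = term.lower().split()
        if not words or all(word in STOP_WORDS for word in words):
            continue
        queries.append("what is " + " ".join(words))
    return queries
```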

Another concern that came up is spacing. After some thought, I think it would make sense to put some “white space” between gestures, as a person signing normally would. Someone who actually signs in real life will probably do one letter and then switch to the next in less than a second. This spacing could be important because it will help us distinguish repeated letters. I didn’t think of this before, but now that I have, I think I will add some randomly timed breaks where the video is just blank to imitate that (I need to explore this in the next few days as well). This could greatly improve both our accuracy and our simulation of a real-world situation.
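To show why the blanks matter for repeated letters, here is a hypothetical decoder on the recognition side, not something we have built. It collapses each run of identical frame labels into one letter and ignores short runs as noise. It emits the same letter twice only when a blank separates the two runs. `decode_letters`, the `_` blank label, and `min_run=10` are all assumptions.

```python
from itertools import groupby

BLANK = "_"  # label for an empty (no-hand) frame


def decode_letters(frames, min_run=10):
    """Turn per-frame labels into a string of letters.

    - A run of the same label counts once, however long it is held.
    - Non-blank runs shorter than min_run are treated as noise and skipped.
    - A blank run (of any length) acts as a separator, so the same letter is
      emitted twice in a row only if a blank appears between the two runs.
    """
    letters = []
    last = BLANK  # last emitted letter, or BLANK right after a separator
    for label, run in groupby(frames):
        length = sum(1 for _ in run)
        if label == BLANK:
            last = BLANK
        elif length >= min_run and label != last:
            letters.append(label)
            last = label
    return "".join(letters)
```

Without the blank, the double “l” in “hello” becomes a single 30-frame run and decodes as one letter.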

Claire’s Status Report for 03/21

I successfully installed the WiFi card for the Jetson Nano and tested its speed. The upload speed is around 15 Mbps, which is a little low for what we want to do. This could make the AWS interaction a little challenging in the future, but we will have to see.
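A rough way to see what 15 Mbps allows is to compute the average per-frame size budget. The 20 fps figure here is an assumed target rate; it is not something measured in this report. The calculation uses decimal megabits and ignores protocol overhead, so the real budget is somewhat smaller.

```python
def per_frame_budget_bytes(upload_mbps, fps):
    """Largest average frame size (bytes) that fits in the upload link.

    1 Mbps is taken as 1,000,000 bit/s; protocol overhead is ignored.
    """
    bits_per_second = upload_mbps * 1_000_000
    return bits_per_second / 8 / fps


print(per_frame_budget_bytes(15, 20))  # 93750.0 bytes, roughly 94 KB per frame
```

Whether about 94 KB per frame is enough depends on the resolution and compression of the frames we end up sending.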

The AWS account is set up, but the instances aren’t started yet so that we don’t waste money.

The Google Assistant SDK is now fully installed on the Jetson Nano as well. Text input and text output have both been verified to work, but as separate modules. The sample code will need some tweaking to integrate both, but this is promising so far. I also found a way to “trigger” the Google Assistant, so we could combine that with the hand-waving motion to start the Google Assistant, though that might not be completely necessary. Here is the repo for the work being done on that end.

Next week, I will combine text input and output into one module. Right now, it reads from the terminal, but I will also add functionality that allows it to read from files instead (which is how we are going to pass in the deciphered outputs from the gesture recognition algorithms).
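A minimal sketch of reading queries from either the terminal or a file, assuming one query per line. `read_queries` and `run` are hypothetical names. `handle_query` stands in for whatever hook into the Assistant sample code ends up sending the text; no Assistant SDK call is shown here.

```python
import sys


def _clean_lines(lines):
    """Yield stripped, non-empty lines."""
    for line in lines:
        query = line.strip()
        if query:
            yield query


def read_queries(path=None):
    """Yield one query per non-empty line: from stdin by default, or from a file."""
    if path is None:
        yield from _clean_lines(sys.stdin)
    else:
        with open(path, encoding="utf-8") as f:
            yield from _clean_lines(f)


def run(handle_query, path=None):
    """Pass each query to handle_query (hypothetical hook into the Assistant code)."""
    for query in read_queries(path):
        handle_query(query)
```

Keeping stdin as the default preserves the current terminal behaviour, while passing a path lets the gesture recognition output drive the same code.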