Hello from Team *wave* Google!

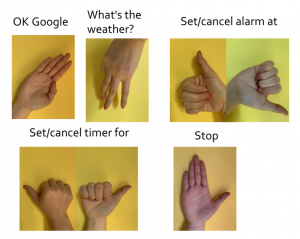

This week we presented our design (great job Claire!!) and worked on our design report. After the presentation, we received useful feedback regarding confusion matrices, which would be a useful addition to our existing design. We had already decided to measure the accuracy of each of our gestures individually, and by combining that information with a confusion matrix, we hope to achieve better results.
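
To make the idea concrete, here is a rough sketch of how per-gesture accuracy and a confusion matrix fit together. The gesture names and the labels below are made up for illustration and are not our real data; rows are the true gesture and columns are the predicted gesture.

```python
from collections import Counter

def confusion_matrix(true_labels, predicted_labels, classes):
    """Count how often each true gesture (row) was predicted as each gesture (column)."""
    counts = Counter(zip(true_labels, predicted_labels))
    return [[counts[(t, p)] for p in classes] for t in classes]

def per_class_accuracy(matrix):
    """Fraction of each true gesture that was classified correctly (diagonal / row total)."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]

# Hypothetical gesture names and labels, for illustration only.
GESTURES = ["wave", "point", "fist"]
true_labels = ["wave", "wave", "wave", "point", "point", "fist", "fist", "fist"]
predicted = ["wave", "wave", "point", "point", "point", "fist", "wave", "fist"]

matrix = confusion_matrix(true_labels, predicted, GESTURES)
accuracy = per_class_accuracy(matrix)

for gesture, row, acc in zip(GESTURES, matrix, accuracy):
    print(f"{gesture:>6}: {row}  accuracy={acc:.2f}")
```

The per-gesture accuracy only tells us which gestures are weak. The off-diagonal entries tell us which gesture they are being mistaken for, which is the part we were missing.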

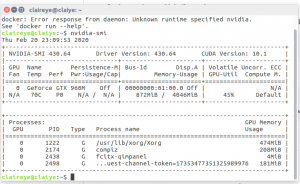

Another important piece of feedback relates to one of the bigger risks of our project, and the one that has put us a bit behind schedule: hardware. This week all the hardware components we ordered finally arrived, allowing us to fully access the Jetson Nano’s capabilities. We had already determined that OpenPose was unlikely to run successfully on the Nano given the performance other groups saw on the Xavier, and we have thus chosen to minimize the dependencies on the Nano and instead run OpenPose on a p2 EC2 instance. We should know much more confidently next week whether OpenCV will have acceptable performance on the Nano, and if not we will strongly consider pivoting to TK or Xavier.

Regarding the other component of the project, the Google Assistant SDK and Web Application, we have made good progress figuring out how to link the two using simple WebSockets. We know that we can get the text response from Google Assistant and relay it to the Web Application over a WebSocket connection. Further experimentation next week will determine in more detail the scope and capabilities of the Google Assistant SDK.
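
As a rough sketch of the relay step, the snippet below wraps a text response in a small JSON message and sends it to every connected client. The message fields, the `relay` helper, and the `FakeClient` stand-in are all assumptions for illustration; in practice the clients would be real WebSocket connections exposing an async `send` method, and the text would come from Google Assistant rather than a hard-coded string.

```python
import asyncio
import json

def make_message(text):
    """Wrap an assistant text response in a JSON envelope (field names are hypothetical)."""
    return json.dumps({"type": "assistant_response", "text": text})

async def relay(text, clients):
    """Send the same message to every connected client concurrently."""
    message = make_message(text)
    await asyncio.gather(*(client.send(message) for client in clients))
    return message

class FakeClient:
    """Stand-in for a WebSocket connection; it only records what it was sent."""
    def __init__(self):
        self.received = []

    async def send(self, message):
        self.received.append(message)

# Made-up response text, standing in for what Google Assistant would return.
demo_client = FakeClient()
asyncio.run(relay("It is 72 degrees and sunny.", [demo_client]))
print(demo_client.received[0])
```

Keeping the message as a small JSON object should make it easy for the Web Application to tell assistant responses apart from any other message types we add later.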

All in all, we are a bit behind schedule, which is exacerbated by Spring Break approaching. However, we still have a good amount of slack, and with clear tasks for next week we hope to make good progress before Spring Break.