HI EVERYONE

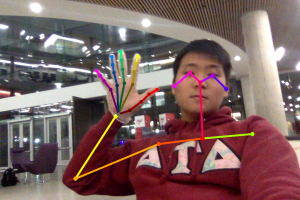

This past week, I spent countless hours trying to get OpenPose running on AWS. After about 3 full days, 8 different images, and 5 different machines, I finally got OpenPose working on Ubuntu 16. It sucks that it works on nothing else, but Ubuntu 16 works, so I guess it's all good. Thanks to the GPU on the AWS EC2 P2 instances, I was able to run a lot of images through OpenPose really quickly and grow the training data to about 5000 samples. This week I'm adding more training data and building a confusion matrix to dig into the few misclassifications.
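For anyone curious what the confusion matrix step looks like, here is a minimal sketch in plain Python. The class names (`sit`, `stand`, `walk`) and the sample predictions are made up for illustration; they are not the project's real labels or results.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred):
    """Count (true label, predicted label) pairs.

    Returns the sorted label list and a square matrix where
    matrix[i][j] is the number of samples whose true label is
    labels[i] and whose predicted label is labels[j].
    """
    labels = sorted(set(y_true) | set(y_pred))
    counts = Counter(zip(y_true, y_pred))
    matrix = [[counts[(t, p)] for p in labels] for t in labels]
    return labels, matrix


# Hypothetical labels, only to show the shape of the output.
y_true = ["sit", "sit", "stand", "stand", "stand", "walk"]
y_pred = ["sit", "stand", "stand", "stand", "walk", "walk"]

labels, matrix = confusion_matrix(y_true, y_pred)
for label, row in zip(labels, matrix):
    print(f"{label:>6}: {row}")
```

Off-diagonal entries are the misclassifications, so the largest off-diagonal cell points to the pair of classes that gets confused most often.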

One thing I found about speed: we want OpenPose to run as fast as possible, and it runs fastest when the image is small and lower quality. An image of about 200 KB takes about 2 seconds on AWS, whereas a 2 MB image takes about 14 seconds. Because of this, we will have to compress images before running them, trading some image quality for a faster run time.
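To get a rough feel for what compression could buy us, here is a back-of-the-envelope sketch based on the two timings above. It assumes run time grows linearly with file size, which is only a guess from two data points, and it treats 2 MB as 2000 KB. The function names are just for illustration.

```python
def fit_line(p1, p2):
    """Return (slope, intercept) of the line through two (x, y) points."""
    (x1, y1), (x2, y2) = p1, p2
    slope = (y2 - y1) / (x2 - x1)
    return slope, y1 - slope * x1


# The two measurements: (file size in KB, seconds per image).
SLOPE, INTERCEPT = fit_line((200, 2.0), (2000, 14.0))


def estimated_seconds(size_kb):
    """Estimated seconds per image under the linear assumption."""
    return INTERCEPT + SLOPE * size_kb


def batch_hours(num_images, size_kb):
    """Estimated hours to process a batch of same-sized images."""
    return num_images * estimated_seconds(size_kb) / 3600


for size_kb in (200, 500, 2000):
    print(f"{size_kb:>5} KB: {estimated_seconds(size_kb):5.1f} s/image, "
          f"{batch_hours(5000, size_kb):5.1f} h for 5000 images")
```

Under this assumption, 5000 images at 200 KB each would take a bit under 3 hours, versus more than 19 hours at 2 MB each. Real timings will depend on image resolution and the OpenPose settings, so treat these numbers as a rough guide only.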