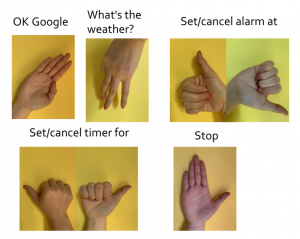

This week, a lot of my work was focused on developing our design review decks, since I am the one presenting. I think the most important thing I did was fully flesh out our set of static gestures for the MVP.

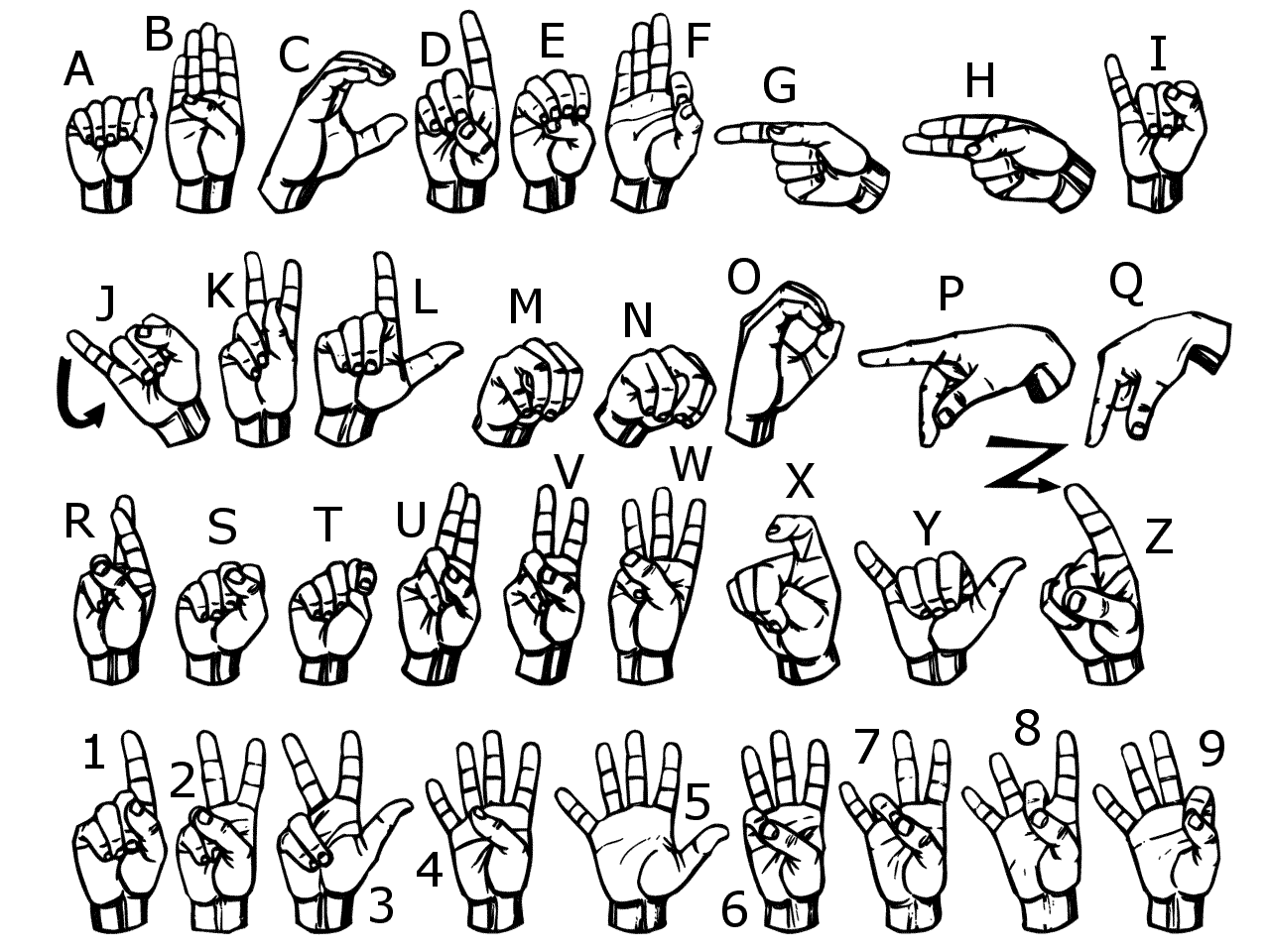

We derived these gestures from ASL fingerspelling. We had to make sure that each gesture was unique (the original set had some overlapping gestures) and, even when unique, distinct enough for the camera to tell apart. K and V are one example of a similar pair. While they look distinct from each other in the image, we felt that they might be hard to tell apart in practice, given how finger length and hand shape vary from one person to another.

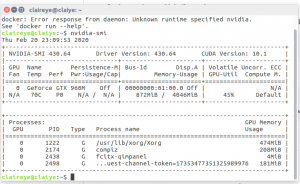

Aside from the decks, I also worked on getting the Nvidia Jetson Nano running. I successfully booted the disk, but because the Nano lacks WiFi, I wasn’t able to get it to run anything too useful. I started a demo and tried my hand at some basic machine learning setup to prep the Nano for image recognition. I am now learning how to train networks on my personal computer using its GPU.

This was surprisingly difficult on my machine because of Secure Boot issues and missing dependencies. After a few hours of installing Python libraries, I reached a point where I was not confident I could fix the remaining error messages.

Aside from that, because our designated camera hasn’t arrived yet, I tried to borrow some webcams from the ECE inventory. Neither worked. One was connected through GPIO and the other through the camera connector, and neither was detected by the Nano despite a few hours of tinkering and searching online. This could be troublesome, especially if the camera connector is broken. For now, though, it is most likely a compatibility issue with the Nano, as neither of the webcams was meant for this particular device. We will just have to wait for our own camera to arrive to find out.
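One quick check I could run once our camera arrives is whether Linux exposes it as a video device at all. The sketch below only lists `/dev/video*` nodes. It assumes the camera driver registers one, which is typical for V4L2 devices but is an assumption for our setup. An empty list would point to a driver or connection problem rather than a bug in our own code.

```python
import glob


def list_video_devices(pattern="/dev/video*"):
    """Return the sorted device nodes matching the pattern (empty list if none)."""
    # Assumption: a detected camera shows up as a /dev/video* node.
    return sorted(glob.glob(pattern))


if __name__ == "__main__":
    devices = list_video_devices()
    if devices:
        print("Video devices found:", ", ".join(devices))
    else:
        print("No video devices detected.")
```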

Progress is still behind schedule, but I feel fairly confident that it will work out. I can start looking into the Google Assistant SDK while waiting for the parts to arrive, as those two tasks do not depend on each other.

Since I am looking for other tasks to do while waiting for hardware, I think the best use of my time right now is to start thinking about the Python scripts for automated testing, and to start trying out the Google Assistant SDK and possibly make my first query.

Thus, my deliverables for next week are the design review report and a small Google Assistant SDK Python program. Even something that just takes in a line of input from the command line and outputs the response in text form would be a good enough proof of concept.
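A minimal sketch of what that proof of concept could look like, assuming the SDK call ends up wrapped in a single function. `send_text_query` is a hypothetical placeholder, not part of the Google Assistant SDK. Here it just echoes the input so the command-line plumbing can be tested before the real call is wired in. The script name `assistant_poc.py` is also made up for illustration.

```python
import sys


def send_text_query(query):
    """Hypothetical placeholder for the real Google Assistant SDK call."""
    # Echo the query so the script can be exercised without credentials.
    return f"(stub) You asked: {query}"


def answer(words, ask=send_text_query):
    """Join command-line words into one query; return the reply, or None if empty."""
    query = " ".join(words).strip()
    if not query:
        return None
    return ask(query)


def main(argv):
    reply = answer(argv[1:])
    if reply is None:
        print("usage: python assistant_poc.py <your question>")
    else:
        print(reply)


if __name__ == "__main__":
    main(sys.argv)
```

Passing the query function in as `ask` means the stub can later be swapped for the real SDK call without touching the command-line handling.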