Haohan Shi

This week I primarily worked on improving the text-to-speech functionality and integrating the LCD screen for next week’s demo.

To send instructions to the LCD screen without blocking our main control system, I designed a non-blocking structure for the LCD control class. The main control system can now send signals to the LCD at any moment, and the LCD reacts accordingly.
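One common way to get this kind of non-blocking behavior is a command queue drained by a background worker thread. The sketch below assumes that pattern; it is not necessarily how our LCD class is built. The class name `LCDController`, its `send`/`close` methods, and the injected `render` callable (standing in for whatever actually drives the screen) are all hypothetical.

```python
import queue
import threading


class LCDController:
    """Hypothetical non-blocking LCD front end.

    send() only enqueues a command and returns immediately; a background
    worker thread does the (possibly slow) drawing via the injected render
    callable, so the main control loop never waits on the screen.
    """

    def __init__(self, render):
        self._render = render              # hypothetical: drives the real LCD
        self._commands = queue.Queue()     # unbounded, so put() never blocks
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def send(self, command, *args):
        # Called from the main control system; returns right away.
        self._commands.put((command, args))

    def _run(self):
        while True:
            command, args = self._commands.get()   # blocks only this worker
            if command is None:                    # sentinel pushed by close()
                break
            self._render(command, *args)

    def close(self):
        # Let the worker finish any queued commands, then wait for it to exit.
        self._commands.put((None, ()))
        self._worker.join()
```

For example, `lcd.send("countdown", 3)` returns immediately even if drawing the countdown takes seconds. Commands are handled in the order they were sent; if only the latest state matters, the worker could instead drain the queue and render just the newest command.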

I also implemented two major functions, countdown and photo taking, as well as some simple control commands. Next, I need to add serial communication to the robot base so that it follows the movement instructions. I will also add some simple commands, such as displaying the current time.

Yanying Zhu

This week I continued working on the movement system. The edge detection system works well, and the robot can now move without falling. I also adjusted the wait time and turn angle slightly to make turning smoother.
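Edge handling of this kind can be pictured as a small reaction policy whose timing and angle are parameters. The sketch below is purely illustrative: the report does not describe the robot base's sensors or API, so the inputs `left_edge`/`right_edge`, the action names, `EdgeEscapeConfig`, and `plan_edge_escape` are hypothetical. It shows how exposing the wait time and turn angle as settings keeps that kind of tuning to a one-line change.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EdgeEscapeConfig:
    # Hypothetical tuning knobs: the report only says the wait time and turn
    # angle were adjusted; these names and default values are illustrative.
    backup_mm: int = 50
    turn_angle_deg: int = 90
    wait_s: float = 0.2


def plan_edge_escape(left_edge: bool, right_edge: bool, cfg: EdgeEscapeConfig):
    """Return (action, value) steps that move the robot away from a detected edge.

    An empty list means no edge was seen and the robot can keep driving.
    Angles are positive for counter-clockwise (left) turns, by assumption.
    """
    if not (left_edge or right_edge):
        return []
    # Turn away from the side that saw the drop; if both sides did, turn right.
    angle = -cfg.turn_angle_deg if left_edge else cfg.turn_angle_deg
    return [
        ("stop", 0),
        ("backward", cfg.backup_mm),
        ("wait", cfg.wait_s),      # short pause so the base settles before turning
        ("turn", angle),
        ("wait", cfg.wait_s),
    ]
```

For example, `plan_edge_escape(True, False, EdgeEscapeConfig(turn_angle_deg=60))` backs up and then plans a 60° clockwise turn (`("turn", -60)`).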

The next step is to integrate the robot’s movement system with serial commands from the Raspberry Pi.
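A simple line-based text protocol is one way to carry movement commands from the Raspberry Pi to the robot base. The sketch below assumes such a protocol. The command set, the `NAME VALUE\n` format, and the helpers `encode_command`, `decode_command`, and `read_commands` are hypothetical, not our agreed protocol. On the real hardware the byte stream would come from a serial port (for example a pySerial `Serial` object, which also provides `readline`); here `io.BytesIO` stands in for the link.

```python
import io

# Hypothetical command set; the real one is not specified in this report.
VALID_COMMANDS = {"FWD", "BACK", "LEFT", "RIGHT", "STOP"}


def encode_command(name: str, value: int = 0) -> bytes:
    """Encode one movement command as an ASCII line, e.g. b"FWD 200\\n"."""
    if name not in VALID_COMMANDS:
        raise ValueError(f"unknown command: {name}")
    return f"{name} {value}\n".encode("ascii")


def decode_command(line: bytes):
    """Parse one line back into (name, value); return None if it is malformed."""
    parts = line.decode("ascii", errors="replace").split()
    if len(parts) != 2 or parts[0] not in VALID_COMMANDS:
        return None
    try:
        return parts[0], int(parts[1])
    except ValueError:
        return None


def read_commands(stream):
    """Yield decoded commands from a file-like byte stream, skipping bad lines.

    Stops at the first empty read (EOF here, or a read timeout on a real
    serial port opened with a timeout).
    """
    for line in iter(stream.readline, b""):
        cmd = decode_command(line)
        if cmd is not None:
            yield cmd


# Usage: simulate the serial link with an in-memory buffer.
link = io.BytesIO()
link.write(encode_command("FWD", 200))
link.write(encode_command("LEFT", 90))
link.write(b"garbage\n")                 # corrupted line is ignored
link.write(encode_command("STOP"))
link.seek(0)
received = list(read_commands(link))     # [('FWD', 200), ('LEFT', 90), ('STOP', 0)]
```

Newline-terminated text keeps the messages easy to read in a serial monitor while debugging, and skipping malformed lines means one corrupted byte does not desynchronize the whole stream.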

Olivia Xu

- During integration I found it necessary to combine my individual .py files for each screen function (countdown.py, loading.py, blinking.py, etc.) into a single file, because each file’s own while loop was blocking everything else, and I don’t want to rely on a global “interrupt” variable. This required major code edits to merge everything into one large nested while loop. I also spent more effort teaching myself Python from scratch.

- Completed more cut-scenes, loading scenes, and facial expressions, and fixed previous ones, to get better fake GIFs

made a time display

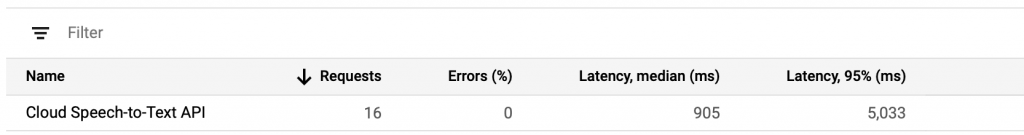

made a time display - Working on transition design from one display image to another, knowing the specific workings, responses, response times of the mic, speaker, API, etc
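An alternative to one large nested while loop, named here plainly as a different technique, is to write each screen function as a Python generator that yields one frame at a time, and let a single main loop advance whichever screen is active. Switching screens then just means replacing the active generator, so no global “interrupt” flag is needed. The screen names mirror the files mentioned above, but their bodies, the `SCREENS` table, and `main_loop` with its `commands` dict (a stand-in for mic, API, or serial events) are hypothetical.

```python
import time


def countdown(seconds):
    """Screen function as a generator: one frame per step, then it finishes."""
    for n in range(seconds, 0, -1):
        yield f"countdown {n}"
    yield "smile!"


def blinking():
    """Idle animation: endless, but only advances when the main loop asks."""
    while True:
        yield "eyes open"
        yield "eyes closed"


# Hypothetical mapping from command names to fresh screen generators.
SCREENS = {"countdown": lambda: countdown(3), "blinking": blinking}


def main_loop(commands, draw, ticks, tick_s=0.0):
    """Single loop: check for a new command, then draw one frame of the active screen.

    commands: dict of tick number -> screen name (stand-in for real events).
    """
    active = SCREENS["blinking"]()
    for tick in range(ticks):
        name = commands.get(tick)
        if name in SCREENS:
            active = SCREENS[name]()       # switching screens = swapping generators
        frame = next(active, None)
        if frame is None:                  # screen finished: fall back to idle
            active = SCREENS["blinking"]()
            frame = next(active)
        draw(frame)
        time.sleep(tick_s)                 # frame pacing; 0 in this demo


frames = []
main_loop({2: "countdown"}, frames.append, ticks=8)
```

Because every screen yields control back after each frame, the main loop stays responsive: a new command takes effect on the very next tick, even in the middle of a countdown.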

Team Status

We are currently in the first integration stage, aiming for the demo to run smoothly. However, many functions, such as motion control and hot word detection, still need polishing.

Our camera died suddenly during tests on Saturday, so we need to purchase another one next week. We will borrow one from a friend for Monday’s demo.