Haohan Shi

Our Pololu robot kit and Raspberry Pi arrived this Tuesday. My main accomplishments this week:

- Got familiar with the Pololu Arduino library and worked through its sample code for reading the proximity sensors, printing to the onboard LCD screen, and controlling the motors.

- Set up serial communication between the robot kit and the Raspberry Pi. I wrote sample code for basic serial communication: the RPi runs a Python script that reads user input and sends it as a string to the Pololu, and the Pololu replies with the instruction it received and “executes” it. See the video below for more information. This is only a test of successful communication between the two; the same method will be used in the actual control system.

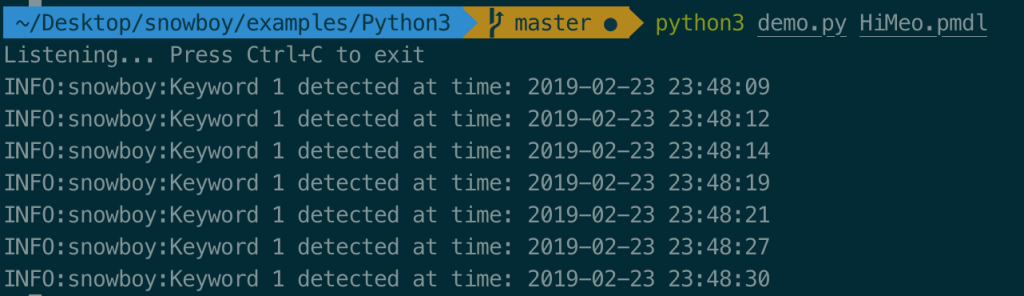
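For reference, the RPi side of such an exchange could look roughly like the sketch below. It is a minimal illustration rather than the exact test script: it assumes the `pyserial` package, a newline-terminated ASCII text protocol, and a hypothetical port name `/dev/ttyACM0` at 9600 baud. The helper names (`encode_command`, `decode_reply`, `send_command`) are made up for this example.

```python
def encode_command(text):
    """Turn user input into one newline-terminated ASCII frame."""
    return (text.strip() + "\n").encode("ascii")


def decode_reply(raw):
    """Decode one reply line from the robot, dropping the line ending."""
    return raw.decode("ascii", errors="replace").strip()


def send_command(port, text):
    """Write one command to an open port-like object and return the reply line."""
    port.write(encode_command(text))
    return decode_reply(port.readline())


def main(device="/dev/ttyACM0", baud=9600):
    # Assumed port name and baud rate; adjust to the actual setup.
    # pyserial is imported here so the helpers above work without it installed.
    import serial

    with serial.Serial(device, baud, timeout=1) as port:
        while True:
            text = input("command> ")
            if text in ("q", "quit"):
                break
            print("robot replied:", send_command(port, text))
```

Calling `main()` on the RPi starts the prompt loop. Keeping the encoding and decoding in small functions means the message format can be checked without the robot attached.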

- Ordered the USB microphone and speaker so that I can start working on the actual voice control part next week. While waiting for them to arrive, I researched hotword detection on the RPi and found that Snowboy is currently a very good option. It is very lightweight and does not require an Internet connection for detection. It takes only about 5% of CPU when running on our RPi, which leaves plenty of CPU resources for the camera image processing. I trained a personal model on “Hi Meo”, tested it locally on my MacBook, and achieved a ~70% (7 out of 10) detection rate.

My goal for next week is to implement hotword detection on the RPi once the microphone and speaker arrive, and to start designing the block diagram of our complete system for the design review. The diagram will cover what the RPi and the Pololu should do for different tasks, when and how communication between the two occurs, and the requirements for each part of the system.

Olivia Xu

- Got to know the example features that come with Pololu’s Zumo robot and its custom library. Checked for specific object detection sensors: it can detect the proximity of objects to its front, left, and right. There are visible IR sensors in the front, and the robot effectively turns to face and follow its “opponent” (a person in this case) as the opponent moves.

- Worked on obstacle and edge avoidance. We need to decide how to get around objects and whether an A* algorithm is necessary. We will also need a camera and image recognition for more precise proximity detection with multiple obstacles.
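To make the A* question more concrete, here is a minimal sketch of A* on a small occupancy grid. It assumes the area around the robot can be modeled as a 4-connected grid where `1` marks an obstacle, with unit step cost and Manhattan distance as the heuristic. The grid representation and the function name `astar` are illustrative assumptions, not something the Zumo library provides.

```python
import heapq


def astar(grid, start, goal):
    """Return a shortest list of (row, col) cells from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    best_g = {start: 0}
    came_from = {}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk parent links back to the start to rebuild the path.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == 1:
                continue  # off the grid or blocked by an obstacle
            new_g = g + 1
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None
```

Whether this is worth running depends on how many obstacles we expect. With a single obstacle, a simple turn-and-go-around rule may be enough, while a grid search like this only pays off once the robot has a map with several obstacles.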

Yanying Zhu

- Got familiar with the Zumo Arduino library. Our team has implemented the Raspberry Pi to Zumo robot serial communication script, and it is working effectively. I studied the sample code (border detection) to better understand how the line sensors and proximity sensors work. The library contains many detailed sub-functions, some of which I don’t fully understand.

- The next step is to implement the robot’s movement system using the library functions. The motor library is relatively simple and straightforward, and we can already drive at different speeds and turn around. The hard part will be detecting obstacles and updating the path plan in real time.

Team Status

We are currently on schedule: this week we started working on the actual implementation of movement control, such as edge and obstacle detection, and hotword detection, the basic functionality of our control system, is ready to test on the RPi.

Our main concern this week is the computation power of the RPi, especially for graphics. After some research on facial detection and video recording examples on the RPi, we noticed that the video frame rate can be as low as 7-8 FPS when OpenCV and facial recognition are running. Since the RPi also has many other functions and components to take care of, we need to pay attention to the CPU usage of each component.
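One way to keep an eye on this is to measure the achieved frame rate of the processing loop directly. The sketch below times an arbitrary per-frame function with `time.perf_counter`. The `process_frame` callable stands in for whatever OpenCV-based detection we end up running and is purely a placeholder, as is the function name `measure_fps`.

```python
import time


def measure_fps(process_frame, frames, clock=time.perf_counter):
    """Run process_frame on each frame and return average frames per second."""
    count = 0
    start = clock()
    for frame in frames:
        process_frame(frame)
        count += 1
    elapsed = clock() - start
    if count == 0 or elapsed <= 0:
        return 0.0  # nothing processed, or the clock did not advance
    return count / elapsed
```

Running this once with detection enabled and once with a no-op `process_frame` would show how much of the frame budget the detection step itself consumes.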