Haohan Shi

This week I worked on implementing facial recognition on our robot. The complete procedure consists of four parts: facial detection, data gathering, training, and prediction.

The first step is to use OpenCV to capture the camera image and find the faces that will be used for recognition. I used OpenCV’s built-in Haar Cascade Classifier for facial detection:

import cv2

cascadePath = "haarcascade_frontalface_default.xml"

faceCascade = cv2.CascadeClassifier(cascadePath)

The pre-trained classifier works very well as long as the room is bright enough for the faces to show up clearly in the images.
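A minimal sketch of how the classifier can be applied to a frame, not the exact script used on the robot. The helper names `detect_faces` and `crop_face` and the `scale_factor` / `min_neighbors` values are assumptions chosen for illustration; `detectMultiScale` is the standard OpenCV call on a `CascadeClassifier`.

```python
def detect_faces(gray_image, face_cascade, scale_factor=1.1, min_neighbors=5):
    """Return detected face boxes as (x, y, w, h) tuples, largest first.

    face_cascade is a cv2.CascadeClassifier; the parameter values are
    illustrative defaults, not tuned settings from the project.
    """
    boxes = face_cascade.detectMultiScale(
        gray_image, scaleFactor=scale_factor, minNeighbors=min_neighbors
    )
    # detectMultiScale yields one (x, y, w, h) row per face, or nothing at all.
    faces = [tuple(int(v) for v in box) for box in boxes]
    return sorted(faces, key=lambda b: b[2] * b[3], reverse=True)


def crop_face(gray_image, box):
    """Keep only the face region of a NumPy image as returned by OpenCV."""
    x, y, w, h = box
    return gray_image[y:y + h, x:x + w]


# Usage with a webcam (requires OpenCV):
#   import cv2
#   cascade = cv2.CascadeClassifier("haarcascade_frontalface_default.xml")
#   ok, frame = cv2.VideoCapture(0).read()
#   gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
#   faces = detect_faces(gray, cascade)
```

Sorting by area makes it easy to keep only the closest face when several people are in view.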

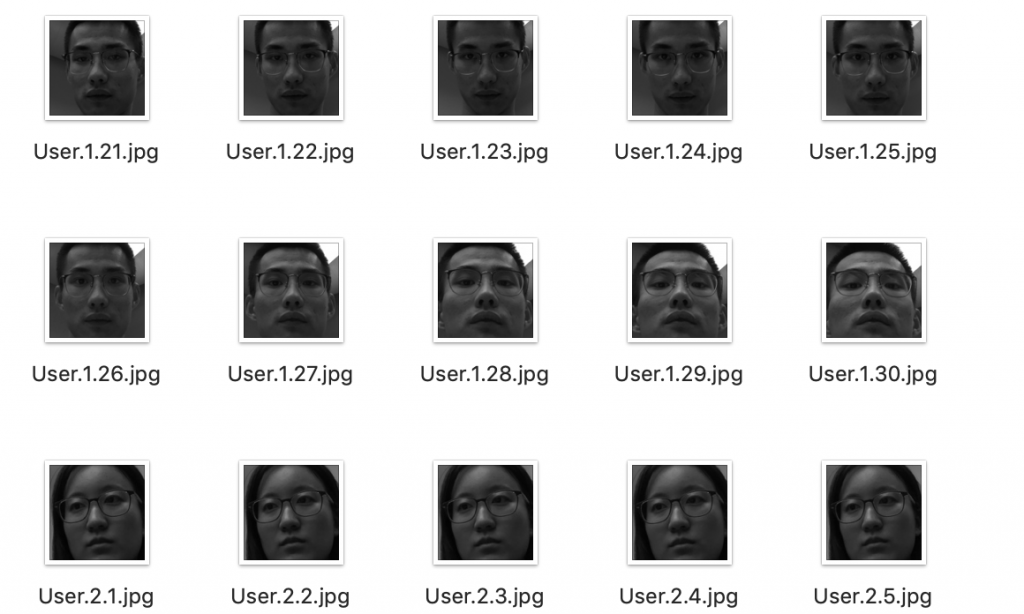

The second step is to gather training data for our prediction model. Building on the previous step, I wrote a script that continuously captures 30 images and crops out the non-face part of each one. The data set looks like this:

The third step is to train the model on our dataset so that it learns our faces. For training, I am using a simple and effective algorithm, LBPH (Local Binary Patterns Histograms), which is also included in OpenCV.
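The training step could look roughly like the sketch below. It assumes the `opencv-contrib-python` package, which provides the `cv2.face` module, and it assumes the samples are already cropped grayscale face images paired with a person's name. The function names and the `trainer.yml` file name are hypothetical.

```python
def assign_label_ids(names):
    """Map each distinct person name to an integer label (LBPH needs ints)."""
    return {name: idx for idx, name in enumerate(sorted(set(names)))}


def train_lbph(samples, model_path="trainer.yml"):
    """Train an LBPH recognizer on a list of (name, gray_face_image) pairs.

    Saves the trained model to model_path (a hypothetical file name) and
    returns the name -> label mapping needed to decode predictions later.
    """
    import cv2  # imported here so assign_label_ids works without OpenCV
    import numpy as np

    label_ids = assign_label_ids(name for name, _ in samples)
    faces = [face for _, face in samples]
    labels = np.array([label_ids[name] for name, _ in samples], dtype=np.int32)

    recognizer = cv2.face.LBPHFaceRecognizer_create()
    recognizer.train(faces, labels)
    recognizer.write(model_path)
    return label_ids
```

The returned mapping has to be kept alongside the model file, because the recognizer itself only stores the integer labels.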

The last step is to use the same LBPH recognizer, loaded with our training result, to predict who the faces in the image belong to. My algorithm runs prediction on 30 consecutive frames; if more than 15 of those frames contain someone’s face with a confidence above 15, that person is considered to be in front of the camera. The whole prediction process takes about 3-4 seconds, and the result is stable and meets our expectations.
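The frame-voting rule described above can be sketched as follows. The function name and input format are assumptions for illustration. Note that OpenCV's LBPH `predict` returns a distance where lower means a closer match, so this sketch assumes each per-frame score has already been converted to a higher-is-better confidence, as the >15 threshold suggests.

```python
from collections import Counter


def identify_person(frame_results, min_confidence=15, min_votes=15):
    """Decide who is in front of the camera from per-frame predictions.

    frame_results holds one entry per frame: (name, confidence) when a face
    was recognized, or None when no face was found. A person is accepted only
    if more than min_votes frames name them with confidence > min_confidence.
    """
    votes = Counter()
    for result in frame_results:
        if result is None:
            continue
        name, confidence = result
        if confidence > min_confidence:
            votes[name] += 1

    if not votes:
        return None
    name, count = votes.most_common(1)[0]
    return name if count > min_votes else None
```

Requiring a majority of the 30 frames keeps a single misclassified frame from triggering a false match.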

Yanying Zhu

This week I worked on finalizing the following state and the moving state. Because of the limitations of the sensor on the robot, it cannot follow very precisely. What it can do is roughly follow a very close object in front of it (the object cannot move away suddenly), turning left or right at a set speed while avoiding falling off the table. I have implemented these two states and am waiting to integrate them with voice commands over serial.

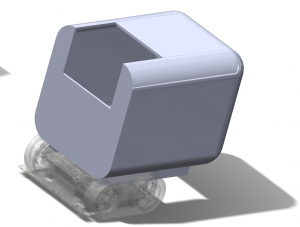

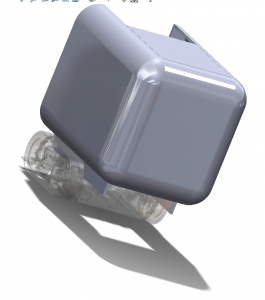

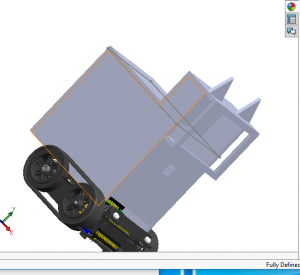

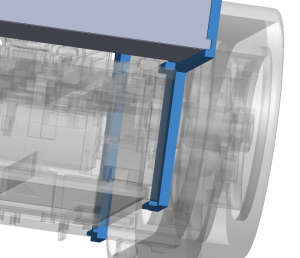

We also integrated Meobot’s movement with face recognition. Meo slowly turns around to search for the person calling his name and then stops. There are two major issues that we are currently solving. One is the latency between when facial recognition finishes and when Meo actually stops. The other is that we are trying to put all the devices (Raspberry Pi, battery, microphone, etc.) on Meo’s back, which greatly affects the motor speed that I previously set.

Olivia Xu

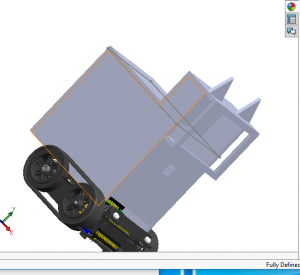

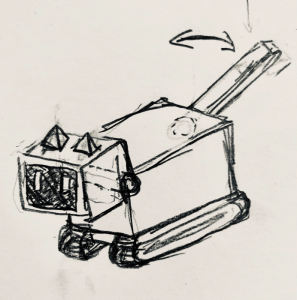

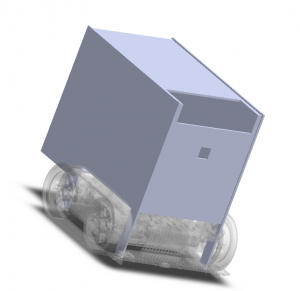

Drew more images to show dynamic weather. Not sure if this will slow down performance too much. Tried 3D printing multiple times, but was not fully prepared for unfortunate circumstances like the shop failing or losing parts without notification. Had to redesign a laser-cut model for the in-lab demo.

Team Status

We are almost done with the coding part. We are still waiting for our cases to be printed, since the makerspace staff lost a few of the parts that we had previously submitted for printing. The next step, after we assemble everything, is to further calibrate parameters such as hotword detection sensitivity, input gain, and movement speed. Some procedure refinement is also needed, such as directly asking for user input again when a command can’t be understood, so that the user doesn’t need to say the hotword again and again for the same command.
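One possible shape for the re-prompting refinement is sketched below. Everything here is hypothetical: `listen`, `parse`, and `prompt` stand in for whatever speech capture, command parsing, and feedback functions the robot ends up using, and the retry limit is an arbitrary choice.

```python
def get_command(listen, parse, prompt, max_attempts=3):
    """Ask for a command repeatedly without requiring the hotword again.

    listen() returns the recognized text, parse(text) returns a command or
    None when the text is not understood, and prompt(message) tells the user
    to try again. All three are placeholders supplied by the caller.
    """
    for attempt in range(max_attempts):
        command = parse(listen())
        if command is not None:
            return command
        # Only ask again if another attempt is still available.
        if attempt < max_attempts - 1:
            prompt("Sorry, I didn't catch that. Please repeat the command.")
    return None
```

Returning `None` after the last attempt lets the caller fall back to waiting for the hotword.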

made a time display
