Haohan Shi

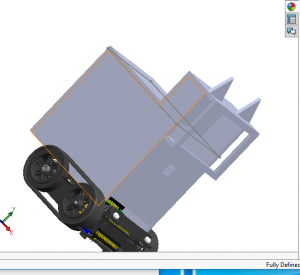

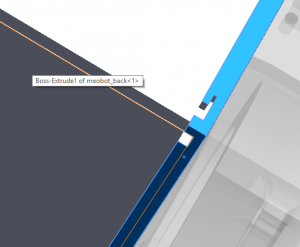

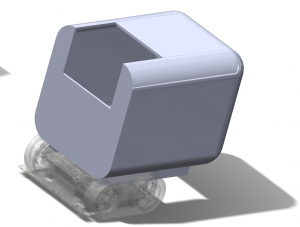

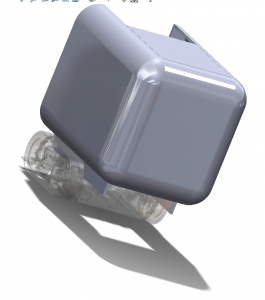

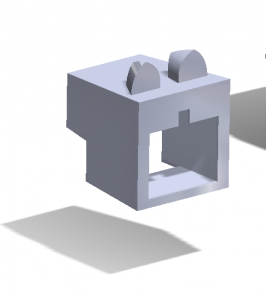

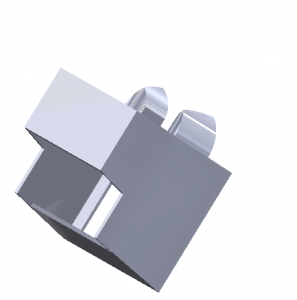

This week I mainly worked on improving and assembling our case. The initial design of the outer case had no mounting mechanism and relied only on mechanical hooks. It turned out to be fairly unstable because the 3D printing isn’t accurate enough, and laser-cut materials such as wood or acrylic sheets are too heavy and thick. In addition, laser cutting cannot easily produce curved surfaces, so the initial design didn’t look very nice once assembled.

For our second version, we added mounting holes that match the screw holes on the robot platform and used a glue gun to seal all the joints.

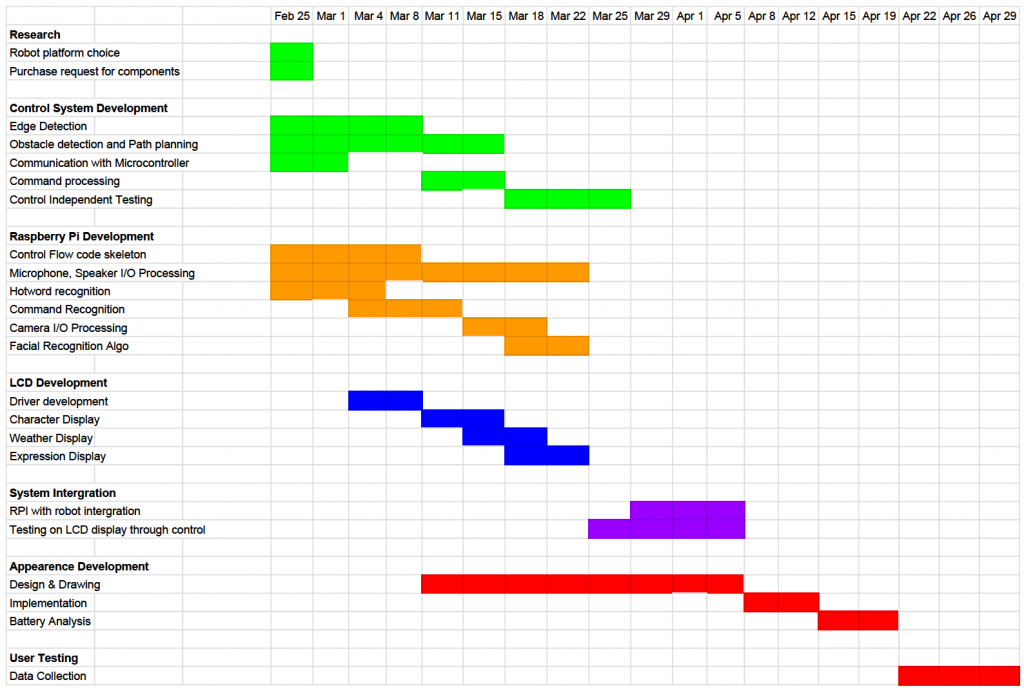

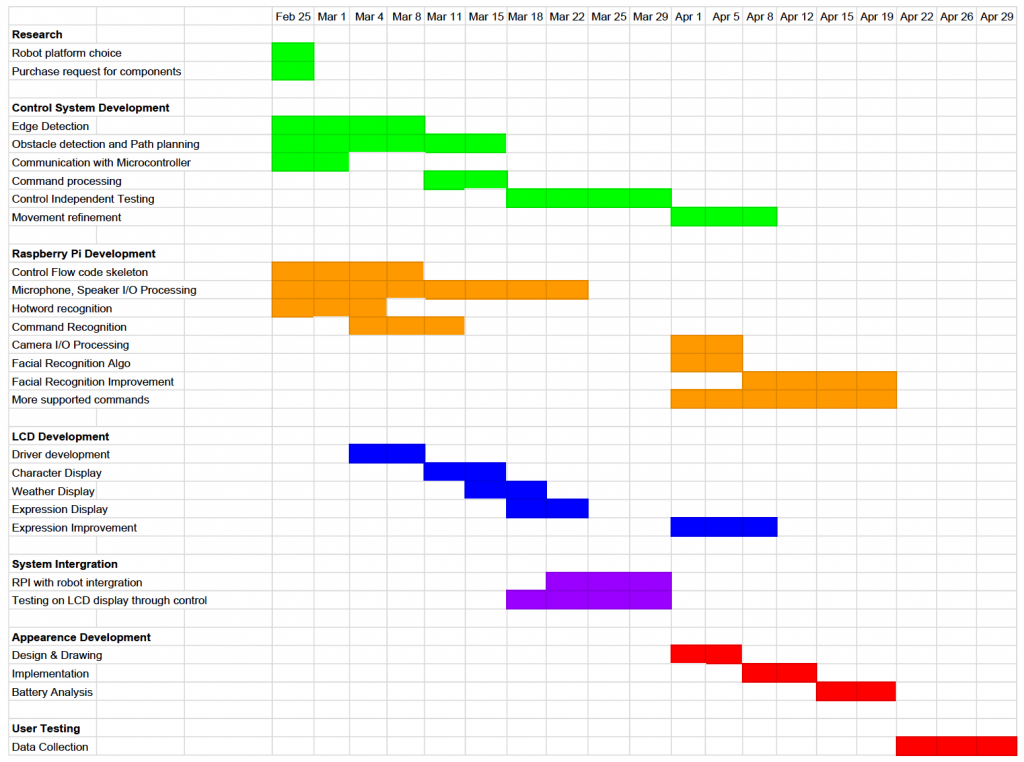

Then I conducted several tests with my teammates to find out which parameters work best for our hotword detection.

| Sensitivity | Gain | Distance (from mic) | Noise | False Alarm time | Hotword Success | Speech-Recognition Success |
| --- | --- | --- | --- | --- | --- | --- |
| 0.4 | 1 | 5 | None | 0 | 0% | – |
| 0.4 | 1 | 10 | None | 1 | 66.7% | 100% |
| 0.4 | 1 | 15 | None | 0 | 80% | 62.5% |
| 0.4 | 1 | 25 | None | 0 | 0% | – |
| 0.5 | 1 | 5 | None | 4 | 50% | 50% |
| 0.5 | 1 | 15 | None | 3 | 10% | 100% |
| 0.5 | 1 | 25 | None | 0 | 10% | 100% |
| 0.4 | 3 | 5, 15 | None | 0 | 0% | – |
| 0.4 | 3 | 25 | None | 0 | 71.4% | 70% |
| 0.4 | 3 | 35 | None | 0 | 90% | 66.7% |
| 0.4 | 3 | 45 | None | 0 | 0% | – |

| Sensitivity | Gain | Distance (from mic) | Noise | False Alarm time | Hotword Success | Speech-Recognition Success |
| --- | --- | --- | --- | --- | --- | --- |
| 0.4 | 5 | 5, 15 | None | 1 | 0% | – |
| 0.4 | 5 | 25 | None | 1 | 29.4% | 71.4% |
| 0.4 | 3 | 5, 15 | Noisy | 0 | 0% | – |
| 0.4 | 3 | 25 | Noisy | 0 | 66.7% | 58.4% |
| 0.4 | 3 | 35 | Noisy | 0 | 38.5% | 70% |
| 0.4 | 3 | 5, 15, 35 | Very Noisy | 0 | 0% | 0% |
| 0.4 | 3 | 25 | Very Noisy | 0 | 9.1% | 100% |

The results provide a lot of insights that we didn’t think of initially. A sensitivity of 0.4 and a gain of 3 are currently the best parameters, because the ambient noise may be very loud and we are ~25 inches away from the robot during the demo. However, more data needs to be collected in noisy and very noisy environments.
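As a rough illustration of how the parameter choice can be read off the data, the sketch below copies only the distance-25 rows from the tables above and picks the row with the highest hotword success for a given noise level, breaking ties by fewer false alarms. The `Trial` class, the `best_setting` helper, and the ranking rule are assumptions made for this example; they are not the script we actually used.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Trial:
    """One table row (hypothetical structure for this sketch)."""
    sensitivity: float
    gain: int
    distance: int
    noise: str
    false_alarms: int
    hotword_pct: float
    speech_pct: Optional[float]  # None where the table shows a dash


# Rows at distance 25, copied from the tables above.
TRIALS_AT_25 = [
    Trial(0.4, 1, 25, "None", 0, 0.0, None),
    Trial(0.5, 1, 25, "None", 0, 10.0, 100.0),
    Trial(0.4, 3, 25, "None", 0, 71.4, 70.0),
    Trial(0.4, 5, 25, "None", 1, 29.4, 71.4),
    Trial(0.4, 3, 25, "Noisy", 0, 66.7, 58.4),
    Trial(0.4, 3, 25, "Very Noisy", 0, 9.1, 100.0),
]


def best_setting(trials: List[Trial], noise: str) -> Optional[Trial]:
    """Return the trial with the highest hotword success for a noise level.

    Assumed ranking rule: hotword success first, then fewer false alarms.
    """
    candidates = [t for t in trials if t.noise == noise]
    if not candidates:
        return None
    return max(candidates, key=lambda t: (t.hotword_pct, -t.false_alarms))


best = best_setting(TRIALS_AT_25, "None")
print(best.sensitivity, best.gain)  # prints: 0.4 3
```

Only sensitivity 0.4 with gain 3 was tested in the noisy conditions, so this comparison is only meaningful for the quiet rows until more data is collected.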

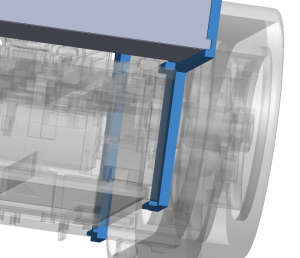

Olivia Xu

I designed a new case for the robot using all the information from last week’s test runs. We needed a good way to mount things and more space, so I made a bottom piece with tabs that have holes for screwing it onto the robot, which also allows a much larger design above. Surprisingly, weight isn’t much of a problem when the speed is turned up.

Notable constraints:

- The camera angle has to be adjusted for different table heights, so the head has to stay movable since the demos take place on different tables.

- 3D printing is expensive, it tends to need a lot of support material, and the support material often fails to print well. So I sliced the model I made into pieces that can be printed with close to zero support material.

- 3D printers tend to break too quickly for the staff to service all of them, and a lot of people are trying to use them during the final weeks.

- The weight distribution needs to be acceptable for the rear to protrude this much. I will place the portable battery relatively close to the front, and the head has torque.

The case still needs paint.

Yanying Zhu

This week I worked on conducting structured tests with the team and preparing for the final presentation. For the movement system, the test for the obstacle avoidance algorithm and edge detection is basically to let the robot run on the table and record the number of failures. The goal for the movement system is a 100% success rate at avoiding obstacles and not falling off the table, and I think the test results show that we are pretty close to that goal. For speech recognition and hotword detection, we tested different sets of parameters (distance from the mic, gain, sensitivity level, and ambient noise) to figure out the best set to use and to document data for future analysis. We will test facial recognition next week. Facial recognition has hardly any parameters to play with, because altering the frame rate would greatly affect processing time, so we might just record the success rate and see how it performs. Finally, after we glue the case and every hardware component inside it, we will probably run demo tests of the entire process.
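The success-rate metric for the movement tests can be written down in a few lines. This is a minimal sketch: the function name `success_rate` and the run counts in the example are hypothetical and are not our recorded results.

```python
def success_rate(runs: int, failures: int) -> float:
    """Percentage of runs that finished without a failure.

    A failure here means hitting an obstacle or falling off the table.
    """
    if runs <= 0:
        raise ValueError("runs must be positive")
    if not 0 <= failures <= runs:
        raise ValueError("failures must be between 0 and runs")
    return 100.0 * (runs - failures) / runs


# Hypothetical example: 20 table runs with 1 recorded failure.
print(success_rate(20, 1))  # prints: 95.0
```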

Team Status

We are almost done with our project. The next step is to make sure everything is configured and runs smoothly with the case and parts assembled. In addition, we need to run more tests to check the success rate of each function, on both the software side and the hardware side.