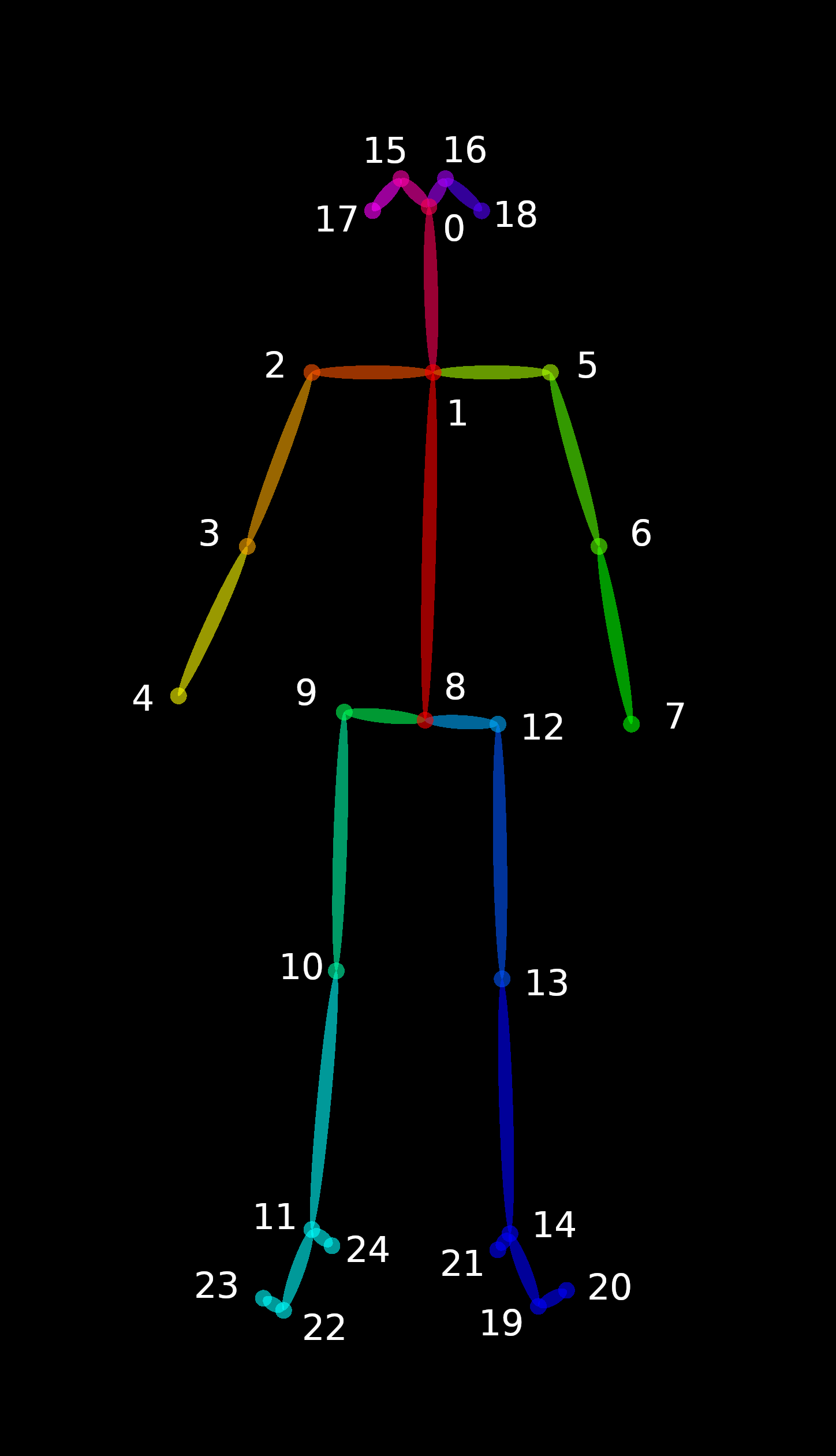

My work this week mainly focused on comparison algorithms. Using the JSON data that Eric generated with OpenPose, I wrote a Python script that compares two different postures. At first there was some confusion because OpenPose produced more outputs than I had expected, but this was resolved after talking with Eric and reading the OpenPose documentation. I then narrowed down the keypoints, removing points that represent positions such as the eyes and ears, to improve the accuracy of determining overall body posture.

For efficiency, only keypoints 0 to 14 are currently used to judge posture. Once the implementation is complete, I'll test how additional keypoints (such as those on the feet) affect the correctness of the posture judgment.
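A minimal sketch of the loading and reduction step, assuming the files follow OpenPose's standard JSON output (a `people` list whose entries hold a flat `pose_keypoints_2d` array of x, y, confidence triples) and the BODY_25 model, where keypoints 0 to 14 cover the nose, neck, arms, hips and legs, 15 to 18 are the eyes and ears, and 19 to 24 are the feet. The helper names `load_body_keypoints` and `load_pose_folder`, and the choice to read only the first detected person, are hypothetical and not taken from my actual script.

```python
import json
from pathlib import Path

import numpy as np

# Assumption: BODY_25 layout, where indices 0-14 are nose, neck, arms, hips and legs.
NUM_BODY_KEYPOINTS = 15


def load_body_keypoints(path, num_keypoints=NUM_BODY_KEYPOINTS):
    """Return a (num_keypoints, 2) array of x, y coordinates for the first person.

    Hypothetical helper: reads one OpenPose JSON file, reshapes the flat
    pose_keypoints_2d list into (x, y, confidence) rows, and drops every
    keypoint from index num_keypoints onward (eyes, ears and feet in BODY_25).
    """
    with open(path, "r", encoding="utf-8") as f:
        data = json.load(f)
    if not data.get("people"):
        raise ValueError(f"no person detected in {path}")
    flat = data["people"][0]["pose_keypoints_2d"]
    keypoints = np.asarray(flat, dtype=float).reshape(-1, 3)
    # Keep only x and y for the retained keypoints; the confidence column is discarded.
    return keypoints[:num_keypoints, :2]


def load_pose_folder(folder):
    """Load every *.json file in a local folder, keyed by file name (hypothetical helper)."""
    return {p.name: load_body_keypoints(p) for p in sorted(Path(folder).glob("*.json"))}
```

Slicing by index keeps the reduction in one place: changing `num_keypoints` to 25 would bring the foot keypoints back for the planned tests.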

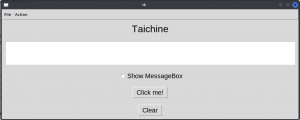

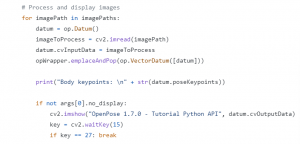

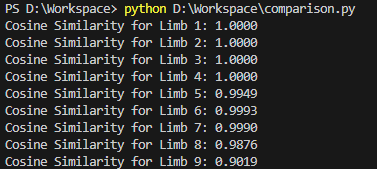

Using the numpy and json packages, my script pulls files from a designated local folder and compares the sets of data they contain. I added a few processing steps: besides reducing the keypoints as described above, the script forms vectors from the keypoint pairs that define each limb. These limb vectors are then passed into a cosine similarity comparison. The algorithm responds quickly, and its results are easy to understand. How we will display the results, and the mechanism for doing so, are still to be determined, so below I show raw output from my algorithm for one example comparison: two trials of the same image, where I made minor changes to the output position of the upper limb in the second image.
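The limb-vector and cosine similarity steps can be sketched as follows. This is an illustration under assumptions, not my exact script: the `LIMB_PAIRS` list is taken from the BODY_25 skeleton restricted to keypoints 0 to 14, the input is assumed to be a (15, 2) array of x, y coordinates, and averaging the per-limb scores into one number is just one possible way to summarize them. For two limb vectors $\mathbf{a}$ and $\mathbf{b}$ the score is $\cos\theta = \frac{\mathbf{a}\cdot\mathbf{b}}{\lVert\mathbf{a}\rVert\,\lVert\mathbf{b}\rVert}$.

```python
import numpy as np

# Assumption: limb pairs follow the BODY_25 skeleton restricted to keypoints 0-14.
LIMB_PAIRS = [
    (1, 0),                        # neck -> nose
    (1, 2), (2, 3), (3, 4),        # right shoulder, upper arm, forearm
    (1, 5), (5, 6), (6, 7),        # left shoulder, upper arm, forearm
    (1, 8),                        # neck -> mid hip (torso)
    (8, 9), (9, 10), (10, 11),     # right hip, thigh, shin
    (8, 12), (12, 13), (13, 14),   # left hip, thigh, shin
]


def limb_vectors(keypoints, pairs=LIMB_PAIRS):
    """Turn an (N, 2) keypoint array into a (len(pairs), 2) array of limb vectors."""
    keypoints = np.asarray(keypoints, dtype=float)
    starts = np.array([a for a, _ in pairs])
    ends = np.array([b for _, b in pairs])
    return keypoints[ends] - keypoints[starts]


def limb_cosine_similarity(pose_a, pose_b, eps=1e-9):
    """Cosine similarity per limb; NaN where either limb vector is (near) zero-length."""
    va, vb = limb_vectors(pose_a), limb_vectors(pose_b)
    dots = np.sum(va * vb, axis=1)
    norms = np.linalg.norm(va, axis=1) * np.linalg.norm(vb, axis=1)
    sims = np.full(len(dots), np.nan)
    valid = norms > eps
    sims[valid] = dots[valid] / norms[valid]
    return sims


def posture_similarity(pose_a, pose_b):
    """Average the cosine similarity over all limbs that could be measured."""
    return float(np.nanmean(limb_cosine_similarity(pose_a, pose_b)))
```

Because only limb directions enter the score, shifting or uniformly scaling a whole pose leaves every limb at 1.0, while a moved upper limb lowers the scores of just the limbs touching the moved keypoint. Note that OpenPose reports undetected keypoints as 0, which this sketch does not filter out.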

The next steps are to connect the comparison algorithm to the TTS engine so that it can give vocal instructions, and then to move on to the integration steps.