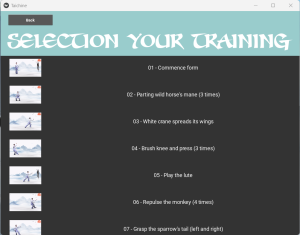

I made substantial progress on the frontend this week. First, the dynamic insertion of widgets into the selection page is finally up and working. I designed a custom widget that holds both the preview and the name of a posture, and all of these widgets are now added to the menu with Python code instead of being hard-coded in the kv language. The result is shown in the first image of this post.

Clicking the row that previews a pose brings you to the training page and starts training on that pose. In my opinion, this design works well both aesthetically and intuitively.
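To illustrate building the menu from data rather than hard-coding each row in kv, here is a minimal, framework-agnostic sketch in plain Python. It deliberately does not use Kivy's API: `PostureRow` and `build_selection_rows` are hypothetical names, `PostureRow` only stands in for the custom row widget, and the preview paths are made up. In the actual Kivy app, each row would be a widget added to the menu layout with `add_widget`, and a click on it would switch to the training page.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class PostureRow:
    """Hypothetical stand-in for the custom row widget: a preview image plus a posture name."""
    name: str
    preview_path: str
    on_select: Callable[[str], None]

    def press(self) -> None:
        # Simulates clicking the row: report which posture should be trained.
        self.on_select(self.name)


def build_selection_rows(
    postures: List[Tuple[str, str]], on_select: Callable[[str], None]
) -> List[PostureRow]:
    """Create one row per (name, preview_path) pair instead of hard-coding rows in kv."""
    return [PostureRow(name, path, on_select) for name, path in postures]


# Example data: the posture names come from the 24-form, the paths are made up.
POSTURES = [
    ("Commencing Form", "previews/commencing_form.png"),
    ("White Crane Spreads Its Wings", "previews/white_crane.png"),
]
```

With this shape, adding another posture to the menu only means appending one (name, preview) pair to the list, and every row shares the same click handler.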

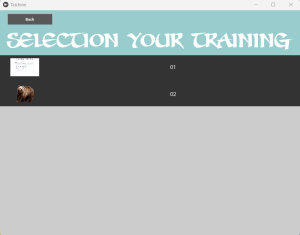

The other thing I implemented is the mode page. Since we support both the built-in postures of the 24-form Tai Chi and custom postures from users, I created the mode page, where the user can choose which set of postures to practice with.

Clicking the upper block brings the user to the selection page shown in the first image of this post. Clicking the lower block brings the user to a separate page instead.

That page displays the custom user poses imported through Jerry’s pipeline. Implementing this new page took a while, since much of the page connection logic had to be changed.
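The page connection logic can be pictured as a small router with a history stack. The sketch below is a hypothetical model, not the app's Kivy screen code: the page names (`mode`, `builtin_selection`, `custom_selection`, `training`) and the `Navigator` class are my own assumptions. The stack is what lets the training page return to whichever selection page it was opened from.

```python
from typing import Dict, List, Optional


class Navigator:
    """Hypothetical page router: mode page -> one of two selection pages -> training page."""

    # Each block on the mode page leads to its own selection page.
    MODE_PAGES = {"builtin": "builtin_selection", "custom": "custom_selection"}

    def __init__(self, builtin: List[str], custom: List[str]) -> None:
        self.poses: Dict[str, List[str]] = {
            "builtin_selection": list(builtin),
            "custom_selection": list(custom),
        }
        self.history: List[str] = ["mode"]  # stack of visited pages
        self.pose: Optional[str] = None

    @property
    def current(self) -> str:
        return self.history[-1]

    def choose_mode(self, mode: str) -> List[str]:
        """Open the selection page for `mode` and return the postures it lists."""
        if self.current != "mode" or mode not in self.MODE_PAGES:
            raise ValueError(f"cannot choose mode {mode!r} from page {self.current!r}")
        page = self.MODE_PAGES[mode]
        self.history.append(page)
        return list(self.poses[page])

    def select_pose(self, pose: str) -> None:
        """Start training on `pose`; only valid from the selection page that lists it."""
        if pose not in self.poses.get(self.current, []):
            raise ValueError(f"pose {pose!r} is not listed on page {self.current!r}")
        self.pose = pose
        self.history.append("training")

    def back(self) -> str:
        """Return to the previous page, so training goes back to the list it came from."""
        if len(self.history) > 1:
            self.history.pop()
        return self.current
```

Keeping both selection pages in one table means the training page does not need separate "back" logic for built-in and custom poses.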

I also started the frontend-backend integration with Eric, and we have agreed on the variables and features that need to be introduced into the frontend. This work will continue into next week and will be my main focus on the project.
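As a rough illustration of what such an agreement can look like in code, here is a hypothetical message schema passed from the backend to the frontend as JSON. The class name `FeedbackFrame` and its fields (`pose_name`, `fps`, `angle_diffs_deg`, `instruction`) are assumptions for illustration, loosely based on the quantities in the design requirements, not the variables we actually agreed on; JSON is likewise just one possible transport format.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict


@dataclass
class FeedbackFrame:
    """Hypothetical per-frame message from the backend to the frontend."""
    pose_name: str
    fps: float
    angle_diffs_deg: Dict[str, float] = field(default_factory=dict)  # joint -> degrees
    instruction: str = ""  # text of the verbal instruction, if any

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "FeedbackFrame":
        # Unknown or misspelled keys raise TypeError in the dataclass constructor.
        return cls(**json.loads(text))
```

Because `from_json` passes the keys straight to the dataclass constructor, a variable renamed on one side but not the other fails immediately with a `TypeError` instead of silently showing empty data in the UI.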

I believe I’m on schedule, and our team should complete the MVP quite soon. Still, reaching that goal will require a lot of effort.

ABET: For the design requirements, we need to make sure the system meets minimum performance targets for detecting body part angle differences (in degrees), the frame rate (FPS) of the application, the accuracy of the verbal instructions, and the correctness of the user interface. Shiheng Wu and Hongzhe Cheng have tested the backend to make sure the first three design requirements are met. For the correctness of the user interface, Jerry Feng and I checked the connections between the pages and made sure that users can easily navigate through each of them. We also ensured that images users upload through the customization pipeline are displayed correctly on the selection page and can be used as references on the training page. All subsystem tests went well.
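For the body part angle differences in the first design requirement, one common approach is to compute the angle at a joint from three 2D keypoints and compare it with the same angle in the reference pose. The sketch below assumes keypoints are (x, y) tuples keyed by name; the function names, keypoint names, and the 15° tolerance are hypothetical, and this is not necessarily how Shiheng and Hongzhe's backend computes it.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle ABC in degrees (0 to 180), measured at keypoint b."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    angle = abs(math.degrees(math.atan2(v2[1], v2[0]) - math.atan2(v1[1], v1[0])))
    # Fold reflex angles (> 180) back into the 0-180 range.
    return 360.0 - angle if angle > 180.0 else angle


def flag_joints(
    user: Dict[str, Point],
    reference: Dict[str, Point],
    joints: Dict[str, Tuple[str, str, str]],
    tolerance_deg: float = 15.0,
) -> Dict[str, float]:
    """Return {joint: |user angle - reference angle|} for joints outside the tolerance."""
    flagged: Dict[str, float] = {}
    for joint, (a, b, c) in joints.items():
        user_angle = joint_angle(user[a], user[b], user[c])
        ref_angle = joint_angle(reference[a], reference[b], reference[c])
        diff = abs(user_angle - ref_angle)
        if diff > tolerance_deg:
            flagged[joint] = diff
    return flagged
```

For example, with `joints = {"left_elbow": ("left_shoulder", "left_elbow", "left_wrist")}`, a fully straightened arm compared against a reference elbow bent at 90° is flagged with a difference of about 90°.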

We will repeat all of the above tests on the full system once communication between the frontend and the backend is correctly and robustly integrated. We will also test the user requirements, including posture detection accuracy, verbal instruction latency, and accessibility of the system. In particular, we will use the system ourselves and check whether it meets our expectations for functionality and smoothness. Each of us will also invite three acquaintances to try the system and give feedback on all three user requirements. We can then improve the user experience of our system based on our own evaluations and the testers’ suggestions.
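For the verbal instruction latency test, one simple way to collect numbers is to time many repeated runs with `time.perf_counter` and report the median and a high percentile rather than a single measurement. In this sketch, `trigger` is a hypothetical callable standing in for whatever produces one verbal instruction; if speech is played asynchronously, the timer would instead need to stop when the audio actually starts.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def measure_latency_ms(trigger: Callable[[], None], runs: int = 20) -> List[float]:
    """Call `trigger` `runs` times and return each duration in milliseconds."""
    samples: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        trigger()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples_ms: List[float]) -> Dict[str, float]:
    """Median, nearest-rank 95th percentile, and maximum of the samples."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples_ms)
    rank = math.ceil(0.95 * len(ordered))  # 1-based nearest-rank index
    return {
        "median_ms": statistics.median(ordered),
        "p95_ms": ordered[rank - 1],
        "max_ms": ordered[-1],
    }
```

Reporting the 95th percentile alongside the median shows whether occasional slow instructions would be noticeable to testers even when the typical delay is short.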