This week, as a team, we worked on integration. We got the pipeline working for a single camera but still need to test the two-camera pipeline. After running simple tests, we found that our machine learning model needs retraining because it is overly sensitive. Mehar and Aditi met on Saturday to work together on the remaining tasks. We need to complete the two-camera pipeline testing by porting our code to TensorRT. We also need to do the special accuracy testing and collect full pipeline metrics.

Aditi’s Status Update 12/10

This was a very eventful week for me. I spent over 24 hours this week working on various aspects of the project.

On Monday, I gave our final presentation. Later in the week, I tested hosting both the web server and the camera on the Jetson, which worked as expected. Once that was done, I tested our pipeline with live video footage. I found that our object detection algorithm was quite good at detecting chairs but was too sensitive: it mistakenly marked a backpack as a chair. Keeping this in mind, I wrote a script to capture images containing extra chairs to use as test images later. These work as test images because I know how many chairs the model should find in each one.

After this, I brainstormed how we might fix the issue. I looked into some inference parameters first and tried adjusting the confidence threshold, the NMS IoU threshold (which dictates how close two bounding boxes can be before they are considered the same object), and lastly the model classes. Adjusting the confidence threshold and the NMS IoU threshold did not make a significant impact, but using model classes increased the confidence for chairs and people, which was helpful.

That being said, the output classes for our custom-trained model only covered people, chairs, and tables. I suspected that the algorithm was still picking up other objects, since we had frozen most of the earlier layers, but because it had only 3 classification options it was trying to fit each object to one of those classes. With this in mind, I re-annotated our custom dataset to match all the object classes in COCO, including backpacks and laptops. I also looked for other datasets that have fewer classes but still include the ones we need, and found that PASCAL VOC may be a better fit. I tried training with the increased number of classes on the relabelled data, but my initial results were undesirable. I also wrote our sample thresholding algorithm.
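To make the two inference thresholds concrete, here is a minimal pure-Python sketch of confidence filtering followed by greedy non-maximum suppression. It is not YOLOv5's own implementation. The box format `(x1, y1, x2, y2)`, the function names, and the default threshold values are assumptions chosen for illustration.

```python
from typing import List, Sequence, Tuple

# Assumed box format: (x1, y1, x2, y2) with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(
    boxes: Sequence[Box],
    scores: Sequence[float],
    conf_thres: float = 0.25,  # illustrative default, not a tuned value
    iou_thres: float = 0.45,   # illustrative default, not a tuned value
) -> List[int]:
    """Return indices of the boxes that survive both thresholds."""
    # 1. Confidence threshold: drop low-scoring detections outright.
    candidates = [i for i, s in enumerate(scores) if s >= conf_thres]
    # 2. Greedy NMS: visit boxes from highest to lowest score and keep a box
    #    only if it does not overlap an already-kept box by more than iou_thres.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in candidates:
        if all(iou(boxes[i], boxes[k]) <= iou_thres for k in kept):
            kept.append(i)
    return kept
```

Raising `iou_thres` lets more heavily overlapping boxes survive as separate objects, while raising `conf_thres` discards weaker detections. Neither one changes which label the model assigns, which may be why these two settings alone did not stop a backpack from being labelled as a chair.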

Later in the week, I tried integrating the 2 cameras. I got both cameras working with the pipeline separately, but when I ran them at the same time the Jetson took a very long time to load the model. I used a tool called jetson-stats to monitor activity on the Jetson and found that the CPU and GPU were not being overloaded, but memory was maxing out. After reading a little more, I figured I might have to switch from PyTorch inference to TensorRT or DeepStream. I spent 5+ hours on Saturday working on this and managed to get YOLOv5 working with TensorRT. I still need to port our code to work with TensorRT, but I am quite proud of this accomplishment, along with everything else I managed to get done this week.
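The same kind of memory check can be scripted. The sketch below is not part of jetson-stats. It assumes a Linux system whose `/proc/meminfo` exposes `MemTotal` and `MemAvailable`, and the function names are hypothetical.

```python
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field: integer value}.

    Most fields are reported in kB; the unit suffix is ignored here.
    """
    info: Dict[str, int] = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # skip lines that are not "Name: value" pairs
        parts = rest.split()
        if parts and parts[0].isdigit():
            info[name.strip()] = int(parts[0])
    return info


def used_fraction(info: Dict[str, int]) -> float:
    """Fraction of RAM in use, based on MemTotal and MemAvailable."""
    total = info["MemTotal"]
    return (total - info["MemAvailable"]) / total


def read_used_fraction(path: str = "/proc/meminfo") -> float:
    """Read the file at `path` (assumed to exist) and report memory use."""
    with open(path) as f:
        return used_fraction(parse_meminfo(f.read()))
```

Logging `read_used_fraction()` before and after loading the model in each camera process would show how much memory each model copy costs.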

Team Status Report

After our talk with Professor Tamal, we decided to restructure our implementation to address scalability, which we had originally overlooked. Previously, the webserver called the CV algorithm directly. In that design, the time between a user requesting a room's status and seeing the change was quite large. The system was also not scalable: what if multiple users wanted to access multiple rooms? The implementation did not include multithreading, so users would have to wait until their request was serviced. Now, the CV algorithm and the webserver run as separate processes. The CV algorithm communicates with the webserver via POST requests. Every 10 samples, it takes the best sample and sends it to the server as a JSON file. When a user requests a room's status, the webserver sends the entire database of rooms, and filtering is done on the front end. This way there is less load on the webserver for both pre- and post-processing, and its ability to service user requests is not affected by the overall traffic. A majority of our discussions in the last 2 weeks have pertained to this. Mehar and Chen have been adapting their code for this change, and Aditi has been working on measuring metrics and testing various methods to speed up these processes on the Jetson.
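A minimal sketch of the CV-side reporting loop described above, using only the Python standard library. The sample fields (`room`, `chairs`, `score`), the use of `score` to decide which sample is "best", and the endpoint URL in the usage note are all hypothetical. Only the window of 10 samples, the JSON body, and the POST request come from the design.

```python
import json
import urllib.request
from typing import Iterable, Iterator, List

WINDOW = 10  # one update is sent for every 10 samples


def best_of_each_window(samples: Iterable[dict], window: int = WINDOW) -> Iterator[dict]:
    """Yield the highest-scoring sample from each full window of samples."""
    batch: List[dict] = []
    for sample in samples:
        batch.append(sample)
        if len(batch) == window:
            # "score" is an assumed per-sample quality measure.
            yield max(batch, key=lambda s: s["score"])
            batch = []


def build_request(url: str, sample: dict) -> urllib.request.Request:
    """Build a POST request carrying the sample as a JSON body."""
    body = json.dumps(sample).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_sample(url: str, sample: dict, timeout: float = 5.0) -> int:
    """Send the sample to the webserver and return the HTTP status code."""
    with urllib.request.urlopen(build_request(url, sample), timeout=timeout) as resp:
        return resp.status
```

The CV process could then run something like `for best in best_of_each_window(stream): post_sample("http://<server>/rooms/update", best)`, where `stream` and the URL path are placeholders. Because the webserver only receives these updates, it never has to wait on inference when a user asks for a room's status.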

Aditi’s Status Update 12/3

In my last update I was having issues running the Django webserver. Since then, I have debugged the issue and can now run the webserver, take images, and run inference on them. That being said, due to implementation choices, the entire pipeline is not working yet. The CV and Web modules are being reworked to run independently of each other so that this will no longer be an issue. This week I worked on measuring some of the metrics for the Jetson, such as YOLOv5 inference time and image capture time. I have also been experimenting with various parameters on the Nano to see whether they affect inference time and image capture time. This includes running inference on the CPU vs. the GPU and capturing images of different dimensions. Interestingly, using larger dimensions did not change the inference time. I am still in the process of debugging, but I am trying to see whether NVIDIA DeepStream will speed up inference. I am also planning to look into the difference in inference time between batch processing and single-image inference. I also spent time planning the tasks we need to finish before the presentation and report, and assigning a task to each team member. In the week prior to Thanksgiving, we spent many hours as a team restructuring our implementation to have a more modular code structure and a more cohesive story.
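Measurements like these can be collected with a small timing helper. The following is a sketch, not the script used for the numbers in this post. The helper name and its defaults are assumptions.

```python
import statistics
import time
from typing import Callable, Dict


def time_call(fn: Callable[[], object], repeats: int = 20, warmup: int = 3) -> Dict[str, float]:
    """Run fn several times and summarize its wall-clock duration in seconds."""
    # Warm-up runs are excluded so one-time costs (model loading, caches)
    # do not skew the result.
    for _ in range(warmup):
        fn()
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return {
        "mean_s": statistics.mean(durations),
        "median_s": statistics.median(durations),
        "max_s": max(durations),
    }
```

For example, `time_call(lambda: model(image))` would time inference and `time_call(capture_frame)` would time image capture, where `model`, `image`, and `capture_frame` stand in for the project's own objects. When timing PyTorch on the GPU, the timed function should wait for the GPU to finish (for example by calling `torch.cuda.synchronize()`), since CUDA work is launched asynchronously and the call can otherwise return before the result is ready.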

Team’s Status Update 11/19

This week the team worked on individual parts of the project. Mehar labelled the images we captured on Friday and began custom training on AWS. Chen began implementing the filter feature for the UI, and Aditi finished integration between the camera and the CV code. On Friday we revisited our goals for the MVP. A discussion with Prof. Tamal started a conversation about how our MVP would reflect a scaled version of our project, and what narrative or explanation we will give when asked about the scalability and adaptability of our MVP.

This may result in some internal restructuring of our project, like adding in a cloud server for the website or Raspberry Pi for running the server on the local network. Additionally this would affect how our database would function.

Aditi’s Status Update 11/19

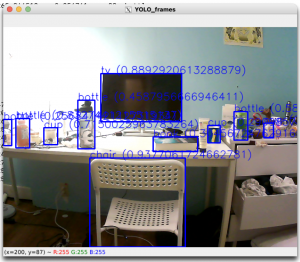

This week I continued to work on integration. The Jetson has continued to give me trouble. After exhausting my options for installing a torchvision build that would work at runtime, I decided to start from scratch. I had already followed the from-scratch install instructions from PyTorch.org, YOLOv5, the NVIDIA Developer website, and the NVIDIA forums, with no luck. I sat down with Adnan and Prof. Tamal to help me debug my current issues. I suspected there were compatibility issues between library versions, Python versions, and JetPack versions. After reflashing, I decided to use the latest version of Python supported by JetPack 4.6.1, which comes preinstalled when you flash the Jetson. I was very meticulous in reading the installation pages and finally managed to get YOLOv5 inference to work on the Nano! This is a big win for me, as I have been trying to set this up for weeks. It can take a photo with the USB camera, run inference, and output the bounding boxes and object labels. Unfortunately, we have run into a new roadblock. Our Django app is written for version 4.1, but Python 3.6.9 cannot run it: the newest Django release line that supports Python 3.6 is 3.2. Our existing code will need to be rewritten to support that older version.

Output from USB Camera and YOLOv5 — all done on Jetson

Team’s Status Update 11/12

This week our group as a whole worked on integration and data collection. From Sunday to Wednesday we worked on getting our various components working together. We refactored some of the existing code and created a virtual environment to ensure our code did not have unknown dependencies. Later in the week, we worked on creating the custom dataset for YOLO. We planned the set of images we were hoping to capture. We also reworked our Gantt chart to better reflect our tasks and current status.

Aditi’s Status Update 11/12

On Sunday I attempted to connect the Jetson Nano to CMU's local network. There were a lot of issues getting the Jetson connected. It appears that a device with the same MAC address was already registered on CMU's wired network. Looking into this further, it appears to be a known Jetson issue: a manufacturing error gave multiple Jetson Nanos the same supposedly unique MAC address. It is also possible that this particular Jetson had already been registered on the network. I tried to fix this using various suggestions from the Jetson blog and Stack Overflow, but I was unable to solve the issue. I switched to connecting the Jetson to CMU's local network using the Wi-Fi adapter. After finding the correct drivers and the appropriate library, I was able to set up a WLAN connection. I registered the wireless MAC address with CMU's network and connected successfully. The web application is now accessible using the Jetson's unique IP on CMU's network.

During the week I was trying to get YOLO working on the Jetson. There is a known numpy issue with the latest version which results in an invalid instruction error, so I was forced to downgrade torch and torchvision. After making these changes, new errors appeared: loading the YOLO weights using torch.load caused a segfault. I was unable to solve the compile-time issues, but I am now trying out a JetPack Docker container to see if I have more luck with that.

Aditi’s Status Update 11/05

This week I worked on integration of all of the various components onto the Jetson Nano.

- I attempted to create a virtual environment and install the required libraries but ran into errors due to different versions of python3

- I upgraded python3, which took additional steps because it was an Ubuntu system.

- Following this, there were a few more errors regarding specific libraries, which took time to resolve on the whole

- Once all the requirements were met, I tried to start the Django and Apache front-end.

- I had to set up a TightVNC server so that I could access the local website on the headless Jetson

- I had to change the port the website was being hosted on as it was on the same port as the VNC remote access

- Set up the website to be accessible via LAN

- There were issues with the way we were loading the YOLO weights which affected the front-end; these also needed to be debugged.
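A port clash like the one between the website and the VNC server can be checked for before starting a server. This is a minimal sketch using Python's standard `socket` module. The helper names are hypothetical, and the port numbers in the usage note are placeholders rather than the ports used on the Jetson.

```python
import socket
from typing import Iterable, Optional


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if something is accepting TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(0.5)
        # connect_ex returns 0 on success instead of raising an exception.
        return s.connect_ex((host, port)) == 0


def first_free_port(candidates: Iterable[int], host: str = "127.0.0.1") -> Optional[int]:
    """Return the first candidate port with no listener, or None if all are taken."""
    for port in candidates:
        if not port_in_use(port, host):
            return port
    return None
```

For example, `first_free_port([8000, 8080, 8888])` picks a port for the website that no other local service is already listening on.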

Overall, I spent 8+ hours on integration. I had surgery earlier this week so I did not make much other progress.

Website being accessed via LAN

Team Status Update 11/05

No changes were made to the existing design. We worked mainly on integration this week. Mehar and Chen were able to push all changes to a shared repository and get their parts working together. Aditi ported the code to the Jetson Nano, setting up the environment and getting access to the website through the local network. We are all a little behind compared to the Gantt chart, so we need to be careful about how we spend the following weeks.