Overall, this week we mainly worked individually on our assigned sections. As a team, we re-split the computer vision workload to make sure everyone contributes equally. Namely, all members will now work on data collection and labeling; Aditi will additionally work on post-processing (sample combination/averaging) and will be in charge of the overall code structure that integrates all portions; and Mehar will work on preprocessing and model training. This week, we also went through the design review feedback and made notes to test the post-processing plan described in the paper, and to expand our testing to cover unintended factors such as lighting changes.
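The sample combination/averaging step in post-processing could look like the minimal sketch below. It assumes each sample (for example, one processed frame) has already been reduced to a per-seat occupied/free flag, and that a seat counts as occupied when it is occupied in a majority of samples; the seat ids, the data layout, and the 0.5 threshold are hypothetical.

```python
from collections import defaultdict


def combine_samples(samples, threshold=0.5):
    """Average per-seat occupancy over several samples and threshold the result.

    samples: list of dicts mapping a (hypothetical) seat id to True (occupied)
    or False (free). Returns a dict mapping each seat id to its combined flag.
    """
    totals = defaultdict(int)  # number of samples in which the seat was occupied
    counts = defaultdict(int)  # number of samples that reported the seat at all
    for sample in samples:
        for seat, occupied in sample.items():
            totals[seat] += int(occupied)
            counts[seat] += 1
    # A seat is occupied if its occupied fraction is above the threshold
    return {seat: totals[seat] / counts[seat] > threshold for seat in counts}
```

Averaging over several samples means a single missed or spurious detection (for example, someone briefly walking past a seat) does not flip the seat's reported state.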

Mehar’s Status Update for 10/29

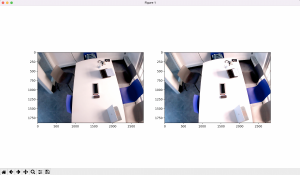

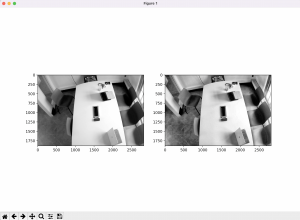

This week, I continued implementing the preprocessing section of the computer vision pipeline. The three main goals of preprocessing are to denoise the input images and to increase their contrast and brightness. Initially, I used the built-in denoising methods in the OpenCV library and a simple scaling function for contrast and brightness. I found that simply scaling up the image's RGB values was very rudimentary: many areas of the image were at risk of overexposure, and the method leaves little room for the preprocessing to adapt to each image. Researching further, I found another contrast/brightness adjustment method, CLAHE (Contrast Limited Adaptive Histogram Equalization), which equalizes the intensity histogram over small regions of the image while limiting how much the contrast can be amplified. This approach adapts to each image, and I found it was much better at increasing contrast without overexposing portions of the image.
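The difference between the two approaches shows up even on a toy example. The sketch below compares linear scaling (`out = alpha * p + beta`, clipped to 255, roughly what `cv2.convertScaleAbs` does) with contrast-limited histogram equalization on a flat list of 8-bit grayscale values. It is deliberately simplified: it equalizes a single region, whereas OpenCV's CLAHE (`cv2.createCLAHE(clipLimit=..., tileGridSize=...)`, then `.apply()` on a single-channel image) equalizes each tile separately and interpolates between tiles. The `alpha`, `beta`, and `clip_limit` values are arbitrary choices for illustration.

```python
def linear_scale(pixels, alpha, beta):
    """Naive contrast/brightness: out = alpha * p + beta, clipped to [0, 255]."""
    return [min(255, max(0, round(alpha * p + beta))) for p in pixels]


def clipped_equalize(pixels, clip_limit, levels=256):
    """Contrast-limited histogram equalization over one region (CLAHE without tiling)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Clip each bin at clip_limit and collect the excess counts
    excess = 0
    for i in range(levels):
        if hist[i] > clip_limit:
            excess += hist[i] - clip_limit
            hist[i] = clip_limit
    # Redistribute the excess evenly across all bins (remainder to the first bins)
    bonus, remainder = divmod(excess, levels)
    for i in range(levels):
        hist[i] += bonus + (1 if i < remainder else 0)
    # Cumulative distribution -> lookup table mapping old gray level to new
    n = len(pixels)
    lut, running = [], 0
    for count in hist:
        running += count
        lut.append(round((levels - 1) * running / n))
    return [lut[p] for p in pixels]
```

On a sample such as `[20] * 50 + [40] * 30 + [200] * 10 + [230] * 10`, linear scaling with `alpha=2.0, beta=30` maps both 200 and 230 to 255, so detail in the bright region is lost, while `clipped_equalize(..., clip_limit=40)` keeps all four gray levels distinct. For color images, a common approach is to apply CLAHE only to a lightness channel (for example, L in LAB) so that colors are not shifted.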

Overall, I wasn’t able to work as much this week due to assignments in other classes, so my goals for next week are to finish preprocessing and to work on model training.

Aditi’s Status Report 10/29

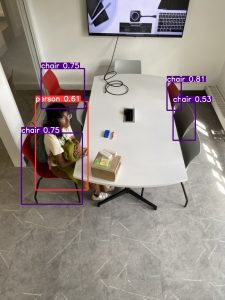

This week I successfully set up the camera and wrote the code to integrate the camera with YOLO. First, I tried following the instructions from the Jetson website, but the instructions using nvgstcapture did not work. I looked online to try to solve the errors, checking whether I was missing a camera driver or whether there was a display issue. After reading that this camera should be compatible, I looked further into display issues and realized I was getting an EGL display connection error. I had initially tried using the JetsonHacks camera-caps library, and I also tried seeing if a VNC would help, but it did not. After this, I tried a library specific to USB cameras, which I was able to get working, but not with GStreamer. I then fixed an X11 forwarding issue by changing the SSH flag from -X to -Y, which solved the EGL display issue, and managed to get GStreamer working. Finally, I wrote a simple script to take a picture and run it through YOLO.
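A minimal sketch of such a script is below. It assumes a USB camera at `/dev/video0` that supports 640x480 raw video at 30 fps, an OpenCV build with GStreamer support, and the YOLOv5 `yolov5s` model loaded through `torch.hub`; the device path, resolution, model variant, and the `capture.jpg` file name are all assumptions, not the values used on our Nano. The pipeline string is built by a separate function so it can be checked without a camera attached.

```python
def usb_camera_pipeline(device="/dev/video0", width=640, height=480, fps=30):
    """Build a GStreamer pipeline string that OpenCV can open with CAP_GSTREAMER."""
    return (
        f"v4l2src device={device} ! "
        f"video/x-raw, width={width}, height={height}, framerate={fps}/1 ! "
        "videoconvert ! video/x-raw, format=BGR ! appsink drop=true"
    )


def capture_and_detect(pipeline):
    """Grab one frame from the camera, save it, and run YOLOv5 on it."""
    # Imported here so usb_camera_pipeline() works without OpenCV/PyTorch installed
    import cv2
    import torch

    cap = cv2.VideoCapture(pipeline, cv2.CAP_GSTREAMER)
    ok, frame = cap.read()
    cap.release()
    if not ok:
        raise RuntimeError("could not read a frame from the camera")
    cv2.imwrite("capture.jpg", frame)  # hypothetical output file name
    # Downloads the model and weights on first use, so this needs network access
    model = torch.hub.load("ultralytics/yolov5", "yolov5s")
    results = model(frame[..., ::-1])  # OpenCV frames are BGR; YOLOv5 expects RGB
    results.print()  # prints a per-class detection summary
    return results
```

Calling `capture_and_detect(usb_camera_pipeline())` on the Nano grabs a single frame and prints what YOLOv5 found in it. When running over SSH with any display output, X11 forwarding still has to be enabled (for example with `ssh -Y`, as above).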

Mehar’s Status Update for 10/22

The week before break, most of my time was spent working on the design report: I researched further into feature descriptor-based object detection and compiled my benchmark results for the report. In the report, I was responsible for the computer vision trade studies and design implementation sections, as well as the introduction, schedule, and team member responsibility sections. Overall, this was about 9+ hours of work.

Beyond this, during the break I started implementing the preprocessing and the overall code structure of our project to integrate the preprocessing, model, and post-processing sections. This took about 3-4 hours of research and planning overall.

Chen 10/22 Status Report

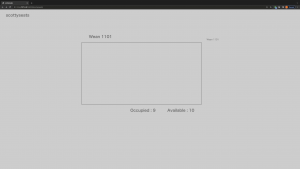

The basic framework has been built, creating a full pipeline: image -> YOLOv5 -> coordinate and size information output to a txt file (the YOLOv5 default output format) -> the server parses the file -> data post-processing adjusts for perspective -> display on the website interface.
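For reference, YOLOv5's `--save-txt` option writes one line per detection in the form `class x_center y_center width height`, with coordinates normalized to [0, 1] by the image size; `--save-conf` appends the confidence as a sixth value. A minimal parser the server could use is sketched below; the `Detection` class and the conversion to pixel units are illustrative choices, not the project's actual code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    cls: int        # class index from the model
    x: float        # box center, pixels
    y: float
    w: float        # box size, pixels
    h: float
    conf: Optional[float] = None


def parse_yolo_txt(text: str, img_w: int, img_h: int) -> List[Detection]:
    """Parse YOLOv5 --save-txt output ('class xc yc w h [conf]', normalized)."""
    detections = []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        cls = int(fields[0])
        xc, yc, w, h = (float(v) for v in fields[1:5])
        conf = float(fields[5]) if len(fields) > 5 else None  # only with --save-conf
        # Scale normalized values back to pixel units
        detections.append(Detection(cls, xc * img_w, yc * img_h, w * img_w, h * img_h, conf))
    return detections
```

Converting to pixels early keeps the later perspective step working in the same units as the camera image.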

Currently, whenever the front end sends a POST request, the server parses the output file. In a later update, once the database is complete, this will change so that whenever the front end sends an HTTP request, the server responds with the information for the room being requested, which lets the project scale to multiple rooms.
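A minimal sketch of the planned per-room request handling, using Python's standard `http.server` module. The `/rooms/<room_id>` URL scheme, the JSON layout, and the in-memory `room_store` dict (standing in for the database that is not built yet) are all hypothetical; the actual back end may use a different framework.

```python
import json
import re
from http.server import BaseHTTPRequestHandler

ROOM_PATH = re.compile(r"^/rooms/([\w-]+)$")  # hypothetical URL scheme


def handle_get(path, room_store):
    """Return (status_code, json_body) for a GET on /rooms/<room_id>."""
    match = ROOM_PATH.match(path)
    if match is None:
        return 404, json.dumps({"error": "unknown path"})
    room_id = match.group(1)
    if room_id not in room_store:
        return 404, json.dumps({"error": f"no data for room {room_id}"})
    return 200, json.dumps({"room": room_id, "seats": room_store[room_id]})


class RoomHandler(BaseHTTPRequestHandler):
    room_store = {}  # stand-in for the planned database: room id -> seat data

    def do_GET(self):
        status, body = handle_get(self.path, self.room_store)
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(body.encode())
```

Serving it would be `HTTPServer(("", 8000), RoomHandler).serve_forever()` (with `HTTPServer` from `http.server`) after filling `RoomHandler.room_store`. Keeping the routing logic in `handle_get` lets it be tested without opening a socket.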

Additionally, a distance threshold will be applied in a later update to calculate seat availability.
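One way the distance threshold could work is sketched below: a seat is marked unavailable if any detected person is within the threshold distance of it. This assumes seat and person positions are already in the same 2D floor-map coordinates after the perspective post-processing; the seat ids and the 1.0 threshold in the example are made up. `math.dist` needs Python 3.8 or later.

```python
import math


def seat_availability(seats, people, threshold):
    """Mark a seat unavailable if any detected person lies within `threshold` of it.

    seats:  dict mapping a seat id to its (x, y) position on the floor map
    people: list of (x, y) person positions in the same coordinates
    Returns a dict mapping each seat id to True (available) or False (taken).
    """
    return {
        seat_id: all(math.dist(pos, person) > threshold for person in people)
        for seat_id, pos in seats.items()
    }
```

With seats at `(0, 0)` and `(3, 0)` and one person at `(0.5, 0)`, a threshold of 1.0 marks the first seat as taken and the second as available.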

This report also covers the week before fall break.

Aditi’s Status Report 10/22

Before fall break, I spent most of my time working on the design report. I was in charge of writing the use-case requirements, design requirements, hardware design studies, bill of materials, summary, and testing, verification and validation sections, as well as adding relevant implementation information. Writing these parts of the report took 6+ hours, as we were iterating on and reworking various parts along the way.

Mehar’s Status Report for 10/8

This week, my main goals were to look into preprocessing for the model, decide the type of data to collect, and start data collection. This changed a bit after Monday, when the question of image subtraction as an alternative to deep learning-based object detection came up. I didn’t have much knowledge of non-deep learning methods, so I switched gears to research them. I found that what we are looking for is background subtraction, and I looked into different feature detection methods and classification models we could use in tandem with it.
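As a rough illustration of background subtraction, the sketch below keeps a running-average background of a fixed camera view and flags pixels that differ from it by more than a threshold. It works on small grayscale images stored as nested lists to stay dependency-free; the update rate `alpha` and the threshold of 30 are assumed values. OpenCV offers comparable building blocks, such as `cv2.accumulateWeighted` for the running average and `cv2.createBackgroundSubtractorMOG2` for a more robust model.

```python
def update_background(background, frame, alpha=0.05):
    """Running-average background model: bg = (1 - alpha) * bg + alpha * frame."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(brow, frow)]
            for brow, frow in zip(background, frame)]


def foreground_mask(background, frame, threshold=30):
    """True where a pixel differs from the background by more than `threshold`."""
    return [[abs(f - b) > threshold for b, f in zip(brow, frow)]
            for brow, frow in zip(background, frame)]
```

A small `alpha` makes the background adapt slowly, so a person sitting still stays in the foreground for a while, but gradual lighting changes are eventually absorbed into the background.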

I read through parts of a textbook to learn more about non-deep learning-based object detection, along with some comparative studies. The main methods I found were HOG, SIFT, SURF, ORB and BRISK. So far, ORB and BRISK seem to offer a good tradeoff between computational complexity and the ability to pick up features in an image. For classification models to run on the feature extraction output, Naive Bayes and SVM are popular, along with a few others. As for data collection, I found a dataset we can use preliminarily to train the model before moving on to footage of the study space itself.
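ORB and BRISK produce binary descriptors, which are usually compared by Hamming distance (the number of differing bits). The sketch below shows brute-force matching with Lowe's ratio test, which keeps a match only when the best candidate is clearly closer than the second best. Descriptors are stored as Python ints here for simplicity (real ORB descriptors are 256 bits), and the 0.8 ratio is an assumed value; in OpenCV the equivalent is `cv2.BFMatcher(cv2.NORM_HAMMING)` with `knnMatch(..., k=2)`.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(query, train, ratio=0.8):
    """Brute-force Hamming matching with a ratio test.

    Returns (query_index, train_index) pairs for unambiguous matches.
    """
    matches = []
    for qi, q in enumerate(query):
        # Sort all candidates by distance; ties broken by train index
        dists = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        if not dists:
            continue
        # Accept the best match only if it clearly beats the runner-up
        if len(dists) == 1 or dists[0][0] < ratio * dists[1][0]:
            matches.append((qi, dists[0][1]))
    return matches
```

Because Hamming distance is just XOR plus a bit count, this comparison is much cheaper than the floating-point distances used for SIFT or SURF descriptors, which is part of why ORB and BRISK are attractive on limited hardware.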

With some midterms coming up, I didn’t get to work as much on getting one of these systems up and running, so I’ll be getting to that next week, along with starting data collection in the study space itself.

Aditi’s Status Report 10/8

This week, I worked on setting up the Jetson Nano. I was able to successfully flash the microSD card, connect the Jetson to my computer, and complete the headless setup. I was quite busy this week, so I did not manage to complete the camera setup for the Nano; I plan to work on that this coming week, since I will have more time. I also determined what additional purchases we will need for the Nano, such as Ethernet cables, and researched and chose a Wi-Fi dongle that is compatible with the Jetson.

Team Status Update 10/8

The implementation of our project did not change much this week; we each worked on our individual parts. Integration is definitely a worry for the team, so we are going to try to get some initial integration done by next week. We have switched to the Jetson Nano and were able to bring it up. After getting some questions during our presentation this week, we researched image subtraction further and discussed our motivation for using hardware versus AWS. This was a slower week for our team, as we all had other deadlines.

Chen’s Status Report for 10/9

I was the presenter for the design review this week, so that was part of my focus. During the preparation, we were able to settle the details of the implementation. This included choosing between overhead cameras and side cameras: overhead cameras are easier to transform into coordinates but might be less accurate for the CV algorithm, while side cameras might be more accurate at recognizing seats but are much harder to translate into 2D maps. We decided on side cameras, since accuracy is the most important. For this week, my main progress was building the environment for the web app, finishing the front end, and finishing part of the back end.
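One standard way to translate a side-camera view into a 2D map is a planar homography: four reference points on the floor whose map positions are known define a 3x3 matrix that maps any other floor point from image pixels to map coordinates. The sketch below solves for that matrix directly (the same computation as OpenCV's `cv2.getPerspectiveTransform`) and applies it. It only holds for points on the floor plane, so it assumes something like the bottom-center of each detection box is used; the reference points in the example are made up.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def perspective_transform(src, dst):
    """3x3 homography mapping four image points `src` to four map points `dst`."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), similarly for v
        a.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        b.append(v)
    h = solve(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(H, x, y):
    """Map an image point (x, y) to floor-map coordinates."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)
```

For example, if the floor trapezoid with image corners `(100, 400)`, `(540, 400)`, `(620, 470)`, `(20, 470)` corresponds to a 4 x 3 area on the map, `perspective_transform` with `dst = [(0, 0), (4, 0), (4, 3), (0, 3)]` gives the matrix, and `apply_homography` then places each detected chair or person on the map.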

I expect to have a pseudo map showing by next week, and to finish displaying seats and tables, assuming we have the coordinate data soon. Further work after this will be assisting Mehar with the CV algorithm and data processing.