This week I focused on the final integration steps for the web app, in particular the transfer of arrays from the note scheduler (which describes what notes to play at which sample time) to the front-end piano, so that the correct set of key audio files can be triggered together. To that end, I set up the infrastructure for receiving these 2-D arrays and playing through them with the corresponding piano audio files. I tested the audio playback on several arrays and was able to reproduce the proper sounds. With the stream of data from the note scheduler, we will be able to play the audio files needed to recreate the original audio.

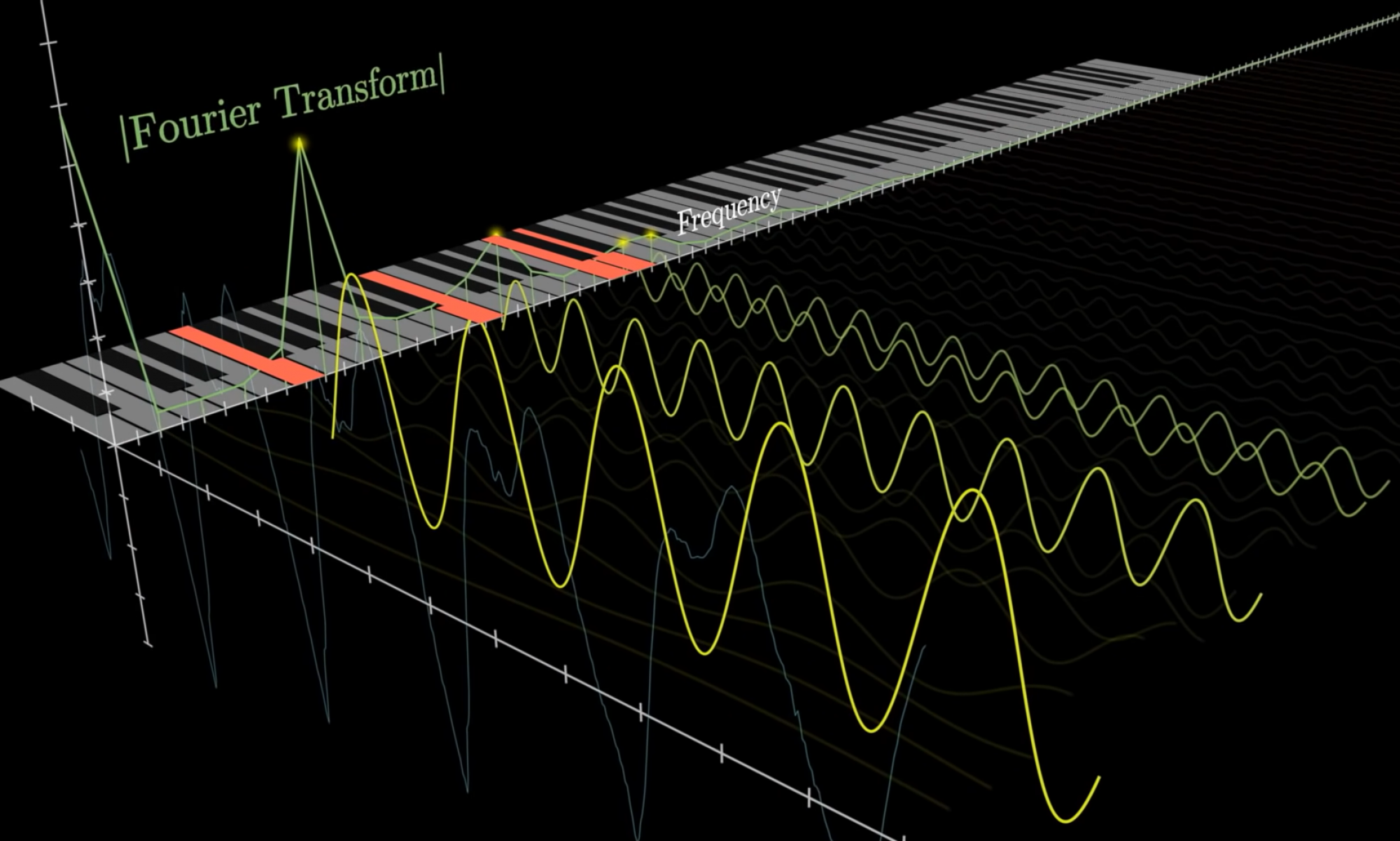
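As a rough illustration of the playback step described above, here is a minimal sketch of how a 2-D schedule could be turned into "trigger these keys together" events. The array layout is an assumption (one row per time frame, one column per key, a nonzero value meaning the key is sounding), and the function name `note_on_events` is hypothetical. It is written in Python for brevity; the actual front end would do the equivalent in the browser.

```python
from typing import List, Tuple


def note_on_events(schedule: List[List[int]]) -> List[Tuple[int, List[int]]]:
    """Return (frame_index, keys) pairs for keys that start sounding in each frame.

    Assumed layout: schedule[frame][key] is nonzero while that key is active.
    """
    events: List[Tuple[int, List[int]]] = []
    # Before the first frame, no key is sounding.
    previous = [0] * (len(schedule[0]) if schedule else 0)
    for frame_index, frame in enumerate(schedule):
        # A key is triggered only when it goes from inactive to active,
        # so a held note does not restart its audio file on every frame.
        started = [
            key
            for key, (now, before) in enumerate(zip(frame, previous))
            if now and not before
        ]
        if started:
            events.append((frame_index, started))
        previous = frame
    return events


# Toy schedule with 5 frames and 4 keys (a real piano would have 88 columns).
schedule = [
    [1, 0, 1, 0],  # keys 0 and 2 start together
    [1, 0, 1, 0],  # both still held, nothing new to trigger
    [0, 1, 0, 0],  # key 1 starts
    [0, 0, 0, 0],  # silence
    [1, 0, 0, 0],  # key 0 starts again
]
events = note_on_events(schedule)
print(events)
```

Each event groups all keys that begin in the same frame, which is what lets the piano trigger their audio files at once.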

I have also been working on the visualization aspect of the virtual piano so that users can see which keys are being played to create the sounds they are hearing. Here is a snippet of the piano playing a subset of a large array of notes:

With good progress on the audio processing side, we have been able to listen back to audio files that were processed to include only the frequencies of piano keys. These filtered recordings give us a good baseline against which to compare our piano-recreated sounds, which helps guide our optimizations.
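For reference, the set of "piano key frequencies" can be computed directly. The sketch below assumes a standard 88-key piano in twelve-tone equal temperament with A4 (key 49) tuned to 440 Hz; our processing code may use a different key range or tuning, and the name `key_frequency` is only illustrative.

```python
def key_frequency(key_number: int) -> float:
    """Frequency in Hz of a piano key, numbered 1 (A0) through 88 (C8).

    Assumes equal temperament with A4 (key 49) at 440 Hz.
    """
    if not 1 <= key_number <= 88:
        raise ValueError("key_number must be between 1 and 88")
    # Each semitone step multiplies the frequency by 2 ** (1 / 12).
    return 440.0 * 2 ** ((key_number - 49) / 12)


# Frequencies for all 88 keys, lowest to highest.
PIANO_FREQUENCIES = [key_frequency(n) for n in range(1, 89)]

print(round(PIANO_FREQUENCIES[0], 2))   # lowest key, A0
print(round(PIANO_FREQUENCIES[39], 2))  # key 40, middle C
print(round(PIANO_FREQUENCIES[-1], 2))  # highest key, C8
```

Keeping only energy near these 88 frequencies is one way to obtain the kind of filtered baseline described above.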

Lastly, I have been looking at AWS for hosting our finished product and researching EC2 instance types that would give the web app access to strong GPU and CPU resources so that computation runs quickly. Once this is set up, we expect processing and audio playback to run smoothly and efficiently. We are planning to use an instance with at least 8 CPU cores so that we can parallelize our code through threading.

Next, I will polish the integration and data transfer between the three main sections of our code to optimize the user experience, and then push everything to the AWS instance.