This week, I focused on recreating the input audio using piano key audio files. To do this, I needed three things: audio files for every key on the piano (to reconstruct the sounds), a piano design for visualization on the web app, and JavaScript functions that interpret the note scheduler list and play the correct notes at each sample.

For the audio files, I finally found all the necessary notes in a public GitHub repository (https://github.com/daviddeborin/88-Key-Virtual-Piano). This let me extract each note and use it for playback. With these files, I can play many notes in parallel, set the volume of each one (using information encoded in the note scheduler), and decide whether a key needs to be re-pressed on any given sample.

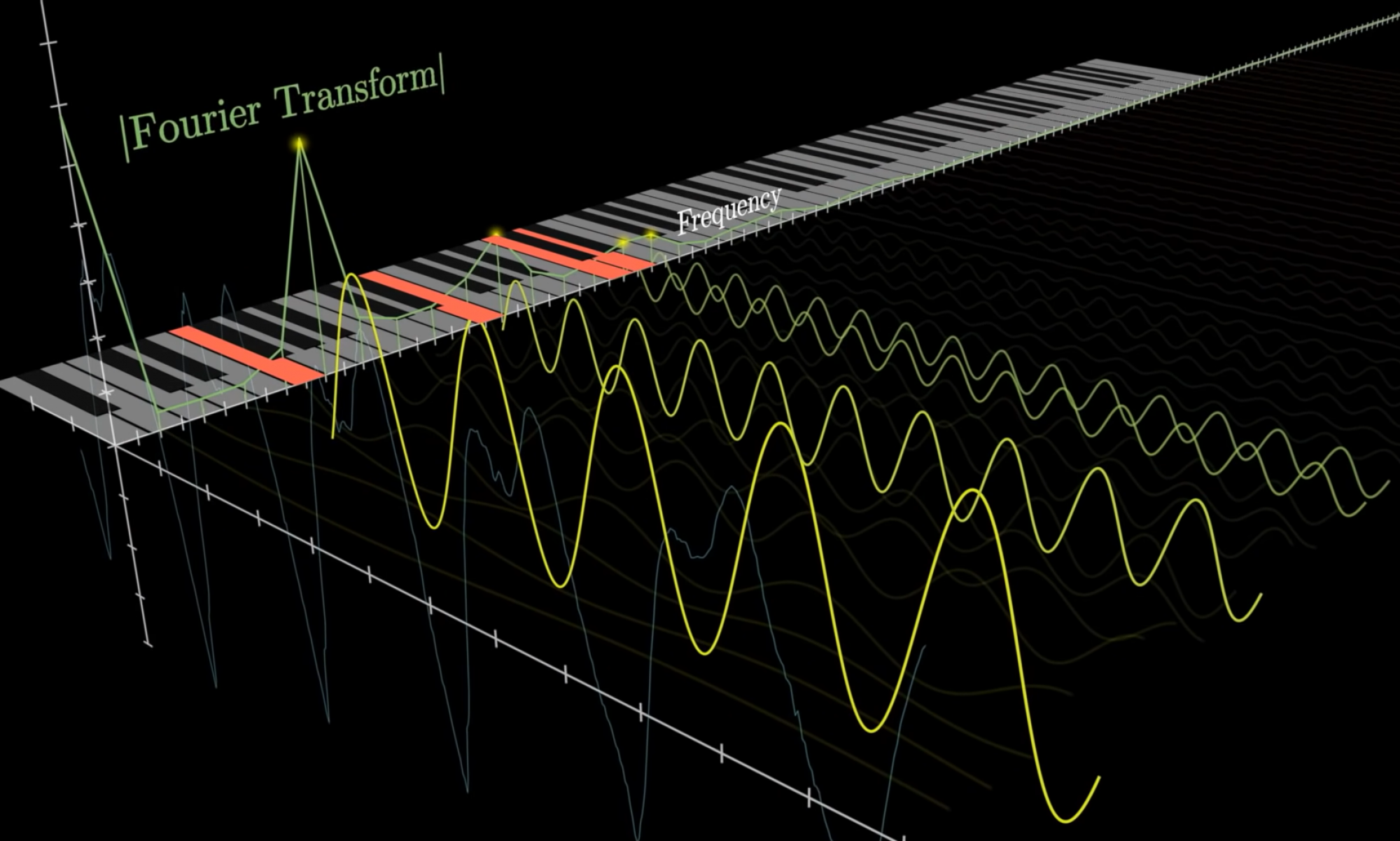
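
As a rough sketch of the per-sample decision described above (parallel notes, per-note volume, and re-pressing), the logic could look something like the following. The frame shape `{ key, volume, repress }`, the function name `planFrame`, and the action names are assumptions made for illustration, not the actual note scheduler format. The sketch only decides what should happen on each sample; starting and stopping the audio files would be layered on top.

```javascript
// Hypothetical frame shape: an array of { key, volume, repress } entries,
// one per key that should sound during this time sample.
// activeKeys is a Set of the key numbers that were sounding on the previous sample.
function planFrame(frame, activeKeys) {
  const actions = [];
  const nextActive = new Set();

  for (const { key, volume, repress } of frame) {
    if (volume <= 0) continue; // silent entries do not sound at all
    nextActive.add(key);
    const clamped = Math.min(1, volume); // keep playback volume within 0..1

    if (repress || !activeKeys.has(key)) {
      // New note, or an explicit re-press: restart the key's audio file.
      actions.push({ type: "press", key, volume: clamped });
    } else {
      // Key is already sounding: let it ring, only adjusting the volume.
      actions.push({ type: "sustain", key, volume: clamped });
    }
  }

  // Any key that was sounding but is absent from this frame gets released.
  for (const key of activeKeys) {
    if (!nextActive.has(key)) actions.push({ type: "release", key });
  }

  return { actions, nextActive };
}
```

Feeding `nextActive` from one call into the next as `activeKeys` carries the state from sample to sample, so several keys can be pressed, sustained, and released independently within the same frame.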

For the piano visualization, I was able to get a 69-key piano formatted for our web app after drawing inspiration from, and wrestling with, the HTML, CSS, and JavaScript code from the Pianolizer Project (https://github.com/creaktive/pianolizer). We can now see a piano on our web app as the sounds are played.

Up next, I plan to tie everything together with functions that play the notes for each time sample in parallel to recreate the audio. I will use the piano design to change the color of the keys currently being played, so users can see which notes are sounding as they hear them. Lastly, I will be working with Angela to incorporate a speech-to-text analyzer for both the input and output audio to see if the program can capture the word being ‘spoken’ by the piano.
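
One possible way to approach the key coloring is to compare the keys sounding on the previous sample with the keys sounding now, and only touch the keys that changed. The sketch below assumes each sample's keys are available as a `Set` of key numbers. The names `diffHighlights`, `applyHighlights`, `getKeyElement`, and the `"active"` CSS class are hypothetical, and nothing here is taken from the Pianolizer code.

```javascript
// Work out which keys need to change color between two consecutive samples.
function diffHighlights(previousKeys, currentKeys) {
  const turnOn = [...currentKeys].filter((key) => !previousKeys.has(key));
  const turnOff = [...previousKeys].filter((key) => !currentKeys.has(key));
  return { turnOn, turnOff };
}

// Apply the changes to the page.
// getKeyElement is a hypothetical lookup from a key number to that key's DOM
// element; className is an assumed CSS class that colors a pressed key.
function applyHighlights(diff, getKeyElement, className = "active") {
  for (const key of diff.turnOn) getKeyElement(key).classList.add(className);
  for (const key of diff.turnOff) getKeyElement(key).classList.remove(className);
}
```

In the browser, `getKeyElement` could be something like ``(key) => document.getElementById(`key-${key}`)`` if each key element were given an id of that form, which is an assumption about the markup. Updating only the changed keys avoids restyling the whole keyboard on every sample.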