Self-playing pianos have been around for a very long time, dating as far back as the 1900s with pneumatic player pianos. Our project uses an electro-mechanical self-playing piano to reproduce human speech using piano notes. Speech is composed of sound waves of various frequencies, and each piano key, when played, produces a note of a specific frequency. Multiple keys played together can evoke the phonemes of human speech, allowing a piano to speak and be understood. If you’re interested in hearing what that sounds like, check out this video from Mark Rober, where he demonstrates his talking piano. If you’d like to learn more about what goes into building a talking piano, make sure to visit our developer blogs during the semester!

Here’s a brief overview of everything we’ll be working on:

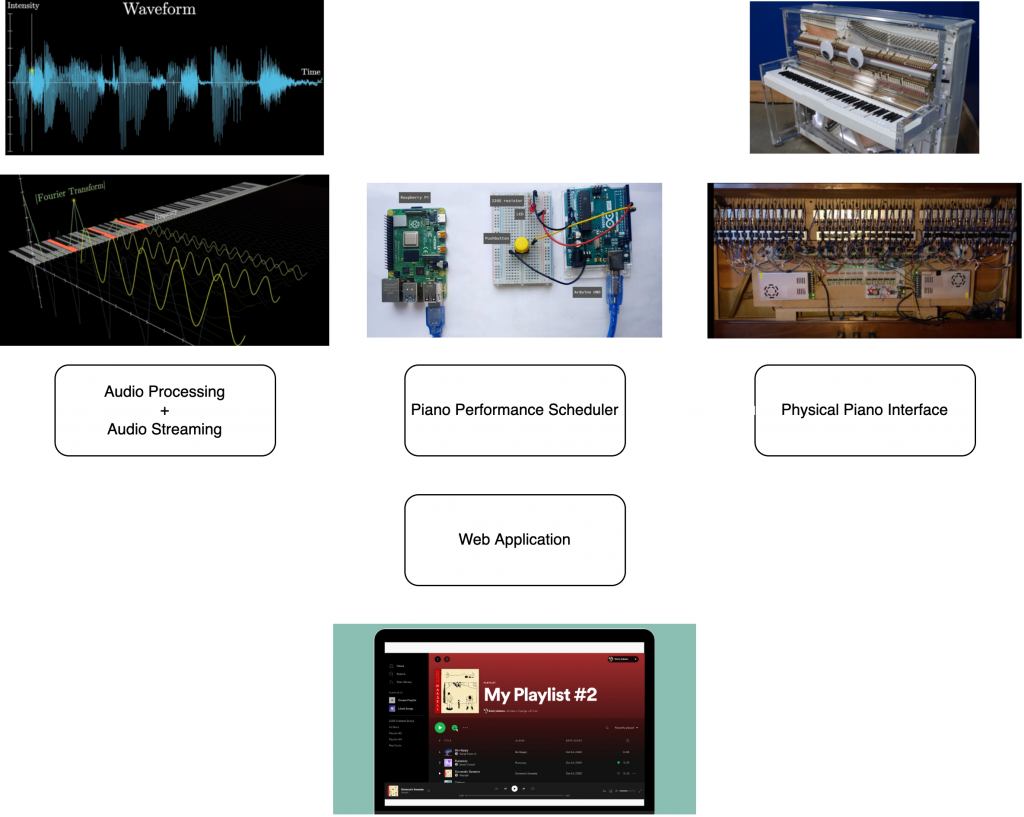

The project has four main areas of development.

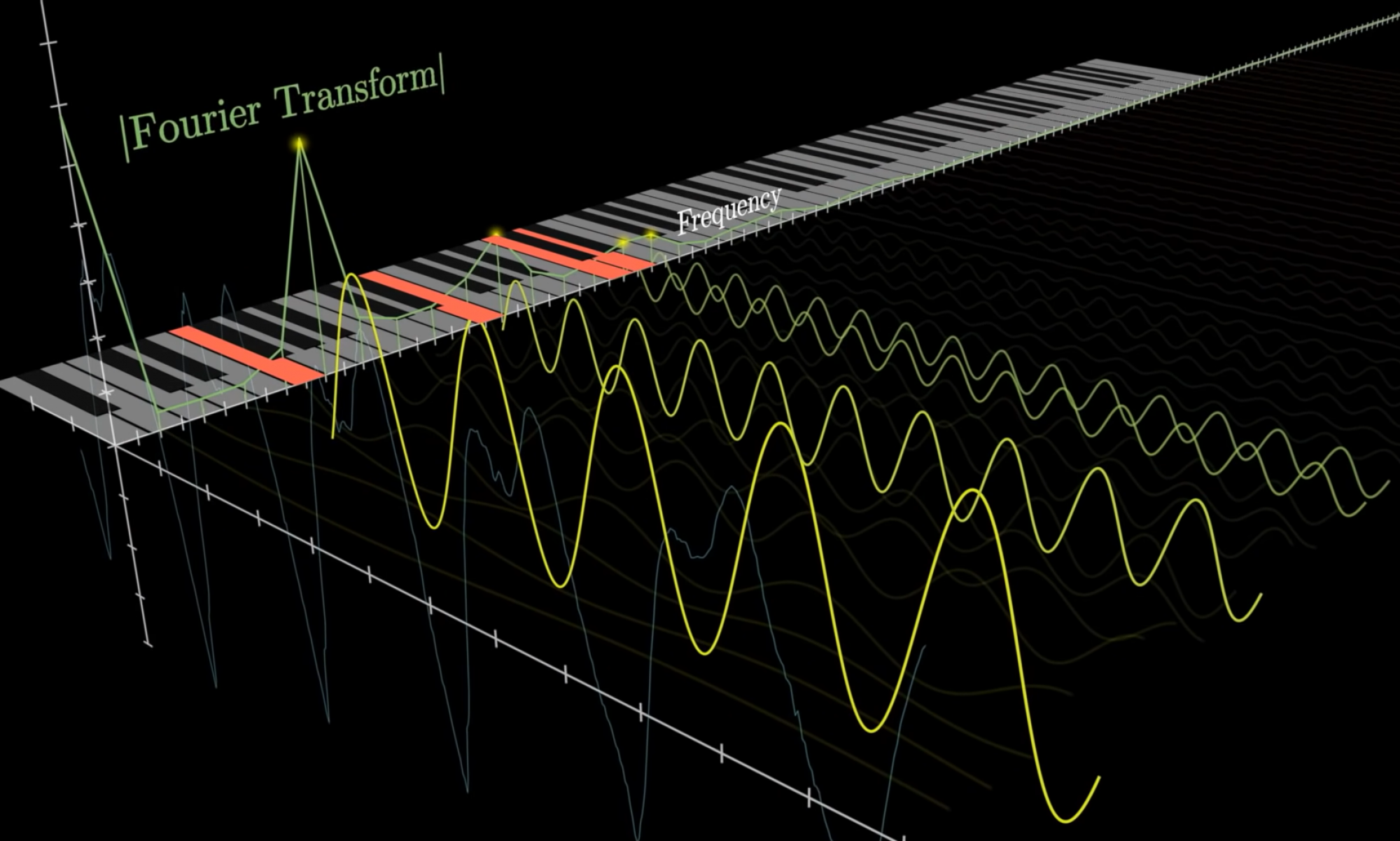

Audio Processing and Streaming: Handles all the ‘math’ involved with turning your voice into piano notes. At a really high level, every key on a piano corresponds to some frequency and our voice is also made up of several frequencies. Our goal is to find all the possible keys on a piano that match the frequencies that make up our voice using signal processing techniques like the Fourier Transform and filtering.
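To make the idea concrete, here is a minimal sketch of matching frequencies to piano keys. It is not our actual pipeline: the function names (`freq_to_key`, `dft_magnitudes`, `strongest_keys`) are made up for illustration, it assumes a standard 88-key piano tuned so that A4 (key 49) is 440 Hz, and it uses a slow, naive discrete Fourier transform from the Python standard library rather than an optimized FFT.

```python
import cmath
import math

A4_FREQ_HZ = 440.0  # assumed tuning reference
A4_KEY = 49         # A4 is key 49 on a standard 88-key piano


def freq_to_key(freq_hz):
    """Return the nearest piano key number (1..88), or None if out of range."""
    if freq_hz <= 0:
        return None
    # Each key is one semitone, and 12 semitones double the frequency.
    key = round(12 * math.log2(freq_hz / A4_FREQ_HZ)) + A4_KEY
    return key if 1 <= key <= 88 else None


def dft_magnitudes(samples):
    """Naive DFT: magnitude of each frequency bin up to half the sample rate."""
    n = len(samples)
    return [
        abs(sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2)
    ]


def strongest_keys(samples, sample_rate, count=3):
    """Return up to `count` distinct piano keys for the loudest frequency bins."""
    n = len(samples)
    mags = dft_magnitudes(samples)
    # Rank bins from loudest to quietest, skipping bin 0 (the DC offset).
    ranked_bins = sorted(range(1, len(mags)), key=lambda k: mags[k], reverse=True)
    keys = []
    for k in ranked_bins:
        key = freq_to_key(k * sample_rate / n)  # bin index -> frequency in Hz
        if key is not None and key not in keys:
            keys.append(key)
        if len(keys) == count:
            break
    return keys
```

For example, `freq_to_key(440.0)` returns `49` (A4). A real implementation would process the audio in short overlapping windows and use the bin magnitudes to decide how hard to strike each key, which this sketch leaves out.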

Piano Performance Scheduling: Controls all the circuitry on board the piano and reproduces piano performances.

Web Application: This is where all the controls for the piano are hosted. Here you can upload speech recordings, convert speech live, replay old recordings, and do many of the things you’d normally do on a music app like Spotify.

Physical Piano Interface: This is the electro-mechanical system that presses all the keys on the piano!