Early this week, I focused on polishing the web app for the demo. I integrated the audio processing code so that when a user uploads an audio file, the Python processing code reads that file, processes it, and outputs audio built from the piano note frequencies. Along the way I ran into file format issues with the audio recorded in the web app. The Python processing code expects a .wav file with a bit depth of 16 (16 bits for the amplitude range); however, the web app recorded in WebM (a file format supported by major browsers). I tried to convert the WebM file to WAV directly using the native Django and JavaScript libraries, but there were still issues getting the file header into the .wav format. Luckily, after discussing it as a group, we came across a JavaScript library called ‘Recorderjs’ (https://github.com/mattdiamond/Recorderjs). It let us record the audio directly to .wav (bypassing the WebM format) with the correct bit depth and sample rate (48 kHz). With this library I was able to glue the web app code to the audio processing code, so the web app now takes in the audio and displays all the graphs of the audio as it moves through the processing pipeline.

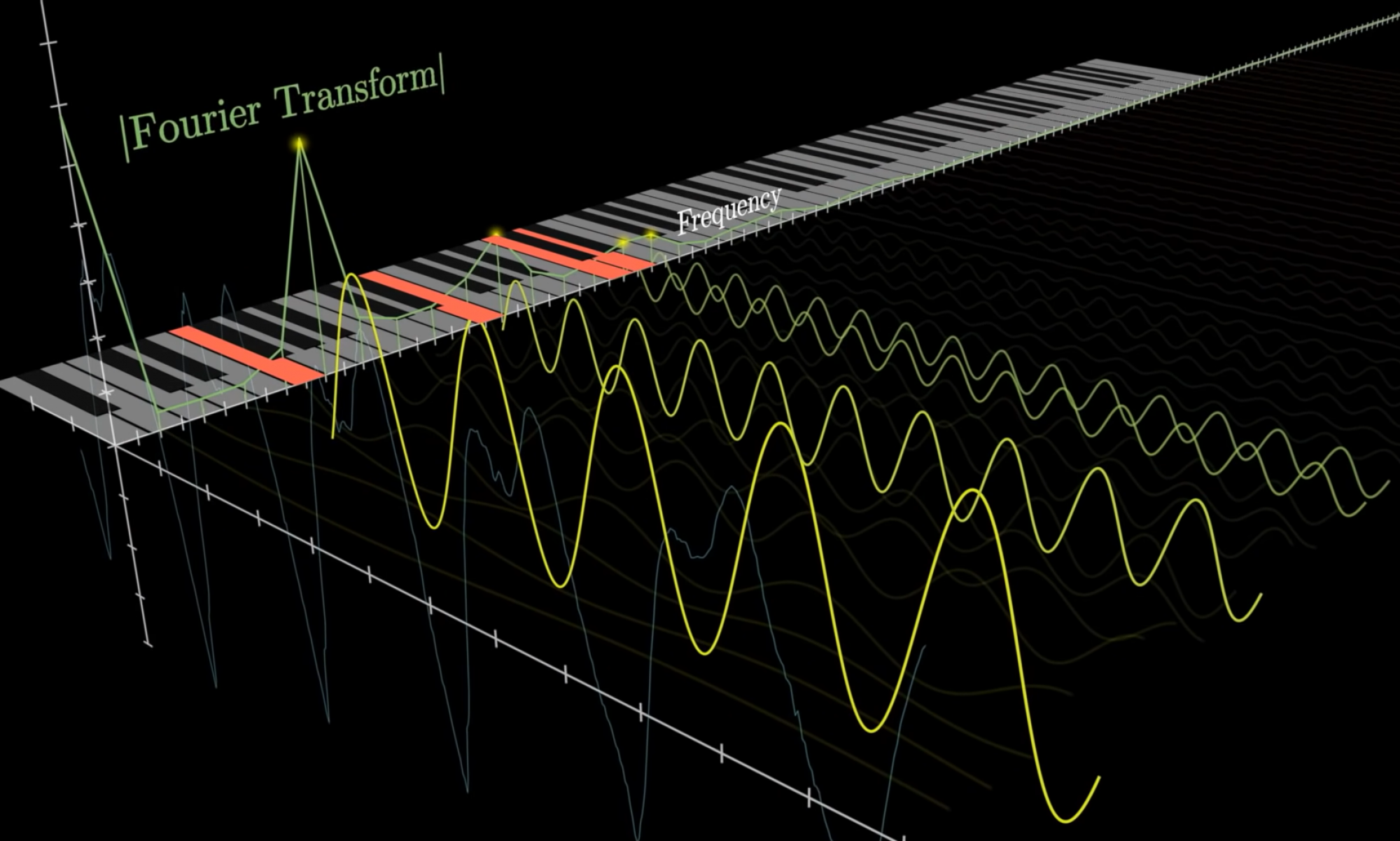
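
The format requirement described above (16-bit .wav at 48 kHz) can be checked before the processing code ever touches an upload. The following is only a minimal sketch using Python's standard `wave` module; the function name `check_wav` and the two constants are illustrative and not part of our actual codebase.

```python
import wave

# Assumed requirements, taken from the description above.
EXPECTED_SAMPLE_WIDTH_BYTES = 2   # 16-bit samples
EXPECTED_SAMPLE_RATE_HZ = 48_000  # 48 kHz


def check_wav(file_obj):
    """Return a list of problems found in a WAV header (empty if none).

    `file_obj` may be a path or a binary file-like object, for example
    an uploaded file opened in binary mode.
    """
    problems = []
    try:
        with wave.open(file_obj, "rb") as wav:
            width = wav.getsampwidth()
            rate = wav.getframerate()
            if width != EXPECTED_SAMPLE_WIDTH_BYTES:
                problems.append(f"bit depth is {8 * width}, expected 16")
            if rate != EXPECTED_SAMPLE_RATE_HZ:
                problems.append(f"sample rate is {rate} Hz, expected 48000 Hz")
    except (wave.Error, EOFError) as exc:
        # Raised when the data has no valid RIFF/WAVE header,
        # which is what happens with a WebM recording.
        problems.append(f"not a readable WAV file: {exc}")
    return problems
```

An empty list means the header matches what the processing code expects; anything else can be reported back to the user instead of failing deep inside the pipeline.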

We were not able to play back the final processed audio because we had difficulty performing the inverse Fourier transform with the data we had. To better understand Fourier transforms and our audio processing ideas, I talked to Professor Tom Sullivan after one of my classes with him. He explained the advantages of using Hamming windows for the processing and how we could potentially modify our sampling rate to save processing time and better isolate the vocal range for a higher-resolution Fourier transform.
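
To make the sampling rate idea concrete, here is a small sketch of the arithmetic, assuming the modification is a reduction in sampling rate. For a fixed window length N, the spacing between FFT bins is fs / N, so a lower fs gives finer frequency resolution with the same amount of computation per window. The window length of 4096 and the reduced rate of 8 kHz are illustrative values I picked, not settings we have committed to.

```python
import math


def hamming_window(n):
    """Hamming window coefficients: w[k] = 0.54 - 0.46 * cos(2*pi*k / (n - 1))."""
    if n < 2:
        return [1.0] * n
    return [0.54 - 0.46 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]


def bin_spacing_hz(sample_rate_hz, window_length):
    """Frequency spacing between adjacent FFT bins."""
    return sample_rate_hz / window_length


def window_duration_s(sample_rate_hz, window_length):
    """Length of audio covered by one window, in seconds."""
    return window_length / sample_rate_hz


def apply_window(samples, window):
    """Multiply one frame of samples by the window, element by element."""
    return [s * w for s, w in zip(samples, window)]


if __name__ == "__main__":
    n = 4096  # illustrative window length
    for fs in (48_000, 8_000):  # current rate vs. a hypothetical reduced rate
        print(fs, bin_spacing_hz(fs, n), window_duration_s(fs, n))
```

With these example numbers the bin spacing drops from about 11.7 Hz to about 1.95 Hz, but each window then covers 512 ms of audio instead of about 85 ms, so frequency resolution is traded against time resolution. Reducing the rate also requires low-pass filtering before downsampling to avoid aliasing.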

With this information, we are in the process of configuring our audio processing to allow for modular changes to many parameters (FFT window, sample rate, note scheduling thresholds, etc.). I am also currently fixing the audio playback so that we can hear the processed audio and get an idea of how well our processing performs.
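
One way to keep these parameters modular is to gather them in a single configuration object that gets passed through the pipeline. This is only a sketch of the idea; the class name, field names, and default values below are hypothetical placeholders rather than our actual settings.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ProcessingConfig:
    """Hypothetical bundle of tunable audio processing parameters."""

    sample_rate_hz: int = 48_000   # placeholder default
    fft_window_size: int = 4096    # placeholder default
    window_type: str = "hamming"
    note_threshold: float = 0.1    # placeholder note scheduling threshold

    def __post_init__(self):
        # Reject values that could never produce a valid transform.
        if self.sample_rate_hz <= 0:
            raise ValueError("sample_rate_hz must be positive")
        if self.fft_window_size <= 0:
            raise ValueError("fft_window_size must be positive")


# Variants for an experiment are derived without mutating the baseline.
baseline = ProcessingConfig()
low_rate = replace(baseline, sample_rate_hz=8_000)
```

Making the object immutable means each experiment run is tied to exactly one set of values, which should make results easier to compare.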

My plan for the upcoming week is to work with the group to decide how we will set up our testing loop (input audio with different parameters, hear what it sounds like, see how long the processing takes, evaluate, then iterate). I will also be integrating the note scheduling code with our backend so that we can control the stream of data sent to the Raspberry Pi via sockets.
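
The timing part of that testing loop could look roughly like the sketch below. It assumes the processing code can be called as a function that takes the samples plus keyword parameters; `placeholder_process` stands in for that function and is not our real pipeline, and the parameter names in the usage example are hypothetical. Listening to the output remains a manual step.

```python
import itertools
import time


def run_sweep(process, samples, parameter_grid):
    """Run `process` once per parameter combination and time each run.

    `parameter_grid` maps a parameter name to the list of values to try.
    """
    names = list(parameter_grid)
    results = []
    for values in itertools.product(*(parameter_grid[name] for name in names)):
        params = dict(zip(names, values))
        start = time.perf_counter()
        output = process(samples, **params)
        elapsed = time.perf_counter() - start
        results.append({"params": params, "seconds": elapsed, "output": output})
    return results


def placeholder_process(samples, sample_rate_hz, fft_window_size):
    """Stand-in for the real processing: counts the full windows available."""
    return len(samples) // fft_window_size


if __name__ == "__main__":
    grid = {"sample_rate_hz": [48_000, 8_000], "fft_window_size": [2048, 4096]}
    for row in run_sweep(placeholder_process, [0.0] * 48_000, grid):
        print(row["params"], f"{row['seconds']:.6f} s")
```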