This week, we collected data from each of our team members and integrated the glove with the software system so that the classification can be done in real-time. We found that some letters that we expect to have high accuracy performed poorly in real time. Namely, the letters with movement (J and Z) did not do well. We also found that different letters performed poorly for each of our group members.

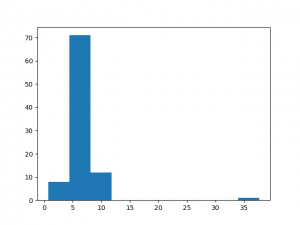

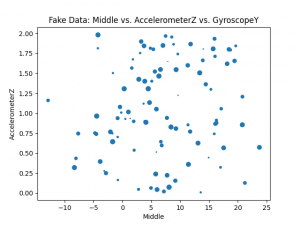

After our meeting with Byron and Funmbi, we had a bunch of things to try out. To see if our issue was with the data we had collected or perhaps with the placement of the IMU, we did some data analysis on our existing data as well as moved the IMU to the back of the palm from the wrist. We found that the the gyroscope and accelerometer data for the letters with movement are surprisingly not variable– this means that when we were testing real time, the incoming data was likely different from the training data and thus resulted in poor classification. The data from the IMU on the back of the hand has a 98% accuracy from just the data collected from Rachel; we will be testing it in real time this coming week.

We also found that our system currently can classify about 8.947 gestures per second, but this number will change when we incorporate the audio output. This rate is also too high for actual usage since people cannot sign that fast.

We are also in contact with a couple of ASL users who the office of disabilities connected us with.

We are still on schedule. This week we will work on parsing the letters (not continually classify them). We are also going to take data from a variety of people with different hand sizes, ideally. We will also experiment with capturing data over a time interval to see if that yields better results. We will also be improving the construction of the glove by sewing down the flex sensors more (so that they are more fitting to the glove) and doing a deeper dive into our data and models to understand why they perform the way they do. We will also hopefully be able to meet with the ASL users we are in contact with.