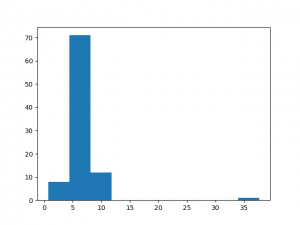

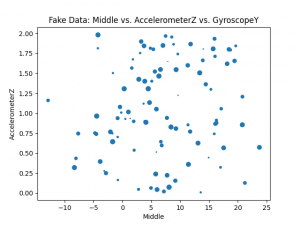

This week, I mainly focused on collecting more real-time data to test our glove’s performance under different conditions and on creating the slides for our final presentation. I tested the glove by varying the number of consecutive predictions it must see before outputting a letter, and by checking its performance at different signing rates (how fast the user signs). In doing so, I realized that both the number of consecutive predictions we require and the amount of buffer time we allow between signs will have to depend on our target user: for a novice learner, we would probably allow more buffer time, while for someone who is well-versed in the ASL letters, the buffer time should be reduced so that they can sign smoothly. I also created other plots (such as the data points of two entirely overlapping signs) to show in our presentation why our glove performs more poorly on some of the letters.
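To make the two tuning knobs concrete, here is a minimal sketch of how a consecutive-prediction filter with a buffer between signs could look. It is not our actual glove code: the names `ConsecutiveFilter`, `required`, `cooldown`, and `filter_stream` are made up for illustration, and counting the buffer in prediction frames (rather than in seconds) is an assumption.

```python
class ConsecutiveFilter:
    """Emit a label only after it has been predicted `required` times in a row.

    Hypothetical sketch: `required` and `cooldown` are illustrative names,
    and the buffer between signs is assumed to be measured in frames.
    """

    _NO_LABEL = object()  # sentinel that never equals a real prediction

    def __init__(self, required, cooldown=0):
        if required < 1:
            raise ValueError("required must be at least 1")
        if cooldown < 0:
            raise ValueError("cooldown must be non-negative")
        self.required = required
        self.cooldown = cooldown
        self._last = self._NO_LABEL
        self._run = 0
        self._skip = 0

    def update(self, label):
        """Feed one raw prediction; return a label to output, or None."""
        # During the buffer after an output, ignore incoming predictions.
        if self._skip > 0:
            self._skip -= 1
            return None

        # Track how many times in a row the same label has been seen.
        if label == self._last:
            self._run += 1
        else:
            self._last = label
            self._run = 1

        # Enough agreement: output the label and start the buffer period.
        if self._run == self.required:
            self._skip = self.cooldown
            self._last = self._NO_LABEL
            self._run = 0
            return label
        return None


def filter_stream(labels, required, cooldown=0):
    """Run a whole sequence of raw predictions through the filter."""
    f = ConsecutiveFilter(required, cooldown)
    outputs = []
    for label in labels:
        result = f.update(label)
        if result is not None:
            outputs.append(result)
    return outputs
```

With this shape, a novice-friendly setting would use a larger `cooldown` and a fluent-signer setting a smaller one. One consequence of this particular sketch is that a sign held past the buffer is output again, which is how a doubled letter would come through.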

While collecting data for these graphs, I also found some errors in the serial communication code that would occasionally cause the script to hang, so I fixed those this past week. This coming week, I will work with the rest of my team to complete the final report and create a video for our final demo. The glove also needs to be fixed and its sensors secured, so I will help secure the sensors so that the data we get from them remains consistent (inconsistent sensor data was another problem we found throughout the semester). After the sensors are secured, I will re-collect data from several people so that we can train our final model. Since everything we have left is clearly outlined and is what we expected to be doing this upcoming week, I would say we are right on track!