This week we implemented the audio portion of our project. Now, when a sign is made, the computer speaks the corresponding letter, and the audio output meets the rate we set in our requirements at the beginning of the semester. We also implemented a suggestion from the interim demos: creating a second model to distinguish between letters that are commonly mistaken for one another (R, U, V, W and M, N, S, T, E). However, this did not noticeably improve performance. We will continue analyzing the data for these easily confused letters to see whether we can improve their accuracy. Adding contact sensors to the glove may be the best solution.
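One common way to structure a "second model" for confusable letters is a two-stage classifier: a general model predicts a letter, and if that letter falls in one of the confusable groups, a specialist trained only on that group makes the final call. The sketch below shows that routing logic under stated assumptions. It is not our actual model: `primary`, `specialists`, `two_stage_predict`, and the feature layout are hypothetical placeholders, and only the two letter groups come from this report.

```python
from typing import Callable, Dict, FrozenSet, Sequence, Tuple

# Glove feature vector (for example, one angle per flex sensor); the layout is assumed.
Features = Sequence[float]
# Any model that maps a feature vector to a single letter (hypothetical interface).
Classifier = Callable[[Features], str]

# The groups of commonly confused letters named in this report.
CONFUSABLE_GROUPS: Tuple[FrozenSet[str], ...] = (
    frozenset("RUVW"),
    frozenset("MNSTE"),
)


def two_stage_predict(features: Features, primary: Classifier,
                      specialists: Dict[FrozenSet[str], Classifier]) -> str:
    """Classify with the general model, then let a group specialist refine confusable letters."""
    letter = primary(features)
    for group in CONFUSABLE_GROUPS:
        if letter in group:
            specialist = specialists.get(group)
            if specialist is None:
                return letter  # no second-stage model for this group yet
            refined = specialist(features)
            # Only accept a refinement that stays inside the same group.
            return refined if refined in group else letter
    return letter
```

Rejecting out-of-group answers keeps a specialist from overriding the general model with a letter it was never trained to distinguish. If the specialists see the same flex-sensor features as the general model, they may have little new information to work with, which is one reason additional inputs such as contact sensors could help more than a second model alone.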

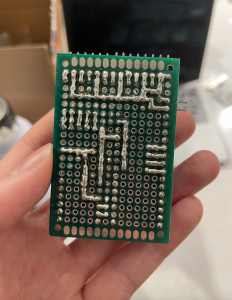

The Arduino Nano BLE arrived, and we are working on integrating Bluetooth into our system so the glove can work wirelessly. We have had some issues uploading scripts to the Arduino Nano BLE, which we hope to resolve next week.

In addition, the flex sensor on the glove's ring finger stopped responding: it read roughly 256 degrees no matter how far it was bent, so we replaced it. We should also calibrate the conversion from voltage to degrees in the Arduino script. Now that the glove is repaired, we will need to recollect our data.
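A simple calibration approach is two-point linear interpolation: record the raw analog reading with the finger straight (taken as 0 degrees) and bent to a known reference angle, then map later readings linearly between those points. The sketch below is written in Python for readability; the same arithmetic would port directly to the Arduino sketch. The class and function names, the 90-degree reference angle, and the `MIN_SPAN_COUNTS` fault threshold are all assumptions for illustration. Flex sensors in a voltage divider are not perfectly linear, so more calibration points or a lookup table may fit better.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import Sequence

# Hypothetical threshold: if bending the finger moves the raw reading by fewer
# counts than this, treat the sensor as faulty (e.g., a reading that no longer varies).
MIN_SPAN_COUNTS = 20.0


@dataclass(frozen=True)
class FlexCalibration:
    """Two-point linear calibration for a single flex sensor."""

    raw_straight: float      # mean raw reading with the finger straight (0 degrees)
    raw_bent: float          # mean raw reading at the reference bend angle
    ref_angle: float = 90.0  # reference angle in degrees (assumed)

    def to_degrees(self, raw: float) -> float:
        """Map a raw reading to an angle by linear interpolation between the two poses."""
        span = self.raw_bent - self.raw_straight
        return (raw - self.raw_straight) / span * self.ref_angle


def calibrate(straight: Sequence[float], bent: Sequence[float],
              ref_angle: float = 90.0) -> FlexCalibration:
    """Average readings at two known poses and reject sensors that barely respond."""
    raw_straight = fmean(straight)
    raw_bent = fmean(bent)
    if abs(raw_bent - raw_straight) < MIN_SPAN_COUNTS:
        raise ValueError("flex sensor reading barely changes between poses; check or replace it")
    return FlexCalibration(raw_straight, raw_bent, ref_angle)
```

Running the span check during calibration for each finger could flag a sensor like the failed ring-finger one before a data collection session rather than after.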

We are on schedule now that the audio integration is complete. In the final weeks, we will continue refining the system by tuning the hyperparameters of our model. We will also create plots of the system's performance for our final presentation, paper, and video.