This week our team discussed and finalized the hardware components and composed a bill of materials. Because the Intel RealSense takes up almost half of the budget, our current bill of materials would exceed the limit. We raised this challenge with the professors and greatly appreciated their understanding, as well as Tao's kindness in lending us his camera to experiment with.

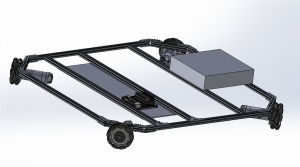

To satisfy the current draw requirements, we chose the [BTS7960 DC Stepper Motor Drive](https://www.amazon.com/BTS7960-Stepper-H-Bridge-Compatible-Raspberry/dp/B098X4SJR8/ref=sr_1_1?dchild=1&keywords=BTS7960%20DC&qid=1633569262&sr=8-1), since the motors draw up to 8.5 A. Unlike the SparkFun L298N motor drivers, each BTS7960 can drive only one motor instead of two. Hence, we would need six motor drivers in total, one per motor. We analyzed the pin layouts of the Arduino and the motor drivers and found that each motor driver requires 4 digital pins and 2 PWM pins on the Arduino. Since each Arduino has 6 PWM pins and 14 digital pins, we would need at least 2 Arduino boards to connect all of our components. Conveniently, the Jetson Xavier has two 5 V USB outputs, which can connect to at most 2 Arduino boards.

We finalized our selection of the battery as well. Our design should meet the technical and physical requirements, and we are ready to compose our design report, which is due next week.
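The pin budget above can be checked with a few lines of arithmetic. The sketch below is only an illustration: the function name `boards_needed` and the constant names are hypothetical, it assumes six motor drivers (one per motor), and it treats the PWM and digital pin counts as two separate pools, exactly as they are counted in the text.

```python
from math import ceil

# Figures taken from the text; the count of six drivers is an assumption
# (one single-channel driver per motor).
NUM_DRIVERS = 6
PWM_PER_DRIVER = 2
DIGITAL_PER_DRIVER = 4
PWM_PER_BOARD = 6
DIGITAL_PER_BOARD = 14


def boards_needed(drivers, pwm_per_driver, digital_per_driver,
                  pwm_per_board, digital_per_board):
    """Return the minimum number of boards that covers both pin pools."""
    # Boards required if only PWM pins were the constraint.
    pwm_boards = ceil(drivers * pwm_per_driver / pwm_per_board)
    # Boards required if only digital pins were the constraint.
    digital_boards = ceil(drivers * digital_per_driver / digital_per_board)
    # The tighter of the two constraints decides the board count.
    return max(pwm_boards, digital_boards)


min_boards = boards_needed(NUM_DRIVERS, PWM_PER_DRIVER, DIGITAL_PER_DRIVER,
                           PWM_PER_BOARD, DIGITAL_PER_BOARD)
print(min_boards)
```

With these numbers the drivers need 12 PWM pins and 24 digital pins in total, so both constraints give two boards, which matches the two USB outputs available on the Jetson Xavier.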