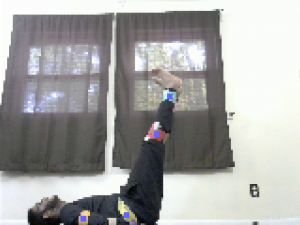

At the start of the week, my laptop’s screen broke and I had to send it in for repairs, so I couldn’t make as much progress as I would have liked. I will do more live fine-tuning of the posture analysis and HSV bounds in the coming weeks. This week I worked on handling invalid joint positions. When we integrated the FPGA with the application, my code crashed with a divide-by-zero error while computing a slope. The cause was that the FPGA could not detect some of the joints and output the origin for them. I therefore added checks to ensure that the joints I receive are at valid positions; if they are not, I output an invalid signal to the application. In the image below, the output of the application would be “Invalid: [‘Invalid Joints Detected: Shoulder!’]” because the shoulder cannot be detected.

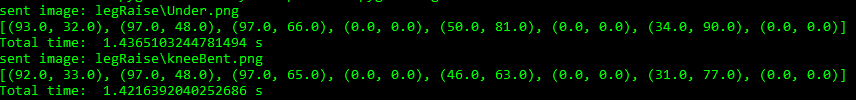

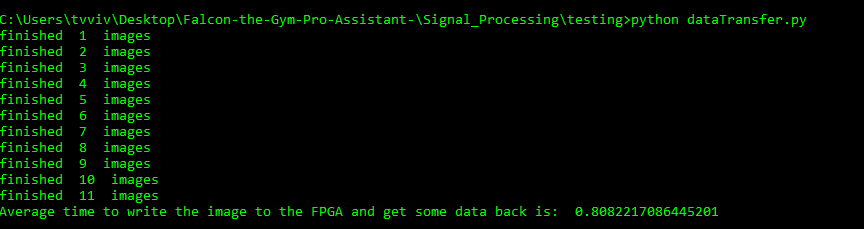

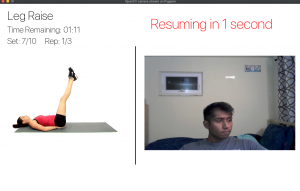
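
The validity check described above could look roughly like the following. This is only a minimal sketch, not the project's actual code: the function names (`find_invalid_joints`, `safe_slope`, `check_joints`), the dictionary layout of the joints, and the assumption that an undetected joint arrives as exactly `(0, 0)` are all hypothetical.

```python
# Assumption: the FPGA reports an undetected joint as the origin (0, 0).
ORIGIN = (0, 0)


def find_invalid_joints(joints):
    """Return the names of joints that were reported at the origin."""
    return [name for name, pos in joints.items() if tuple(pos) == ORIGIN]


def safe_slope(p1, p2):
    """Return the slope between two points, or None for a vertical line.

    Guarding dx == 0 avoids the divide-by-zero crash, which also occurs
    when both joints are reported at the same position.
    """
    dx = p2[0] - p1[0]
    if dx == 0:
        return None
    return (p2[1] - p1[1]) / dx


def check_joints(joints):
    """Return a (status, messages) pair for the application to display."""
    invalid = find_invalid_joints(joints)
    if invalid:
        names = ", ".join(name.capitalize() for name in invalid)
        return "Invalid", [f"Invalid Joints Detected: {names}!"]
    return "Valid", []


if __name__ == "__main__":
    # Hypothetical joint positions in pixel coordinates.
    status, messages = check_joints({"shoulder": (0, 0), "hip": (120, 200)})
    print(f"{status}: {messages}")
```

Running the check before any slope is computed means the posture analysis only ever sees joints that were actually detected, and `safe_slope` remains as a second guard for vertical limbs.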

Since we wanted to satisfy our requirement of giving feedback every 1.5 seconds, Venkata measured the time from receiving the image to outputting the posture analysis. He concluded that it is best to limit every workout to 5 joints so that we can deliver feedback in 1.42 seconds. Leg raises and lunges do not need more than 5 joints. For a pushup, we can relax the requirement so that we can provide more feedback on the lower body, find a way to avoid sending portions of the data to the FPGA, downscale the image even more, or remove one of the lower-body joints.

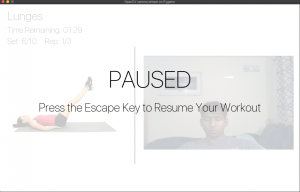
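
A latency measurement like the one described above can be sketched as follows. This is an illustrative sketch only, not the method Venkata used: the helper names `timed` and `within_budget` are hypothetical, and `fake_pipeline` merely stands in for the real image-to-feedback path.

```python
import time

# The 1.5 second feedback requirement stated in the text.
FEEDBACK_BUDGET_S = 1.5


def timed(fn, *args, **kwargs):
    """Call fn and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


def within_budget(elapsed_s, budget_s=FEEDBACK_BUDGET_S):
    """Return True if a measured latency meets the feedback requirement."""
    return elapsed_s <= budget_s


if __name__ == "__main__":
    # Hypothetical stand-in for capturing an image, sending it to the
    # FPGA, and running the posture analysis.
    def fake_pipeline():
        time.sleep(0.05)
        return "feedback"

    result, elapsed = timed(fake_pipeline)
    print(f"{result} in {elapsed:.2f} s, within budget: {within_budget(elapsed)}")
```

Timing the whole path from image to feedback, rather than each stage separately, matches the requirement as stated, since the 1.5 second limit applies to what the user experiences.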

Next week, I will conduct more live testing with the application, webcam, and FPGA integrated. I will also create ways to test the feedback and generate checks for the hardware side. I am on schedule in terms of overall progress.