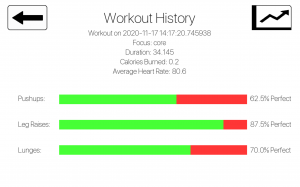

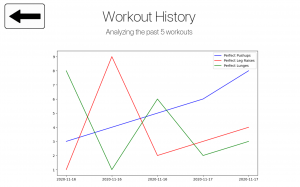

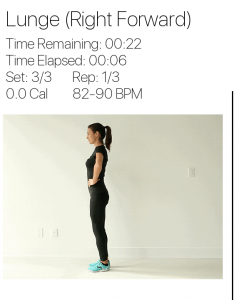

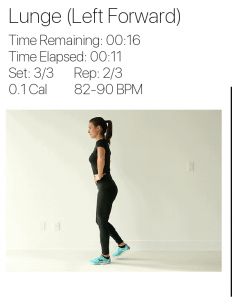

This week I made a lot of progress that has made the overall project much more robust. I started the week by refining last week’s changes for displaying the feedback from the FPGA. It turned out that when the entire project ran together, with the hardware as well as the signal processing, the feedback arrived slightly late, especially for pushups. I edited the GIFs and reimplemented the workout timing so that the final rep of a set leaves more time remaining, letting the user read their feedback before moving on to the next workout. After fixing the timing for the different workouts, pushups in particular, I moved on to implementing a second type of lunge and fixing the lunge GIF.
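The timing change for the final rep can be sketched roughly as follows. This is only an illustration, not our actual code: the function name `rep_durations` and the parameters `rep_s` and `final_extra_s` are hypothetical, and the real values depend on the length of each workout GIF.

```python
def rep_durations(reps, rep_s, final_extra_s):
    """Return the time budget (in seconds) for each rep of a set.

    Every rep gets rep_s seconds; the last rep gets final_extra_s
    additional seconds so the feedback stays on screen long enough
    to be read. All names and values here are hypothetical.
    """
    if reps <= 0:
        return []
    durations = [rep_s] * reps
    durations[-1] += final_extra_s  # pad only the final rep
    return durations


# Example: a set of 3 pushups at 2 s per rep, with 1.5 s of extra
# reading time on the last rep.
schedule = rep_durations(3, 2.0, 1.5)
```

Padding only the last rep keeps the pace of the set unchanged while still giving the delayed feedback time to show up.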

We originally had the lunge implemented only with the right leg forward, but we changed it so that two types of lunges are shown, including a version with the left leg forward. With Venkata’s help I swapped in new GIFs, since the old ones were too pixelated and did not fit cohesively into the user interface. To accommodate both types of lunge I had to refactor some of the code and implement new logic.

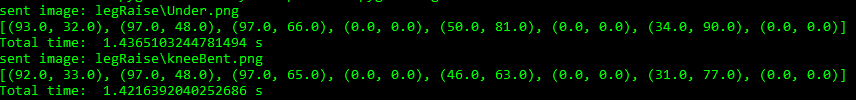

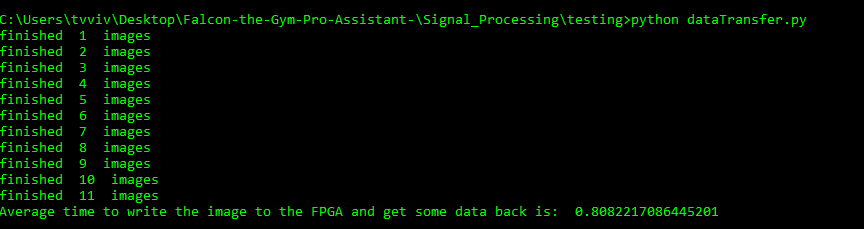

I also made sure that images are captured periodically and that their scaling and coloring are correct. The frames from OpenCV came out blue-tinted (OpenCV returns frames in BGR channel order), so I had to convert them before Albert’s signal processing could consume them. Until now we had been using photos from a saved folder, taken with an external webcam application.
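A minimal sketch of the periodic capture and color conversion described above, assuming the signal processing expects RGB frames. The names `bgr_to_rgb` and `capture_frames`, the 640x480 size, and the one-second interval are hypothetical and not taken from our code. Reversing the channel axis with NumPy gives the same result as `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)`.

```python
import time

import numpy as np


def bgr_to_rgb(frame: np.ndarray) -> np.ndarray:
    """Reverse the channel axis of an H x W x 3 frame (BGR to RGB)."""
    return np.ascontiguousarray(frame[:, :, ::-1])


def capture_frames(count, interval_s=1.0, size=(640, 480), device_index=0):
    """Grab `count` frames from the webcam, roughly one every interval_s.

    size is (width, height); all defaults here are assumptions.
    """
    import cv2  # imported lazily so bgr_to_rgb works without a camera

    cap = cv2.VideoCapture(device_index)
    if not cap.isOpened():
        raise RuntimeError("could not open webcam")

    frames = []
    try:
        next_due = time.monotonic()
        while len(frames) < count:
            ok, frame = cap.read()  # read continuously to avoid stale frames
            if not ok:
                break
            if time.monotonic() >= next_due:
                frame = cv2.resize(frame, size)    # scale to the expected size
                frames.append(bgr_to_rgb(frame))   # fix the channel order
                next_due += interval_s
    finally:
        cap.release()  # always free the camera
    return frames
```

Reading frames continuously and keeping only the ones that fall on the interval means each saved frame is recent rather than one that sat in the camera buffer.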

I wrapped up the week working with Albert and Venkata to record workout footage for our final demo, since we will all be heading home next week for Thanksgiving. We ran into some integration issues, so I spent a little time cleaning up those bugs.

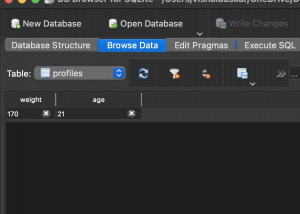

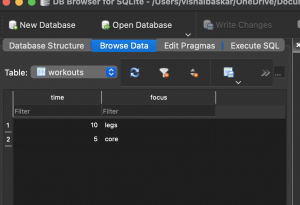

In the upcoming weeks I will build the main menu, which will connect all our different pages, and then integrate a profile customization screen.