Last week, I was able to program the FPGA to stream information to the CPU via UART. I then made a couple of small changes so that I could also stream information from the CPU to the FPGA. This worked well on small sets of values but had issues when I tried to stream large sets of values such as an image. The cause is that the input buffer of the UARTLite IP block holds only 16 bytes, so the device has to read from the buffer at an appropriate rate to ensure that we don't lose information. I looked into different ways of reading the data, such as an interrupt handler and frequent polling, and eventually got an implementation that stores all of the information correctly. Attached is an image where I echoed 55000 digits of Pi, confirming that I can use UART in both directions and store the received information.

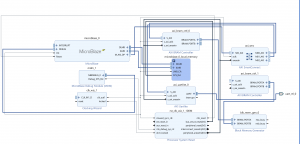

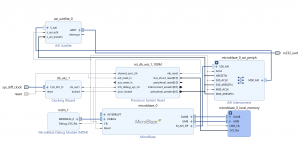
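To put a number on "an appropriate rate" for the 16-byte buffer, here is a rough back-of-the-envelope sketch. It assumes 8N1 framing (10 bits on the wire per byte) and a sender that streams continuously. The baud rates listed are only illustrative, and the function names are hypothetical helpers, not part of any Xilinx API.

```python
def fifo_fill_time_s(baud_rate, fifo_bytes=16, bits_per_frame=10):
    """Seconds for a continuous stream to fill an empty receive FIFO.

    bits_per_frame=10 assumes 8N1 framing (1 start + 8 data + 1 stop bit).
    """
    return fifo_bytes * bits_per_frame / baud_rate


def transfer_time_s(n_bytes, baud_rate, bits_per_frame=10):
    """Minimum seconds needed to move n_bytes across the link."""
    return n_bytes * bits_per_frame / baud_rate


if __name__ == "__main__":
    # Illustrative baud rates only; the project's target rate is not assumed.
    for baud in (9600, 115200, 921600):
        deadline_us = fifo_fill_time_s(baud) * 1e6
        echo_s = transfer_time_s(55000, baud)
        print(f"{baud:>7} baud: drain FIFO within {deadline_us:9.1f} us, "
              f"55000 bytes take at least {echo_s:6.2f} s one way")
```

The first number is the longest the receiver can go without reading before a full FIFO starts dropping bytes, so whichever method is used (interrupt handler or polling loop) has to service the UART more often than that.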

In terms of the block diagram, I realized that the local memory of the MicroBlaze is configurable and is built from BRAMs. So, I simplified the design to the following and tried to store all of the information in the MicroBlaze's local memory.

However, I kept running into issues where the binaries would not be created if I used too much memory. I am checking whether I am using the memory incorrectly or whether we need to downscale the image slightly more (an option I have already discussed with my teammates).
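One way to sanity-check the memory question before rebuilding is a simple budget calculation. The sketch below assumes the failure comes from the image buffer plus code, stack, and heap not fitting in the local memory region, which I have not confirmed. The memory size, the reserved amount, and the image sizes are all hypothetical placeholders, not our actual configuration.

```python
def image_bytes(width, height, bytes_per_pixel=1):
    """Size of an uncompressed image buffer in bytes."""
    return width * height * bytes_per_pixel


def remaining_local_memory(local_mem_bytes, reserved_bytes,
                           width, height, bytes_per_pixel=1):
    """Bytes left after the image buffer; negative means it does not fit.

    reserved_bytes is a guess at what code, stack, and heap need.
    """
    return (local_mem_bytes - reserved_bytes
            - image_bytes(width, height, bytes_per_pixel))


if __name__ == "__main__":
    LOCAL_MEM = 128 * 1024   # hypothetical local memory size
    RESERVED = 32 * 1024     # hypothetical code + stack + heap budget
    # Hypothetical grayscale image sizes (1 byte per pixel).
    for width, height in ((640, 480), (320, 240), (160, 120)):
        left = remaining_local_memory(LOCAL_MEM, RESERVED, width, height)
        verdict = "fits" if left >= 0 else "does NOT fit"
        print(f"{width}x{height}: {verdict} ({left} bytes remaining)")
```

Plugging in the real local memory size and the section sizes reported by the build should show quickly whether downscaling is unavoidable or whether the memory is just being used inefficiently.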

Finally, another issue that arose was related to the baud rate. Different baud rates require different clock frequencies. As I was creating different block diagrams, the design would sometimes fail to meet the target clock frequency and violate timing. In the image above with the digits of Pi, I was able to use our target baud rate.
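The link between baud rate and clock frequency can be sketched with a small calculation. This assumes the UART derives its bit timing by dividing the input clock with an integer divider and 16x oversampling, which is my understanding of the UARTLite but should be checked against the product guide. The clock frequency and baud rates below are illustrative, and `baud_error` is a hypothetical helper.

```python
def baud_error(clock_hz, target_baud, oversample=16):
    """Return (divisor, actual_baud, relative_error) for an integer divider.

    Assumes baud = clock_hz / (oversample * divisor) with divisor >= 1.
    """
    ideal = clock_hz / (oversample * target_baud)
    divisor = max(1, round(ideal))
    actual = clock_hz / (oversample * divisor)
    return divisor, actual, (actual - target_baud) / target_baud


if __name__ == "__main__":
    CLOCK_HZ = 100_000_000  # illustrative clock, not necessarily ours
    for baud in (9600, 115200, 921600):
        divisor, actual, err = baud_error(CLOCK_HZ, baud)
        print(f"{baud:>7} baud: divisor {divisor:>4}, "
              f"actual {actual:10.1f}, error {err * 100:+.2f}%")
```

The higher the baud rate, the smaller the divisor and the coarser the steps between achievable rates, so a clock that works at a low baud rate can be several percent off at a high one. That is why changing the baud rate can force a different clock frequency, which in turn affects whether the rest of the design meets timing.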

In terms of the schedule, I was hoping to have most of the design done but ran into quite a few issues 🙁. I have addressed this with my teammates, and by next week I plan to be able to stream an image to the FPGA and receive the information back (at a potentially smaller image size). I will also finish the implementation of the image processing portion with the Vitis Vision library. After that, during the weeks that were allocated for slack, I will try to optimize the design so that it can use a high baud rate and the entire image.