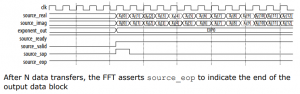

This week I looked into how we would implement frequency filtering and pitch shifting. I’ve been reading through this document on an FPGA implementation of the FFT to get an idea of how we would build this in hardware. I’ve also been reading up on signal processing theory to work out how to make this block both efficient and high-quality, meaning low latency while still preserving low frequencies.

What I’ve found is that there is a fundamental trade-off between the latency we introduce into our pipeline and the lowest frequency we can preserve in our signal: the lower the frequency, the larger the delay. This follows from the Rayleigh frequency. A window of duration T cannot resolve frequencies below roughly 1/T, so representing a low frequency properly means collecting a longer window of samples, and collecting those samples introduces delay.

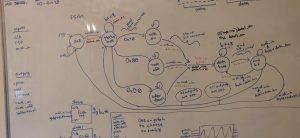

To minimize delay through the pipeline but still preserve the lower frequencies, we should use a window size of 512 points. The total delay through the pipeline would be ~20ms, and we would be able to capture frequencies as low as 94 Hz. Here are some of my notes for this calculation:
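As a quick sanity check on these numbers, here is a minimal Python sketch of the window-size trade-off. It assumes a 48 kHz sample rate, which is not stated above but is what the 512-point / 94 Hz pairing implies. `SAMPLE_RATE_HZ` and `window_tradeoff` are names made up for this example.

```python
# Assumption: 48 kHz sample rate (inferred from 512 points <-> ~94 Hz, not stated in the post).
SAMPLE_RATE_HZ = 48_000


def window_tradeoff(n_points, sample_rate_hz=SAMPLE_RATE_HZ):
    """Return (time to fill the window in ms, Rayleigh frequency in Hz)."""
    window_s = n_points / sample_rate_hz   # time needed to collect one full window
    rayleigh_hz = 1.0 / window_s           # lowest resolvable frequency, 1/T = fs/N
    return window_s * 1e3, rayleigh_hz


if __name__ == "__main__":
    for n in (128, 256, 512, 1024, 2048):
        fill_ms, lowest_hz = window_tradeoff(n)
        print(f"{n:5d} points: {fill_ms:6.2f} ms to fill, lowest frequency ~{lowest_hz:7.2f} Hz")
```

At the assumed rate, 512 points take about 10.7 ms to collect and give a Rayleigh frequency of 93.75 Hz. The ~20 ms total is close to two window lengths, which would be consistent with filling one window on the way in and another on the way out, though that breakdown is an assumption of this sketch.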

Alternatively, we can handle filtering and pitch shifting in the time domain. For filtering, we could specify an arbitrary filter response in the frequency domain and then use the IFFT to find its impulse response. Then it would be a simple matter of convolving the signal with that impulse response as an FIR (Finite Impulse Response) filter. This method should introduce a smaller amount of delay for the same frequency resolution. For pitch shifting, we could use the Synchronous Overlap-Add method (SOLA).
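To make the filtering idea concrete, here is a minimal NumPy sketch of designing an FIR filter from a desired frequency response via the inverse FFT. Everything specific in it is an assumption for illustration: the 48 kHz sample rate, the 511-tap length (odd, so the filter has an exact center tap), the 4 kHz low-pass target, the Hamming window used to tame ripple, and the helper names `design_fir`, `lowpass_4k`, and `gain_at`.

```python
import numpy as np

SAMPLE_RATE_HZ = 48_000  # assumption: not stated in the post
N_TAPS = 511             # assumption: odd length, close to the 512-point FFT window


def design_fir(desired_gain, n_taps=N_TAPS, sample_rate_hz=SAMPLE_RATE_HZ):
    """Design a linear-phase FIR filter by sampling a desired magnitude response.

    desired_gain maps an array of frequencies in Hz to linear gains.
    """
    freqs_hz = np.fft.rfftfreq(n_taps, d=1.0 / sample_rate_hz)
    magnitude = desired_gain(freqs_hz)
    # A real, zero-phase spectrum gives an impulse response symmetric about index 0.
    h = np.fft.irfft(magnitude, n=n_taps)
    # Rotate the peak to the middle so the filter is causal, then taper the ends.
    h = np.roll(h, (n_taps - 1) // 2)
    return h * np.hamming(n_taps)


def lowpass_4k(freqs_hz):
    """Hypothetical target response: pass everything up to 4 kHz."""
    return np.where(freqs_hz <= 4_000.0, 1.0, 0.0)


def gain_at(h, freq_hz, sample_rate_hz=SAMPLE_RATE_HZ):
    """Magnitude of the filter's frequency response at a single frequency."""
    n = np.arange(len(h))
    return abs(np.sum(h * np.exp(-2j * np.pi * freq_hz * n / sample_rate_hz)))


h = design_fir(lowpass_4k)

if __name__ == "__main__":
    delay_ms = (N_TAPS - 1) / 2 / SAMPLE_RATE_HZ * 1e3
    print(f"group delay: {delay_ms:.2f} ms")
    print(f"gain at 1 kHz: {gain_at(h, 1_000.0):.4f}")
    print(f"gain at 12 kHz: {gain_at(h, 12_000.0):.6f}")
    # Filtering is then a plain convolution: y = np.convolve(x, h)
```

Because the taps are symmetric, the filter delays every frequency by the same (N_TAPS - 1) / 2 = 255 samples, about 5.3 ms at the assumed sample rate. That is roughly half the time it takes to fill a 512-point window, which is where the latency advantage would come from.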

The final implementation of this effect will warrant a larger discussion about which trade-offs we want to make.