Here’s the PDF: Final Poster PDF (compressed to be able to upload to WordPress, for a full size see Google Drive link)

Here’s the PPTX: Final Poster PPTX (images are compressed to be able to upload to WordPress, for full size see Google Drive link)

Carnegie Mellon ECE Capstone, Spring 2024 – Johnny Tian, Jack Wang, Jason Lu

Out of the three deliverables from the last status update, I made progress on two (in orange) but did not finish them. Most of my time was spent getting everything working on the bicycle.

The main deliverables (aside from the class assignments such as video, report, etc.) for this week are essentially the same as last week, except changing “complete more tests” to “complete all tests”:

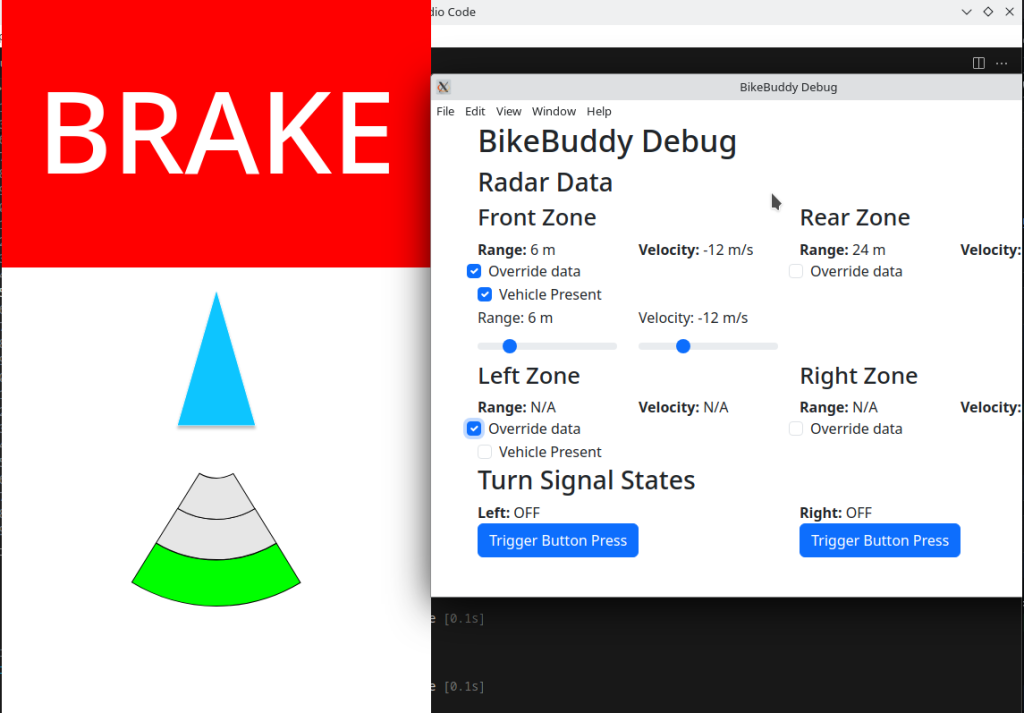

Here’s a more detailed rundown of what the main changes were to the UI since the last update:

As a recap of what this is for: When we were testing, we noticed that the system is very “flickery” in the sense that the blind spot indicators, range indicators, and alerts can change their values very rapidly. For example, the blind spot indicators would show up and disappear pretty quickly which is not a good experience for the user.

I implemented some throttling last cycle, but this week I added an additional change to only show the blind spot indicators if the vehicle is moving towards you (velocity is negative). This seemed to further reduce the number of false blind spot indications.
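As a rough sketch of that filter (written in Python for brevity; the actual UI code is JavaScript, and the `Detection` type and its field names are hypothetical), the check only needs the sign of the relative velocity:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    """One vehicle reported by the radar for a side lane (hypothetical shape)."""
    distance_m: float     # range to the vehicle
    velocity_mps: float   # relative velocity; negative means it is closing in


def should_show_blind_spot(detection: Optional[Detection]) -> bool:
    """Show the indicator only for a vehicle that is moving towards the bicycle."""
    if detection is None:
        return False
    return detection.velocity_mps < 0
```

A vehicle that is falling behind (positive velocity) or a lane with no detection leaves the indicator off.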

I also disabled the forward/rear collision warnings as they are too sensitive right now. We will need to discuss whether this is a feature we want to keep.

There was a lot of continued frustration this past week getting the magnetometer to work correctly. Despite doing things like comparing my code to what Adafruit is doing in their library and trying different functions in my I2C library, nothing got my code to work.

However, I noticed that with the calibration data, using Python and Adafruit’s Python library for the MMC5603 actually gives good results! I’m still mystified as to why their code works and mine doesn’t, and I suspect that I’ll need a logic analyzer to see the I2C messages to figure out why.

So instead, my new approach is to write another Python script to talk to the magnetometer using Adafruit’s library, and then use a pipe (like we’re doing with the radar data) to send it over to the JavaScript UI code.
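A minimal sketch of what such a script could look like, assuming Adafruit's `adafruit_mmc56x3` Python library and a named pipe that the UI reads from. The message fields (`x`, `y`, `z`), the helper names, and the update interval are assumptions for illustration, not the project's actual format:

```python
import json
import time


def format_reading(x, y, z):
    """Encode one magnetometer sample as a single-line JSON string."""
    return json.dumps({"x": x, "y": y, "z": z})


def stream_readings(read_magnetic, out, interval_s=0.1, count=None):
    """Write samples to `out`, one JSON object per line, flushing each one.

    `read_magnetic` is any callable returning an (x, y, z) tuple.
    `count=None` means run until interrupted.
    """
    sent = 0
    while count is None or sent < count:
        x, y, z = read_magnetic()
        out.write(format_reading(x, y, z) + "\n")
        out.flush()  # push each sample out immediately instead of buffering
        sent += 1
        if count is None or sent < count:
            time.sleep(interval_s)


def main(pipe_path):
    # Hardware imports live here so the helpers above work without a sensor attached.
    import board
    import adafruit_mmc56x3

    sensor = adafruit_mmc56x3.MMC5603(board.I2C())
    with open(pipe_path, "w") as pipe:
        stream_readings(lambda: sensor.magnetic, pipe)
```

Calling `main()` with the path of a pipe created by `mkfifo` would start streaming; the path itself is up to the project.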

Out of the two deliverables from the last status update, I completed one (in green) and did not finish the other one (in red).

The deliverables for this week are as follows:

As you’ve designed, implemented and debugged your project, what new tools or new knowledge did you find it necessary to learn to be able to accomplish these tasks?

Here are some tools that I had to learn for this project:

Some other specific knowledge I got included:

What learning strategies did you use to acquire this new knowledge?

In general for tools, I learned things very informally (e.g., without watching a tutorial or reading a textbook). For Figma, I essentially just messed around with it and looked up things as needed. Figma’s official documentation was useful along with videos.

For Electron, I used their documentation and Googled things when I got stuck. Reading GitHub issues and Stack Overflow answers was also very helpful.

For learning how to use the magnetometer, reading the MMC5603 datasheet and looking at Adafruit’s C++ library implementation were really helpful. And of course, lots of trial and error. For calibration, reading blog posts and seeing sample code proved to be very useful.

For Raspberry Pi-related issues, I read posts from people’s blogs, the Raspberry Pi forums, questions and answers on the StackExchange network, and the documentation.

Here’s a more detailed rundown of what the changes were to the UI since the last update:

The implementation work was done by the previous status report, but in these two weeks Jack got the front radar working and we verified that radar and UI integration is working for both front and rear radars.

When we were testing, we noticed that the system is very “flickery” in the sense that the blind spot indicators, range indicators, and alerts can change their values very rapidly. For example, the blind spot indicators would show up and disappear pretty quickly which is not a good experience for the user.

To fix this, I implemented a deactivation delay for the blind spot indicator where each blind spot indicator must stay activated for at least 2 seconds after the last detection of a vehicle in the side lane before it is allowed to deactivate.
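The deactivation delay can be sketched roughly as follows (in Python for brevity; the real UI code is JavaScript, and the class and method names here are hypothetical). The indicator remembers the time of the last detection and stays on until 2 seconds have passed since then:

```python
class BlindSpotIndicator:
    """Keeps the indicator lit for a hold period after the last detection."""

    def __init__(self, hold_seconds: float = 2.0):
        self.hold_seconds = hold_seconds
        self._last_detection = None  # timestamp of the most recent detection

    def update(self, vehicle_detected: bool, now: float) -> bool:
        """Feed one radar update; returns True if the indicator should be lit."""
        if vehicle_detected:
            self._last_detection = now
        if self._last_detection is None:
            return False
        # Stay on until hold_seconds have elapsed since the last detection.
        return (now - self._last_detection) < self.hold_seconds
```

Passing `now` in explicitly (rather than reading the clock inside the class) keeps the logic easy to test with made-up timestamps.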

For the range indicator, I ended up implementing something akin to a throttling system where updates to the range indicator are throttled to 1 Hz (although in testing it before writing this I think there might be a slight bug that occasionally allows faster updates to go through).
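One simple way to express that kind of throttling is sketched below (Python for brevity, hypothetical names; this is not the project's actual code and may differ from how the UI handles dropped updates). An update is accepted only if at least one second has passed since the last accepted one:

```python
class Throttle:
    """Accepts at most one update per min_interval_s; extra updates are dropped."""

    def __init__(self, min_interval_s: float = 1.0):
        self.min_interval_s = min_interval_s
        self._last_accepted = None  # timestamp of the last accepted update
        self.value = None           # value currently shown by the indicator

    def offer(self, new_value, now: float) -> bool:
        """Return True if new_value was accepted and should be displayed."""
        if (self._last_accepted is not None
                and now - self._last_accepted < self.min_interval_s):
            return False
        self._last_accepted = now
        self.value = new_value
        return True
```

The tradeoff with dropping updates outright is that the displayed range can be up to one second stale; a variant could remember the most recent dropped value and apply it when the interval expires.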

The collision warnings are still too sensitive right now. I’ll have to think of some way to make them less sensitive.

In the last status report, I talked about an issue where rotating the magnetometer 90 degrees physically only reports 60 degrees. I spent a significant amount of time the last two weeks trying to calibrate the magnetometer to see if I could improve this.

In my first attempt, I used some Python calibration code that was modified from code on this Adafruit page (plotting code taken from this Jupyter notebook from Adafruit) to determine the hard iron offsets. That gave me some calibration values, but when I applied them in code the magnetometer behaved even worse: rotating it by a lot only changed the heading by a few degrees.
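For context, a common way to estimate hard iron offsets is to rotate the sensor through as many orientations as possible and take the midpoint between the minimum and maximum reading on each axis. The sketch below illustrates that general technique; it is not the code from the Adafruit page, and the function names are made up:

```python
def hard_iron_offsets(samples):
    """Estimate per-axis offsets from (x, y, z) samples taken while rotating.

    The offset for each axis is the midpoint between its min and max reading.
    """
    xs, ys, zs = zip(*samples)
    return tuple((max(axis) + min(axis)) / 2 for axis in (xs, ys, zs))


def apply_hard_iron(reading, offsets):
    """Subtract the offsets so readings are centered around zero."""
    return tuple(value - offset for value, offset in zip(reading, offsets))
```

With good data, plotting the corrected X/Y, Y/Z, and X/Z pairs should give circles centered on the origin.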

Then, I attempted to perform a more advanced calibration using a program called MotionCal (which calculates both hard and soft iron offsets). I spent a decent amount of time getting it to build on the RPi 4 and modifying the code to take input from my Python script that interfaces with the magnetometer instead of reading from a serial port as MotionCal originally expects. This StackOverflow answer had code that I used for bridging between my Python script and MotionCal. My original idea was to create a virtual serial port that MotionCal could use directly so I didn’t have to modify MotionCal, but it turns out this solution didn’t create the type of device MotionCal wanted, so I ended up having to modify MotionCal too. In the end, I effectively ended up piping data from the Python script to MotionCal.

I also used this StackExchange answer that had sample code that was helpful in determining what to send from the Python script and in what format (e.g., multiplying by 10 for the raw values) for MotionCal to work, and it was also helpful for learning how to apply the soft iron offsets once I obtained them from MotionCal.
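As a sketch of how such calibration values are typically applied (assuming the usual convention of a 3-element hard iron offset vector and a 3x3 soft iron matrix; the function name is hypothetical and this is not the code from the linked answer), the offset is subtracted first and the result is multiplied by the matrix:

```python
def apply_calibration(raw, hard_iron, soft_iron):
    """Return soft_iron @ (raw - hard_iron) for one (x, y, z) reading.

    raw, hard_iron: 3-element sequences in the same units.
    soft_iron: 3x3 matrix given as three rows of three numbers.
    """
    centered = [r - h for r, h in zip(raw, hard_iron)]
    return tuple(
        sum(row[i] * centered[i] for i in range(3))
        for row in soft_iron
    )
```

An identity matrix leaves only the hard iron correction in effect, which is a handy sanity check when the soft iron values are suspect.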

With all these changes, I still didn’t have any improvement – rotating the magnetometer a lot would not result in a significant change in the heading :(. So, as of this writing, the magnetometer is still not functional as a compass. Oddly enough, reverting my changes seemingly no longer allows the magnetometer to work as before all this (where rotating 90 degrees is needed to report 60 degrees). I’ll need to spend some time before the demo getting this fixed, but I may just use my workaround mentioned in the last status report instead of trying to get calibration to work.

Personally, I’m starting to think that something’s wrong with our magnetometer. The plots I get when calibrating are very different from what Adafruit gets (see animation in top left corner), where my code draws three circles very far apart instead of overlapping like they get.

It turns out I actually have an IMU (MPU6050) from a previous class that I took so I could’ve used that instead of the magnetometer, but I think at this point it may be too late to switch. I’ll have to look into how much effort it’ll take to get the IMU up and running instead, but from what I remember from trying to use the IMU to calculate heading in my previous class, I will also need to do calibration for it too which might be difficult.

For details, please see the “State of the UI” section below.

Out of the two deliverables from last week, I partially completed one (in orange) and did not make any progress on the last task (in red).

The only real work remaining for the turn signal stuff on my end is to mitigate the issue where we need to physically turn the compass 90 degrees for it to report a change of 60 degrees – I have a potential workaround (see below).

However, I am behind on the radar implementation task. I will check with Jack on whether this is something he can take on, since theoretically we can reuse the stuff he does for the RCW specifically for the FCW. I’m also realizing it is somewhat ill-defined what radar implementation is – I think a good definition at this point is to have code to filter the points from the radar, identify which of them correspond to a vehicle directly in front (for FCW), and send the distance and relative velocity of that car to the UI.

The deliverables for this week are similar to last week’s, except changing “radar implementation” to “radar and UI integration”.

Here’s a more detailed rundown of what the state of the UI is as of the time of this writing.

I implemented code to drive the actual turn signal lights through GPIO pins which in turn control transistor gates. There is a minor issue where the on-screen turn signal indicator isn’t synchronized with the physical turn signal light, but for now it should be OK.

For our turn signal auto-cancellation system, we are using the Adafruit MMC5603 Triple-Axis Magnetometer as a compass. As a quick refresher, the idea of using the compass is that when we activate a turn signal, we record the current bicycle heading. Then, once the bicycle turns beyond a certain angle (currently targeting ±60 degrees of the starting heading), we automatically turn off the turn signals.
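The auto-cancellation idea can be sketched like this (Python for brevity; the UI is written in JavaScript, and all names here are hypothetical). The main subtlety is that headings wrap around at 360 degrees, so the difference has to be normalized before comparing it to the threshold:

```python
def angle_difference(a_deg, b_deg):
    """Smallest signed difference a - b in degrees, in the range [-180, 180)."""
    return (a_deg - b_deg + 180.0) % 360.0 - 180.0


class TurnSignalAutoCancel:
    """Turns the signal off once the heading has changed by threshold_deg."""

    def __init__(self, threshold_deg=60.0):
        self.threshold_deg = threshold_deg
        self.start_heading = None  # None means the signal is off

    def activate(self, heading_deg):
        """Record the heading at the moment the turn signal is switched on."""
        self.start_heading = heading_deg

    def update(self, heading_deg):
        """Feed a new heading; returns True if the signal is still on."""
        if self.start_heading is None:
            return False
        turned = abs(angle_difference(heading_deg, self.start_heading))
        if turned >= self.threshold_deg:
            self.start_heading = None  # auto-cancel
        return self.start_heading is not None
```

For example, a signal activated at a heading of 350 degrees stays on at 45 degrees (a 55 degree change) and cancels at 50 degrees (a 60 degree change).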

Adafruit provides libraries for Arduino and Python, but since the UI code is written in JavaScript, there weren’t any pre-written libraries. Therefore, I ended up writing my own code to interface with it.

As mentioned last week, I’m using the i2c-bus library to communicate with the MMC5603 chip over I2C. With help from the MMC5603 datasheet and Adafruit’s C++ library for the MMC5603, we now have support for obtaining the raw magnetic field measurements from the MMC5603.

To convert those raw readings into a heading, I implemented the algorithm described in this Digilent article.
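A common way to turn horizontal magnetic field components into a heading is with `atan2`, sketched below. This is an illustration of the general technique and may not match the article's formula exactly; it assumes the sensor is held level, and which axis points "forward" and the sign convention depend on how the sensor is mounted:

```python
import math


def heading_degrees(x, y):
    """Heading in [0, 360) degrees from the horizontal-plane field components."""
    return math.degrees(math.atan2(y, x)) % 360.0
```

The modulo maps the negative half of `atan2`'s output range onto 180 to 360 degrees, so headings never come out negative.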

There is an issue where rotating the magnetometer reports 60 degrees of angle change only when it’s actually rotated 90 degrees. We can work around this in code right now by setting a lower threshold to auto-cancel the turn signal (perhaps 40 degrees so it’ll turn off when the bicycle rotates 60 degrees physically), but we may need calibration. From what I briefly read online though, this won’t be a simple process.

I also implemented the ability to override the bike angle to some fake angle in the debug menu. Here’s a video I recorded (a CMU account is required) showing the overriding angle feature and the turn signal auto-cancellation.

The UI side is ready to receive real radar data! The general overview is that Jack’s code will do all the data processing, identify one vehicle each in front, behind, left, and right, and send their distances and velocities in a JSON string to my program. We will be using named pipes, created using mkfifo.

On the UI side, I based my code off of this StackOverflow answer. There was a slight hiccup initially when we were testing passing data from Python to JavaScript: the Python side seemed to buffer multiple JSON strings before actually sending them over the pipe. This crashed the JavaScript side, because it received a single string with multiple objects at the top level, which isn’t valid JSON. We fixed this by inserting a flush call after sending each string on the Python side.
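A sketch of the sending side with the flush, plus one way a reader could be made robust to several messages arriving in a single chunk. The newline delimiter and the `MessageReader` helper are assumptions added for illustration (the fix described above was only the flush), and the real reader lives in the JavaScript UI:

```python
import json


def send_message(pipe, message):
    """Write one JSON object per line and flush so it is sent immediately."""
    pipe.write(json.dumps(message) + "\n")
    pipe.flush()


class MessageReader:
    """Splits incoming text into complete newline-delimited JSON messages."""

    def __init__(self):
        self._pending = ""  # trailing partial line carried over between chunks

    def feed(self, chunk):
        """Add a chunk of text; return the list of messages completed by it."""
        self._pending += chunk
        *lines, self._pending = self._pending.split("\n")
        return [json.loads(line) for line in lines if line.strip()]
```

With a delimiter, two objects delivered together are parsed as two messages instead of failing as one invalid JSON string, and a message split across chunks is held until its newline arrives.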

For details, please see the “State of the UI” section.

Out of the three deliverables from last week, I completed one (in green), started a task (in orange), and did not make any progress on the last task (in red).

For the turn signal controls, the remaining tasks are:

I am behind on the turn signal and radar tuning tasks. For the necessary schedule adjustments, please see the team posts. Most likely this week I will continue to prioritize the turn signal implementation work.

The deliverables for this week are similar to last week’s, except with the removal of the UI implementation task and changing “radar tuning” to “radar implementation”.

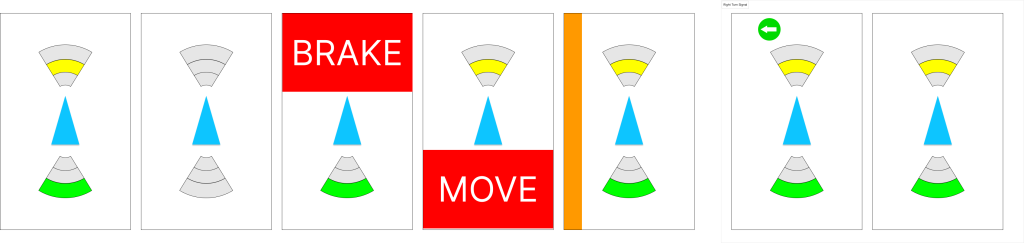

Since the update last week, the UI has received the following major updates:

There was quite an adventure with trying to get GPIO stuff to work with Node.JS. After trying a few different libraries, I settled on using the onoff library.

There are a couple of nuances with using this library:

I’ve also started work on implementing the magnetometer (MMC5603). Since there’s no JavaScript library for this, I’m implementing the code using the datasheet and the i2c-bus library. Currently, I’m still working on the initialization code.

I was unable to complete any tasks from this week:

I’m close to completing the UI. The remaining tasks on my plate (at least those needed for integration) are:

With respect to the UI itself, I am currently on track although there is a risk of slippage depending on how long these remaining tasks take. According to the schedule, I have until tomorrow to complete the visualizations, so there isn’t much time left.

Because of the delay in starting the other tasks, I’ve shifted the dates for the radar tuning (forward collision tuning) and turn signal implementation to 3/23 – 3/29. This has not resulted in any delays, but further delays (which is a high risk!) in these tasks run the risk of delaying the overall electrical/software integration such that it results in a cascade of delays downstream. I’m hoping that I can make good progress on these during the lab times this week.

The main deliverables for this week are similar to last week except for removing integration with Jack’s radar code since that task doesn’t start until 3/30, and changing “start” to “complete” for turn signal implementation and radar tuning since their deadlines are soon:

Please see this video I recorded for the current state of the UI: Google Drive link (you will need a CMU account to see this).

Out of the 4 targets from the last update, I’ve completed 2 tasks (highlighted in green) and 2 tasks are unstarted (highlighted in red).

With respect to the software side of the UI, I am currently on track. However, I am behind with respect to the turn signal control software and radar tuning, as both should have been started at this point. I plan to discuss the radar timeline with the rest of the team, as I may not have time this upcoming week to tune the radar.

The main deliverables for this week are:

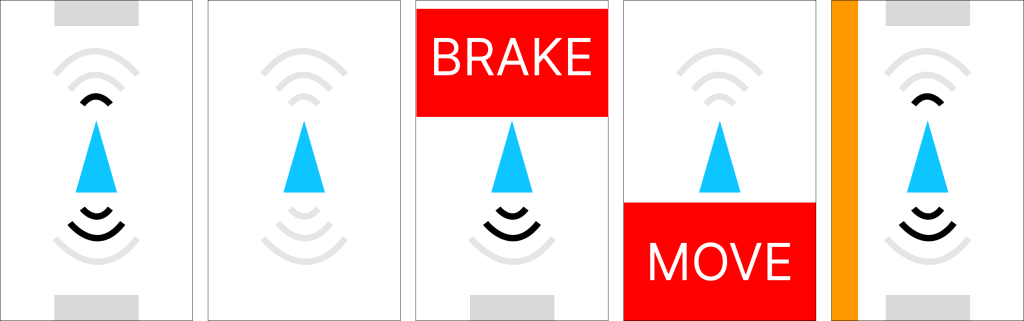

After last week, I realized that the range indicator for a bicycle which looked similar to a wifi indicator could be ambiguous as to the meaning. For example, if the range indicator is full strength, does that mean the car is near or far?

Therefore, I redesigned the range indicator to be similar to what Toyota has for their parking assist proximity sensing system (see “Distance Display” under this page from the 2021 Venza manual). Under the new system, an arc will light up corresponding to the range of the detected car, with further arcs indicating a longer distance. The color of the arc will also serve as a visual cue, with green indicating far, yellow indicating medium, and red indicating close range.
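The mapping from distance to arc and color could look roughly like the sketch below (Python for brevity; the three-arc layout follows the green/yellow/red description above, but the distance thresholds and the function name are made-up placeholders, not the project's actual values):

```python
def range_arc(distance_m, near_m=5.0, mid_m=15.0, max_m=30.0):
    """Map a distance to (arc_index, color), or None if nothing should light up.

    Arc 0 is the arc closest to the bicycle; further arcs mean longer distances.
    The threshold defaults are placeholders for illustration only.
    """
    if distance_m < 0 or distance_m > max_m:
        return None
    if distance_m <= near_m:
        return (0, "red")
    if distance_m <= mid_m:
        return (1, "yellow")
    return (2, "green")
```

Tying position and color together means the meaning stays unambiguous even at a glance: a red arc next to the bicycle always means a close vehicle.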

Other changes this week include:

For comparison, here’s the old design:

And here’s the new design:

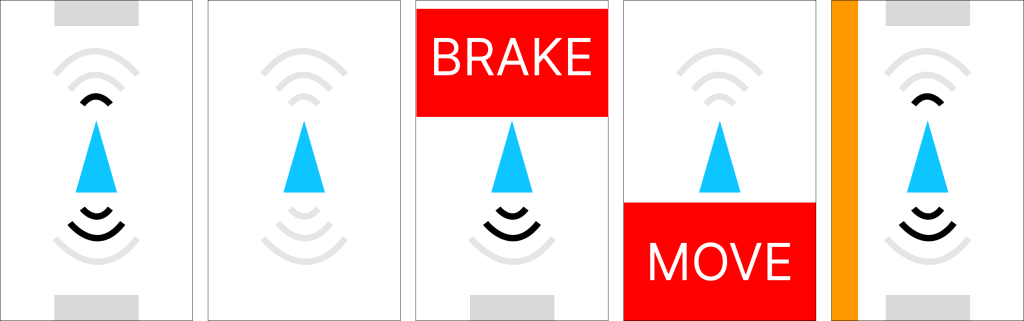

From left to right, the screens display:

Future work will include adding a hazard toggling button and perhaps a debug panel or some other way to show debug information.

Here’s the state of the UI at the time of writing:

The icons are exported from Figma, and the flashing turn signals are driven by the turn signal application logic.

Out of the 2 targets from the last update, I haven’t completed either (highlighted in red):

I am a little behind as I haven’t started on the UI implementation nor completed the UI mockup. I aim to complete the mockup and start the implementation this week.

Therefore, I needed to extend the due date of the mockup from 2/28 to 3/13 (which also pushes the UI implementation start date back), along with changing the UI implementation end date from 3/15 to 3/24 as I’m not confident I can finish it in the original time frame of 1 week (the new allocated time is 1 + 1/2 weeks). This should be OK as I had extra slack time anyways, so there aren’t any delays due to this. There are a lot of tasks going on now so there is a risk of further slippage, but for now this is the plan.

The main deliverables for this week are:

I built the UI mockup using Figma. I’d heard of Figma but had never used it before; thankfully, it seems quite easy to get up and running. Here’s a picture showing mockups of various scenarios:

Note that the UI uses portrait orientation and is inspired in part by Tesla’s visualization (a little bit like the image under “Driving Status” in the Tesla Model Y Owner’s Manual). From left to right, the screens display:

Out of the 5 targets from last week, I’ve completed 4 of them (green text).

I originally slipped behind a bit according to the schedule as software bringup was supposed to be done by 02/21 and I only finished it on 02/24. Nonetheless, I am on schedule as of this moment again, although there is a risk of slipping behind a bit if I don’t finish the UI mockup by 02/28.

The main deliverables for this week are:

I ruled out using a web app simply because we would need to interface with hardware which is more difficult with web apps. I’d need to write native code even with a web app to interface with the hardware, and trying to connect the native code and web app seems difficult to me.

Here are some options that I first considered:

During testing, I also added a Rust framework that is conceptually similar to Electron, called Tauri. I’ve seen it before in my free time and I saw it again when I was browsing Rust UI frameworks at https://areweguiyet.com/. I chose to add it because I saw the high memory usage of Electron and wanted something conceptually similar but hopefully lower usage, and I was curious if Tauri might accomplish that.

Here are the criteria that I used to evaluate things. Note that not every factor is weighted equally.

I created hello world apps in all 4 frameworks and measured their memory and CPU usage. Testing was conducted on an M1 MacBook Air running macOS Sonoma (14.2.1) with 16 GB RAM. Admittedly the laptop is much faster than an RPi 4 and isn’t even running the same OS, but I’m hoping that the general trends will be similar across macOS and Linux.

The memory usage was obtained by using Activity Monitor and summing up the relevant processes’ memory usage (e.g., Electron runs multiple processes, so we need to account for all of them). The CPU usage measurement is admittedly a lot less scientific: I just watched the CPU usage of the processes and grabbed the maximum value, counting it only if it was hit repeatedly (e.g., hitting 1% CPU usage just once wouldn’t count, only if it fluctuated up to 1% a few times).

The code for all four demo applications is in our shared GitHub repository.

For the full file with citations, please see the file in Google Drive

Here are some screenshots of the hello world apps:

All four frameworks are cross-platform so we can ignore that as a deciding factor, although if one of them wasn’t it would have been a major negative point.

For Tauri, I was unfortunately unable to find any applications that I knew of that used it, which made me a little more hesitant about whether it would be as production-ready and usable for real-world apps as the other three. Therefore, I ruled out considering it further.

Between Qt, Gtk, and Electron, Qt and Gtk have similar performance requirements. Sadly, Electron uses 5x the memory and has a little bit more measurable overhead.

However, I feel much more comfortable with using HTML/CSS/JavaScript for building UIs since I’ve used them before for web development. In contrast, I’m not as familiar with how to build UIs with Qt and Gtk (either through code or the GtkBuilder system), which would take additional time to become familiar with. I also was not familiar with Qt’s tooling such as Qt Creator (although apparently it isn’t required, just recommended), so learning that would incur some additional time expense. Language-wise, I feel more comfortable with JavaScript/HTML/CSS or C than with C++. So overall, I feel a lot more comfortable using Electron compared to the other two due to the smaller learning curve.

So in the end, it is a tradeoff between performance overhead and ease-of-development.

Given that consideration, I’d rather use Electron despite the performance overhead simply because the lower learning curve is more important to me. The memory usage, despite being 5x as much, shouldn’t be an issue on the Pi when we have 8 GB (although it will make it more difficult to downsize to a board with less RAM). The fact that Electron can be easily packaged for Linux from macOS is a bonus, whereas packaging the other two from macOS will be harder (see references).

DECISION: Use Electron for the framework

First, I had to scaffold a basic Electron app. Based on the Electron tutorial which mentioned the create-electron-app command, I decided to use that so I didn’t have to spend time on boilerplate stuff.

Reading the documentation for create-electron-app, I saw I had a choice between Webpack and Vite-based templates. I’ve used Webpack in the past, while Vite was completely unfamiliar to me. However, based on https://vitejs.dev/guide/why and https://blog.replit.com/vite, it seems like it does the job of Webpack (although not all of it), so I’ll go with that. TypeScript is nice because it gives us typing information, so I’ll use the vite-typescript template.

The final scaffolding command line was:

npm init electron-app@latest ui -- --template=vite-typescript

Here’s a screenshot of the app:

I also wanted to see if I could package this for a Linux system. I spun up an ARM Linux image on my computer (using UTM and the Debian 12 image from UTM), and built a .deb package for Debian using electron-forge. After installing it, I verified that I can build packages for Linux from my Mac and have them run!