Major progress this last week. The flow looks great with the two servers, one of which has threaded queue consumption. This week I implemented a ton of framework classes, POJOs that will wrap our data cleanly and clearly. A major question also came up this week: how do we decide whether we are in a new set or still in the same set? Trusting the classifier so much that a single new exercise classification triggers a new set seems foolish, so we came up with an alternate method. We track the last 5 classifications (number subject to change), and if the majority of those 5 are a new exercise, we close the previous set and open a new one. Some edge cases are handled, but those are still being ironed out.
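A minimal Java sketch of the majority-vote rule described above. It assumes that "majority" means a single exercise label holding a strict majority of the window (at least 3 of 5), and that the first set opens only once such a majority exists. The class names `SetTracker` and `WorkoutSet` are hypothetical, and the edge-case handling mentioned above is not reproduced.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: decides set boundaries by majority vote over the
// most recent classifier outputs instead of trusting any single one.
class SetTracker {

    // Hypothetical POJO for a single set; only the exercise label is kept here.
    static final class WorkoutSet {
        private final String exercise;

        WorkoutSet(String exercise) {
            this.exercise = exercise;
        }

        String getExercise() {
            return exercise;
        }
    }

    private final int windowSize;                        // 5 in the report, subject to change
    private final Deque<String> window = new ArrayDeque<>();
    private final List<WorkoutSet> closedSets = new ArrayList<>();
    private WorkoutSet currentSet;                       // null until the first majority appears

    SetTracker(int windowSize) {
        this.windowSize = windowSize;
    }

    // Feeds one classification; returns true if this call opened a new set.
    boolean onClassification(String label) {
        window.addLast(label);
        if (window.size() > windowSize) {
            window.removeFirst();                        // keep only the last windowSize labels
        }
        String majority = majorityLabel();
        if (majority == null) {
            return false;                                // no label has a majority yet
        }
        if (currentSet != null && currentSet.getExercise().equals(majority)) {
            return false;                                // majority agrees with the open set
        }
        if (currentSet != null) {
            closedSets.add(currentSet);                  // close the previous set
        }
        currentSet = new WorkoutSet(majority);           // open a new set
        return true;
    }

    // Label held by more than half of the full window size, or null if none.
    private String majorityLabel() {
        Map<String, Integer> counts = new HashMap<>();
        for (String label : window) {
            counts.merge(label, 1, Integer::sum);
        }
        for (Map.Entry<String, Integer> entry : counts.entrySet()) {
            if (entry.getValue() > windowSize / 2) {
                return entry.getKey();
            }
        }
        return null;
    }

    String getCurrentExercise() {
        return currentSet == null ? null : currentSet.getExercise();
    }

    List<WorkoutSet> getClosedSets() {
        return Collections.unmodifiableList(closedSets);
    }

    public static void main(String[] args) {
        SetTracker tracker = new SetTracker(5);
        String[] stream = {"squat", "squat", "squat", "curl", "squat", "curl", "curl", "curl"};
        for (String label : stream) {
            if (tracker.onClassification(label)) {
                System.out.println("New set: " + tracker.getCurrentExercise());
            }
        }
        // Prints "New set: squat" then "New set: curl"; the lone "curl" at index 3 is outvoted.
    }
}
```

In this example the single stray "curl" does not end the squat set; the curl set opens only once "curl" holds 3 of the last 5 slots. Because the threshold is computed from the full window size, a partially filled window at the start of a workout still needs 3 agreeing classifications.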

In the next week I hope to implement actually hitting the classifier on AWS SageMaker and sending results to a frontend. I also hope to get some basic form correction going. The schedule will need to be very aggressive over the next two weeks to make the final demo.

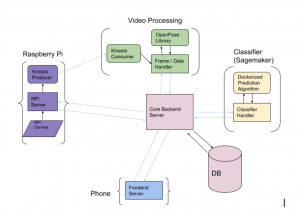

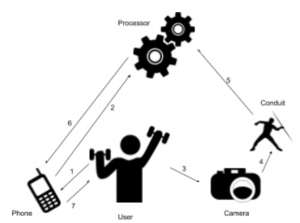

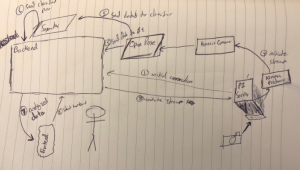

the whole team. I specifically looked into the high-level architecture of our system: which components we need, how they interact, and how they communicate. We came into this week with a rough idea of the components involved, as can be seen in the left figure, and as a team we wanted to take this high-level model and start deciding what will actually fill the role of each part. I looked into how to link the Raspberry Pi to AWS Kinesis, which would fulfill the role of the conduit, and it turns out AWS provides a tutorial on how to do so. The Pi can act as a Kinesis producer and the AWS server as the Kinesis consumer, streaming our video straight into processing. I then sketched how the components can interact in an average use case. The Pi will first need to link with the backend and establish its connection to AWS Kinesis. The user will then start a workout through the frontend, which will prompt the backend to tell the Pi to start a stream. That stream can be sent through Kinesis to the server running OpenPose, and the resulting data can be sent both to AWS SageMaker, which we plan to use to run our classifier, and to the backend for user feedback. This week I focused on ironing out these details of the flow to make sure that the technologies we want to use can work together and that the architecture is clear.
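A minimal in-process Java sketch of the streaming leg of this flow, from the Pi producing frames to the consumer fanning pose data out to the classifier and the backend. It assumes a `LinkedBlockingQueue` as a stand-in for the Kinesis stream and uses stub methods in place of OpenPose, SageMaker and the backend; `PipelineSketch`, `Frame`, `PoseData` and `estimatePose` are hypothetical names, not the Kinesis, OpenPose or SageMaker APIs.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical in-process sketch of the streaming leg of the flow.
// A BlockingQueue stands in for the Kinesis stream; the pose, classifier and
// backend steps are stubs, not the OpenPose, SageMaker or Kinesis APIs.
class PipelineSketch {

    // Hypothetical POJO for one video frame sent by the Pi.
    static final class Frame {
        final int sequence;

        Frame(int sequence) {
            this.sequence = sequence;
        }
    }

    // Hypothetical POJO for pose-estimation output derived from one frame.
    static final class PoseData {
        final int sequence;
        final String keypoints;

        PoseData(int sequence, String keypoints) {
            this.sequence = sequence;
            this.keypoints = keypoints;
        }
    }

    // Sentinel frame that tells the consumer thread the stream has ended.
    private static final Frame END_OF_STREAM = new Frame(-1);

    final BlockingQueue<Frame> stream = new LinkedBlockingQueue<>();
    final List<PoseData> sentToClassifier = Collections.synchronizedList(new ArrayList<>());
    final List<PoseData> sentToBackend = Collections.synchronizedList(new ArrayList<>());

    // Stub standing in for running OpenPose on a frame.
    private PoseData estimatePose(Frame frame) {
        return new PoseData(frame.sequence, "keypoints-" + frame.sequence);
    }

    // Consumer side: takes frames off the queue on its own thread and fans
    // each pose result out to the classifier and the backend.
    Thread startConsumer() {
        Thread consumer = new Thread(() -> {
            try {
                while (true) {
                    Frame frame = stream.take();         // blocks until a frame arrives
                    if (frame == END_OF_STREAM) {
                        break;
                    }
                    PoseData pose = estimatePose(frame);
                    sentToClassifier.add(pose);          // would be a SageMaker request
                    sentToBackend.add(pose);             // would feed user feedback
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();      // preserve interrupt status and exit
            }
        }, "pose-consumer");
        consumer.start();
        return consumer;
    }

    // Producer side: what the Pi would do after the backend tells it to start.
    void produce(int frameCount) throws InterruptedException {
        for (int i = 0; i < frameCount; i++) {
            stream.put(new Frame(i));
        }
        stream.put(END_OF_STREAM);
    }

    public static void main(String[] args) throws InterruptedException {
        PipelineSketch pipeline = new PipelineSketch();
        Thread consumer = pipeline.startConsumer();
        pipeline.produce(3);
        consumer.join();                                 // wait until the sentinel is consumed
        System.out.println(pipeline.sentToClassifier.size() + " poses to classifier, "
                + pipeline.sentToBackend.size() + " to backend");
    }
}
```

With a single consumer thread, frames are processed in the order the producer sent them. Adding more consumer threads on the same queue would raise throughput, but ordering would no longer be guaranteed and each thread would need its own end-of-stream sentinel.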