Accomplishments

This week, I didn’t complete as much as I had hoped. I wanted to finish implementing and testing the throughput-calculating module, but I spent a lot of time trying to use YOLOv3 for it. Much of that time went into figuring out how to train a model on the Freiburg Groceries Dataset available online (http://aisdatasets.informatik.uni-freiburg.de/freiburg_groceries_dataset/), which has 25 different classes of objects typically found in grocery stores. A significant issue that I dealt with up until Thursday/Friday was that I was getting many errors trying to run Darknet (the backend for YOLOv3) on Google Colab, which I figured out was due to the CUDA and OpenCV versions on Google Colab being different from the versions Darknet requires. These errors were mainly a result of YOLOv3’s age as a legacy version of the framework, and my attempts to fix them cost a large amount of time.

I finally decided to pivot to YOLOv8, which was much less time-consuming and allowed me to make some progress. Currently, I have written a rudimentary algorithm for determining the throughput of a cashier: the software module takes in a frame every ⅕ second, checks how many new items have appeared on the left side of the frame (where the bagging area is), and adds those processed items to a running total, which is then divided by the time elapsed. Pictures of my current code are included below. Since the model is pretrained, it doesn’t recognize grocery store items, so I tested whether the code would calculate throughput from a video stream at all using generic objects (cell phone, wallet, computer mice, chapstick):

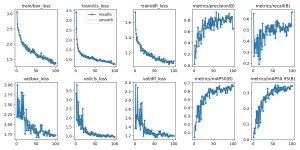

Throughput is being calculated and updated 5 times a second, but I will need to experiment to figure out whether this is the optimal number of updates per second. A huge benefit I noticed from pivoting to YOLOv8 is that my code is much more readable and straightforward. My Google Colab GPU access was restricted for some of the week due to overuse while I was trying to figure out the YOLOv3 issues, so I am training the model on the dataset as of tonight (3/16). I will check back on it every few hours to see when it is finished.
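For anyone following along, here is a minimal sketch of the counting logic described above. It is not my actual module: the `ThroughputCounter` class, the `bagging_fraction` parameter, and the use of per-object track IDs to decide whether an item is "new" are all assumptions made for illustration, and the detections are hard-coded instead of coming from YOLOv8.

```python
from dataclasses import dataclass, field


@dataclass
class ThroughputCounter:
    """Hypothetical helper: counts items that newly appear in the bagging area."""
    frame_width: float
    bagging_fraction: float = 0.5  # assumption: left half of the frame is the bagging area
    seen_ids: set = field(default_factory=set)
    total_items: int = 0

    def update(self, detections, elapsed_seconds):
        """Process one sampled frame.

        detections: iterable of (track_id, x_center) pairs (assumed format).
        Returns the running throughput in items per second.
        """
        boundary = self.frame_width * self.bagging_fraction
        for track_id, x_center in detections:
            # Count an item once, the first time it shows up left of the boundary.
            if x_center < boundary and track_id not in self.seen_ids:
                self.seen_ids.add(track_id)
                self.total_items += 1
        if elapsed_seconds <= 0:
            return 0.0
        return self.total_items / elapsed_seconds


SAMPLE_PERIOD = 0.2  # one frame every 1/5 second, as described above
counter = ThroughputCounter(frame_width=640)

# Made-up detections for three consecutive sampled frames.
frames = [
    [(1, 500.0)],              # item 1 is still on the right side
    [(1, 200.0)],              # item 1 enters the bagging area
    [(1, 180.0), (2, 100.0)],  # item 2 appears; item 1 is not counted again
]
for i, detections in enumerate(frames, start=1):
    rate = counter.update(detections, elapsed_seconds=i * SAMPLE_PERIOD)
```

Keeping a set of already-counted IDs is one simple way to avoid counting the same item in every frame it stays visible; it only works if the detector or tracker assigns stable IDs across frames.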

Progress

I am still behind schedule, but tomorrow and Monday I will be testing the code once the model is done training. After that, I’ll be able to help Shubhi and Simon with their tasks, since relative fullness and line detection are the most important parts of our project.

YOLOv8 code:

Outdated YOLOv3 snippet for reference: