Accomplishments

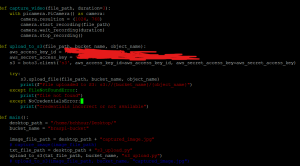

This week, before Wednesday, I spent most of my time integrating our software modules to prepare for the interim demo. I got the main system running for a single counter using test videos from a trip to Salem’s, but OpenCV would not display any of the model predictions (with images), which at the time looked like an environment issue. I was not able to fix this before the interim demo. When I looked into it afterward, the cause appeared to be that cv2.imshow, which the backend uses to display images, is not thread-safe, so using two separate models for cart counting and item counting meant we could not see visually what was going on.

In the meantime, I set up a Raspberry Pi and worked on our chosen method for uploading and fetching image/video data from the cameras. We are using an S3 bucket to store real-time footage captured from our RPis, then fetching that data and using it to compute results on our laptops. I set up the bucket and have code that works for uploading generic text and Python files, but I haven’t been able to upload videos or images recorded with the RPi camera module yet, because my program fails to run when I import the Raspberry Pi camera module package.
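A common workaround when a GUI call such as cv2.imshow has to stay on one thread is to let the model threads only produce frames and have the main thread do all the displaying. The following is a minimal sketch of that pattern, not our actual integration code: the function name, the `workers` mapping, and the `show` callback are all hypothetical, and the display call is passed in so the sketch runs without OpenCV installed.

```python
import queue
import threading


def run_with_single_display_thread(workers, show, max_pending=8):
    """Run each worker in its own thread; call `show` only on the calling thread.

    workers: dict mapping a window name to a zero-argument callable that
             returns an iterable of frames (hypothetical stand-in for a
             model loop such as cart counting or item counting).
    show:    callable(window_name, frame). With OpenCV this would wrap
             cv2.imshow(window_name, frame) followed by cv2.waitKey(1).
    Returns the number of frames displayed.
    """
    frames = queue.Queue(maxsize=max_pending)
    done = object()  # sentinel marking that one worker has finished

    def produce(name, make_frames):
        try:
            for frame in make_frames():
                frames.put((name, frame))  # blocks if the display falls behind
        finally:
            frames.put((name, done))

    threads = [
        threading.Thread(target=produce, args=(name, fn), daemon=True)
        for name, fn in workers.items()
    ]
    for t in threads:
        t.start()

    remaining = len(threads)
    shown = 0
    while remaining:
        name, frame = frames.get()
        if frame is done:
            remaining -= 1
            continue
        show(name, frame)  # the only place display code ever runs
        shown += 1

    for t in threads:
        t.join()
    return shown
```

With OpenCV, `show` could be a small function that calls `cv2.imshow(name, frame)` and then `cv2.waitKey(1)`; each model gets its own window name, and neither model thread touches the GUI.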

Here is my code and the S3 bucket after running the code a few times on different files:

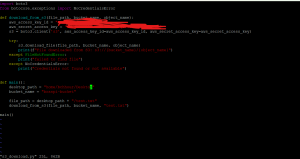

I also wrote some code for downloading files from the S3 bucket to a laptop, which I haven’t tested yet.
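As a rough sketch of what a download step like this could look like (not the untested project code itself), assuming boto3 as the S3 client library and a hypothetical bucket name and key prefix:

```python
from pathlib import Path


def download_prefix(s3_client, bucket, prefix, dest_dir):
    """Download every object under `prefix` in `bucket` into `dest_dir`.

    s3_client is expected to behave like boto3.client("s3"); it is passed
    in so the function can be exercised with a stand-in client.
    Returns the list of local paths that were written.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    downloaded = []

    # list_objects_v2 returns at most 1000 keys per call, so paginate.
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            key = obj["Key"]
            if key.endswith("/"):
                continue  # skip "folder" placeholder objects
            target = dest / Path(key).name
            s3_client.download_file(bucket, key, str(target))
            downloaded.append(target)
    return downloaded


# Example call (bucket and prefix names are made up for illustration):
#   import boto3
#   download_prefix(boto3.client("s3"), "example-footage-bucket",
#                   "footage/", "downloads")
```

Files are saved under their base name only, so two keys with the same file name in different "folders" would overwrite each other; keeping the full key path locally would avoid that.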

Progress

We have a new day-by-day schedule with many tasks so that we can finish our project on time. I am roughly keeping up with it for now and hope to finish the code for uploading real-time footage to the S3 bucket (and fetching it) soon. Today and tomorrow I need to extend the current code so that it runs continuously and keeps collecting real-time footage, instead of recording from the camera module for only 3 seconds, and so that it also handles USB camera footage.
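One possible shape for the continuous version is a loop that records short clips back to back and uploads each one as soon as it is written. This is only a sketch under assumptions: `record_clip` and `upload` are hypothetical callables standing in for the camera and S3 code, and the `.h264` extension and `footage/` prefix are placeholders.

```python
import time


def capture_and_upload(record_clip, upload, clip_seconds=3.0,
                       max_clips=None, prefix="footage/"):
    """Record fixed-length clips in a loop and upload each one.

    record_clip(path, seconds): hypothetical callable that writes one clip.
    upload(path, key):          hypothetical callable that sends it to storage.
    max_clips: stop after this many clips; None means run until interrupted.
    Returns the number of clips handled.
    """
    count = 0
    while max_clips is None or count < max_clips:
        # UTC timestamp plus a counter keeps names unique and sortable.
        stamp = time.strftime("%Y%m%dT%H%M%S", time.gmtime())
        name = f"clip_{stamp}_{count:06d}.h264"
        record_clip(name, clip_seconds)
        upload(name, prefix + name)
        count += 1
    return count
```

Because recording and uploading happen one after the other here, the camera is idle during each upload; moving the upload to a separate worker would close that gap.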

Testing

For testing the throughput calculations, I plan to use video footage: I will measure the time between the cashier beginning and finishing checking out a batch of items, manually calculate the throughput by dividing the number of items in that batch by the elapsed time, and then look at the difference between that manual value and the throughput our system computes.
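The manual calculation described above is simply items divided by elapsed time. A minimal sketch, with hypothetical function names and made-up example numbers, of how the manual value and the system's value could be compared:

```python
def manual_throughput(item_count, start_s, end_s):
    """Items per second for one batch, from timestamps read off the video."""
    elapsed = end_s - start_s
    if elapsed <= 0:
        raise ValueError("end time must be after start time")
    return item_count / elapsed


def throughput_error(system_value, manual_value):
    """Return (absolute error, relative error) of the system's estimate,
    treating the manual calculation as the reference."""
    abs_err = abs(system_value - manual_value)
    rel_err = abs_err / manual_value if manual_value else float("inf")
    return abs_err, rel_err
```

For example, 12 items checked out between the 10 s and 40 s marks gives 12 / 30 = 0.4 items per second. If the system reported 0.35 items per second for the same batch, the error would be about 0.05 items per second, or 12.5% of the manual value.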