https://drive.google.com/file/d/1bgp8IbN9lHaDXQtcBOESJFw4Yau5WrV1/view?usp=sharing

This will be replaced with a more stable YouTube link once a suitable account to upload to has been decided on.

By Sun A Cho (sunac), Katherine Dettmer (kdettmer), and Lance Yarlott (coyote)

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

I’m doing a lot of integration work at the moment. This involves interfacing with the CV code, which is mostly done, since it’s just a matter of passing values and reading them. Once I know the final form of those values, I can pass them straight to my own code, which will forward them to the Arduino. My Arduino code is generally complete, though there are still some serial bugs I’d like to grind out.

One issue I had was with note deaths. In player mode, if a note was played and then immediately replaced with another note, the first note would stay on forever because of how the player struct works: only one melody note can exist at a given time, and replacing it without culling it leaves the old note on. I had thought this was handled properly, but the problem turned out to be my ordering. At a high level, I read in data, then update the player struct and manage note times. When reading a new note, I immediately set the old note’s age to the maximum before creating the new note. My data reading is non-blocking, but for some reason the player update function still didn’t cull the note. So, I also called it in the first conditional after receiving data saying that a new note was being read. This still didn’t work. Then, on little more than an inkling of intuition, I moved that call from the bottom of the conditional block to the top, and it worked: notes were properly culled. Thinking back on it, the second change should have made it so that no notes could play at all, but that didn’t happen. With more digging I’m sure I could figure out exactly why, but it doesn’t matter enough, since the code works and I have more important things to focus on.

I’m also in the middle of changing the music generation code to produce 8th notes instead of 32nd notes. This was really easy on the Python side, but the Arduino side is taking a bit more time. It’s definitely a load off my back in terms of data transfer speed, though.

For integration with the solenoids, I’ve confirmed with a simple LED array that notes are pushed to the correct pins at the correct times. I’ve also tested latency (without solenoid activation), and when using the Arduino Due’s native USB port it’s incredibly low. I can’t say that it’s under 10 ms, but it’s low enough that I can’t notice it with 16th notes played at 90 bpm in player mode. Player mode doesn’t have any sort of sequencer, so if notes come out in time, they’re also being sent over at consistently quick speeds.

There was an issue where a solenoid activated three times in quick succession every time the Arduino was flashed, booted, or connected to over serial (which “sort of” restarts the Arduino). Apparently, on boot, the Arduino briefly sets all of its pins to INPUT, meaning the connected pins see floating voltages. This is easy to see when testing with LEDs, as they’re all dimly lit whenever any of those cases occurs. Although the solenoids don’t all try to activate at once, I think the behavior must be related to every pin being set to input. When the pins are set to their proper state at the end of startup, only pin 13 (the highest pin connected) would activate its solenoid. I’m not familiar with the circuitry on the Due, but maybe it’s related to some leakage from surrounding pins being set to OUTPUT. I’m almost certain that if I hooked a meter up between the pins and ground, I would see a peak voltage above the MOSFET’s turn-on voltage. I’ve mentioned several times that a pull-down resistor between the Arduino and the MOSFET would probably solve the issue, so now I’m just waiting for that to be added to the circuit. Then I can at least confirm or rule out these floating voltages as the cause. Then again, if the pins are set to OUTPUT and immediately driven LOW in the Arduino’s setup() function, wouldn’t one expect them not to activate anything? Either way, once this issue is solved, complete integration is just a matter of hooking up wires to their respective pins.

Oh, I also switched from the PWM pins to standard digital pins. I didn’t bother confirming why, but the PWM pins powered the LEDs inconsistently, causing them to flicker. The regular digital pins work fine, so that’s what I’m going with.
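The note-culling fix described above comes down to ordering: release the old melody note before the new one overwrites the single player slot. Here is a minimal Python sketch of that rule (not the actual Arduino code), assuming a player that holds exactly one melody note; the class and method names are hypothetical, and `pin_state` stands in for `digitalWrite()` calls.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Note:
    pin: int
    start_ms: int
    duration_ms: int


@dataclass
class MelodyPlayer:
    """Player-mode sketch: at most one melody note sounds at a time."""
    pin_state: dict = field(default_factory=dict)  # pin -> True (HIGH) / False (LOW)
    current: Optional[Note] = None

    def _release(self) -> None:
        """Drive the current note's pin LOW and clear the slot."""
        if self.current is not None:
            self.pin_state[self.current.pin] = False  # digitalWrite(pin, LOW)
            self.current = None

    def on_new_note(self, pin: int, now_ms: int, duration_ms: int) -> None:
        # Cull FIRST: overwriting `current` before releasing it would lose
        # track of the old pin and leave it HIGH forever.
        self._release()
        self.current = Note(pin, now_ms, duration_ms)
        self.pin_state[pin] = True  # digitalWrite(pin, HIGH)

    def update(self, now_ms: int) -> None:
        """Non-blocking expiry check, meant to run once per loop() pass."""
        if self.current is not None and now_ms - self.current.start_ms >= self.current.duration_ms:
            self._release()
```

For example, calling `on_new_note(22, 0, 500)` and then `on_new_note(23, 10, 500)` leaves pin 22 LOW and pin 23 HIGH, which is exactly the case that used to leave a note stuck on.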

Also, in case we lose any more solenoids, I added code that lets us play MIDI notes for specific pins only. So, if the solenoid on pin 22 dies, I can reflash the Arduino with code that marks that pin as -1, which signals the Arduino to play that note over MIDI instead.
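A minimal sketch of that fallback, written in Python for readability rather than Arduino C++: the table is a hypothetical note-to-pin mapping in which -1 marks a dead solenoid. Routing notes that have no pin at all to MIDI is an extra assumption here, in the spirit of passing extra notes into FL Studio.

```python
MIDI_FALLBACK = -1

# Hypothetical note-to-pin table (MIDI note -> Arduino pin); -1 marks a
# solenoid that has died and should be played over MIDI instead.
PIN_MAP = {60: 22, 62: 23, 64: MIDI_FALLBACK, 65: 25}


def route_note(note: int, pin_map: dict[int, int]) -> tuple[str, int]:
    """Return ("pin", pin) for a working solenoid, else ("midi", note)."""
    pin = pin_map.get(note, MIDI_FALLBACK)  # unmapped notes also go to MIDI
    if pin == MIDI_FALLBACK:
        return ("midi", note)
    return ("pin", pin)
```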

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Because I changed the minimum subdivision in my music code, as recommended during my presentation last week, I’m slightly behind. Plus, my robotics capstone demo is this Monday, so my priorities are a little shifted at the moment, considering that system is far larger than this one and there’s still a lot of final work to be done. However, I plan on completing everything for this project well in advance of demo day. There’s nothing here that I haven’t done before; I just need to get it done.

What deliverables do you hope to complete in the next week?

I need to finish my planned code changes, and that’s about it. There will obviously be debugging involved, but I’m predicting that things will go smoothly. Integration should be easy enough, as the serial pipeline is already complete, meaning the most difficult part of integration is done. Now it’s just a matter of passing values between the Python programs and sending levels to pins.

What did you personally accomplish this week on the project?

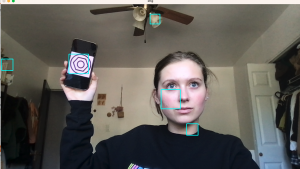

I got symbol detection working!!! This was such a difficult journey, but I think it will make our project better. Its accuracy is not great yet, but I think I can make it a lot better with more negative images and training. I also had the idea to combine color detection and symbol detection to make detection much more accurate. This would hopefully mitigate issues such as the color detection picking up lips as red, and it would also compensate for the symbol detection not being 100% accurate. I would do this by outlining the symbol in red and then testing whether the color detection and symbol detection results overlap; if they do, it is most likely the correct symbol. I also fixed an issue in the fabrication design: when I redid the design for the 6 mm acrylic, I made a mistake on the front plate that made it tilt upwards. This was a simple fix, so I corrected the file and cut a new one with Suna.
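One way to test for that overlap is intersection-over-union (IoU) between the cascade’s boxes and the boxes from color detection. The sketch below assumes both detectors report `(x, y, w, h)` rectangles (the format OpenCV’s `detectMultiScale` returns); the function names and the 0.3 threshold are hypothetical and would need tuning.

```python
Box = tuple[int, int, int, int]  # (x, y, w, h)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned rectangles."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    ix = max(0, min(ax + aw, bx + bw) - max(ax, bx))  # overlap width
    iy = max(0, min(ay + ah, by + bh) - max(ay, by))  # overlap height
    inter = ix * iy
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def confirmed_symbols(symbol_boxes: list[Box], color_boxes: list[Box],
                      threshold: float = 0.3) -> list[Box]:
    """Keep symbol detections that overlap at least one red-color detection."""
    return [s for s in symbol_boxes
            if any(iou(s, c) >= threshold for c in color_boxes)]
```

Requiring agreement from both detectors trades a little recall for precision: a red object without the symbol, or a symbol-like shape without red, is rejected.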

Back to symbol detection: I had so much trouble getting this to work because recent versions of OpenCV no longer include the cascade-training commands I needed, since development has prioritized other features. Therefore, I tried to revert to an older version of OpenCV (below 3.4), but this led to many issues. I tried using a virtual environment on my computer, as well as installing Anaconda to try to get the older version through it. There were a lot of issues with packages not being the right version. Finally, I found a video about this issue, but it was made on Windows. I tried to convert the steps to Mac commands, but they relied on resources that weren’t compatible, so I finally got onto Virtual Andrew and sent all of my data over. There, I was able to get the right version of OpenCV and run the sample-creation and training commands. I started with fewer photos than I probably should have, just to see if I could produce the cascade file, so there is definitely room for improvement. This first image is from when I first got it working, and you can see it detecting a lot of things beyond the correct symbol.

I began adjusting the detection size settings to get rid of some of the unreasonable sizes it was detecting, and got this:

This is a little better. Combining it with color detection and adding more samples will make it much better.
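Size limits like these can be passed directly to OpenCV’s `CascadeClassifier.detectMultiScale` through its `minSize` and `maxSize` arguments. The same idea also works as a post-filter, which makes it easy to add a squareness check. A sketch, with all thresholds hypothetical and boxes assumed to be `(x, y, w, h)` tuples:

```python
def plausible_detections(boxes, min_side=40, max_side=200, max_aspect=1.5):
    """Drop detections that are too small, too large, or too elongated.

    Equivalent in spirit to detectMultiScale(gray, minSize=(40, 40),
    maxSize=(200, 200)), plus an aspect-ratio check the call lacks.
    """
    keep = []
    for x, y, w, h in boxes:
        if min(w, h) <= 0:
            continue  # degenerate box
        if not (min_side <= w <= max_side and min_side <= h <= max_side):
            continue  # implausible size for a symbol held up to the camera
        if max(w, h) / min(w, h) > max_aspect:
            continue  # the symbol should be roughly square
        keep.append((x, y, w, h))
    return keep
```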

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

On schedule.

What deliverables do you hope to complete in the next week?

Fine-tuning the cascade file and combining it with color detection (which shouldn’t be very hard) for better symbol detection. Integration with Lance’s part also needs to be done this week.

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

HW: I am a little worried that with all of the testing we have been doing, our solenoids will burn out (from “too much” use). However, we have some extra solenoids and have been using MIDI in place of the solenoids for testing at home.

SW (CV): I think the biggest risk at this point is integration between my part and Lance’s, but it should be fine. We still have a working MVP either way.

SW (Music): Serial pipeline failure. It’s robust and can accurately handle errors, but during recent testing with a robotics capstone project it has had some strange errors where it randomly disconnects (from a Raspberry Pi). A disconnect during our demo wouldn’t be the end of the world, but it would be annoying, especially since both programs would need to be restarted. Integration isn’t much of a concern in my opinion. I just need to know what values to expect on the Python side, and I just need pins on the Arduino side.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc.)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

HW: N/A, but if the solenoids were to burn out, we would use MIDI keys as a replacement.

SW (CV): Working on symbol detection, as mentioned above, and planning to combine it with color detection as described in my post.

SW (Music): Not really a huge design change, but I’m no longer representing music in 32nd notes. Instead, I’ve moved to 8th notes so that we can play at higher tempos and move through chord progressions more quickly. This will also lead to a smoother user experience in generative mode.

Provide an updated schedule if changes have occurred.

HW: N/A

SW: N/A

SW (Music): N/A

This is also the place to put some photos of your progress or to brag about a component you got working.

HW: N/A

(Extra question for this week) List all unit tests and overall system test carried out for experimentation of the system. List any findings and design changes made from your analysis of test results and other data obtained from the experimentation.

HW: When building the circuit, I tested individual solenoids to make sure they were connected correctly, and then tested them with the rest of the system to make sure they were integrated correctly. Some of the unit tests I ran were turning on one solenoid at a time and playing random keys from the Arduino, at most 4 at a time. For “old” solenoids, I noticed a bit of lag when more than one key was playing concurrently, which I roughly timed at under 100 ms; this latency was purely due to the age of the solenoids. As for the general latency between the Arduino code and the solenoids, I observed 40 ms, which is not significant for our system. This week, I’m hoping to measure the latency from the user playing a key with our object-detection system to the actuators playing the keyboard. In our informal testing, that latency was approximately 150 ms. (This was measured from the moment the print statement showed that a certain key was detected, so there is definitely additional latency in reading and detecting the object itself.)

SW (CV): One test I needed to run was the time from when the user puts the color into the right box to when the code recognizes it. I expect this to change once I finish the final implementation combining symbol detection and color detection, but I doubt it will change very much. I measured about 600 ms on average, which is not bad, though I do think it will change by demo time. The other test I ran was the detection rate, which can be hurt by other objects with a red hue that the camera picks up. I measured it as accurate about 92% of the time, and I expect a better value once the symbol detection is fully integrated.
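If the detection loop logged timestamps, summarizing a test run like the ones above could look like the sketch below. The trial format (placement time, detection time or `None` for a miss) and the example values are hypothetical; a miss here counts against the detection rate, and false positives would need separate bookkeeping.

```python
from statistics import mean
from typing import Optional


def summarize_trials(trials: list[tuple[float, Optional[float]]]) -> tuple[float, float]:
    """Return (mean latency in ms over detected trials, detection rate).

    Each trial is (time the color was placed, time it was detected or None).
    """
    if not trials:
        raise ValueError("no trials recorded")
    latencies = [detected - placed for placed, detected in trials if detected is not None]
    rate = len(latencies) / len(trials)
    return (mean(latencies) if latencies else float("nan"), rate)
```

For example, trials `[(0, 600), (1000, 1500), (2000, None), (3000, 3700)]` give a mean latency of 600 ms and a detection rate of 0.75.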

SW (Music): I’ve done a lot of unit testing for both the music and serial code. For music, my main concern was generative mode. There’s only one thing to test there, and that’s the generation output. I use normal distributions to pick notes and rhythms, so I generated a bunch of melodies and checked whether they made sense over their progressions. This was mostly opinion-based, so I just vibe-checked all of them. I also made sure the rhythms were reasonable. I wasn’t really satisfied with those results, but since I’ve switched to a slower model, I have plenty of chances to tweak the generation probabilities. I did some brief tests on generating rests, but honestly it just wasn’t worth it. Notes naturally die out anyway, so there’s not much point in actively “playing” rests (even though good musicians should!), since our project is geared toward simple things. For serial/Arduino, the vast majority of my tests were done in the heat of debugging, so I don’t remember them exactly. However, they were things like “step through each and every byte I’m supposed to send using the Serial Monitor” and “send a single byte and track every location it goes to.” These evolved into tests with Python interfacing, like “send a sequence of data and have the Arduino report success back,” which inevitably evolved into “send the same string of data and then read in as much data as humanly possible from the Arduino so that I can figure out where my bytes are going.” Once the pipeline worked (or was at least half-functional), I started sending melodies to the Arduino, then chords. For the majority of the semester, I haven’t had any solenoids to test with, so at first I just had the Arduino report which notes it was playing.
Then, after Professor Sullivan recommended trying out MIDI output as well, I remembered a MIDIUSB library I briefly used when trying to build an electric vibraphone a few years ago. I dusted that off and made the Arduino interface with FL Studio, and I started testing notes and melodies that way. FL Studio doesn’t really ensure that circuit integration will go well, though, so I also set up a simple LED circuit to test melodies on. It’s currently 7 LEDs; I was going to extend it to 14, but since we only have about 10 solenoids, I’ll most likely stick with 7 and pass extra notes into FL Studio. The tests here were just sending notes, chords, melodies, everything. I also did some simple timing tests by flashing LEDs at various tempos. As long as the Arduino is in time, aged solenoids will sound rubato at best and drunk at worst, which still isn’t terrible at lower tempos. I plan on continuing these tests throughout integration and up until the demo.
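The melody check described above was done by ear. As a rough automated complement, one could measure how many generated notes are tones of the chord underneath them. The sketch below assumes melodies are logged as hypothetical `(midi_note, chord_degree)` pairs in C major; what ratio counts as “making sense” would still be a judgment call.

```python
C_MAJOR_PCS = [0, 2, 4, 5, 7, 9, 11]  # pitch classes of the C major scale


def triad_pitch_classes(degree: int) -> set[int]:
    """Pitch classes of the diatonic triad on scale degree 1-7 in C major."""
    i = degree - 1
    return {C_MAJOR_PCS[(i + k) % 7] for k in (0, 2, 4)}


def chord_tone_ratio(melody: list[tuple[int, int]]) -> float:
    """Fraction of (midi_note, chord_degree) pairs whose note is a chord tone."""
    if not melody:
        return 0.0
    hits = sum(1 for note, degree in melody
               if note % 12 in triad_pitch_classes(degree))
    return hits / len(melody)
```

For instance, C, E, D over a C chord followed by F over an F chord scores 0.75: only the passing D is outside its chord.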

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

I am currently working with Katie and Lance to integrate our parts. With Katie, I’m correcting the mistake we made in our initial fabrication attempt: one of the sides was a bit long and had to be cut down to be balanced. With Lance, I’m helping make sure his Arduino output doesn’t generate random notes in the first few seconds (this issue isn’t fully addressed yet).

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

The integration is definitely taking longer than expected, but we are in a good place with a working MVP.

What deliverables do you hope to complete in the next week?

Finalizing the fabrication and integration.

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

Apparently there were some “issues” with previous status reports, so here’s an overview of my entire set of software that I’ve written, including things I’ve done these past two weeks. Strap in!

I played around with some new chord progressions and added them to the list of possible sets to choose from. These feed into the generative framework and help guide generated melody notes along their path. Progressions are represented by the scale degree of each chord’s root in C major, so a C major chord is 1, an F major chord is 4, a G major chord is 5, and so on. The progressions are stored in an n×4 2D array, where n is the total number of possible progressions. I picked these progressions by hand for two reasons: I want them to resolve back to the 1 somewhat nicely, and I want to avoid strange repetitions or patterns that might sound unpleasant to users.
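To make the representation concrete, here is a small Python sketch that expands a scale degree into its diatonic triad in C major and flags back-to-back repeats. The progressions listed are hypothetical examples, not the hand-picked set, and the repeat check is only one possible reading of “strange repetitions.”

```python
# Hypothetical example progressions: an n x 4 table of scale degrees in C major.
PROGRESSIONS = [
    [1, 4, 5, 1],
    [1, 6, 4, 5],
    [1, 5, 6, 4],
    [6, 4, 1, 5],
]

C_MAJOR = [60, 62, 64, 65, 67, 69, 71]  # MIDI notes C4..B4


def chord_tones(degree: int) -> list[int]:
    """MIDI notes of the diatonic triad (root, third, fifth) on degree 1-7."""
    idx = degree - 1
    tones = []
    for offset in (0, 2, 4):  # stack thirds within the scale
        octave, pos = divmod(idx + offset, 7)
        tones.append(C_MAJOR[pos] + 12 * octave)
    return tones


def has_immediate_repeat(progression: list[int]) -> bool:
    """True if the same chord appears twice in a row."""
    return any(a == b for a, b in zip(progression, progression[1:]))
```

For example, `chord_tones(5)` gives G, B, D as `[67, 71, 74]`.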

For reference, I made a slight change to generative mode, which hasn’t come up in a while because I’ve been dealing with embedded code. As stated, chord progressions are now picked from a list, and the program itself isn’t allowed to generate them. Melody notes are then generated on top of them. Two parameters decide what is generated: one sets the tonal mood, and the other sets the rhythmic intensity. Tonal mood isn’t necessarily saying that the notes should make you feel one way or the other; it just says that the initial vibe of the chord progressions and melody is either somewhat bright or somewhat dark (though dark can be hard to achieve in C major without non-diatonic notes). I decided the tonal moods of the chords arbitrarily. Rhythmic intensity defines how quickly the piece should be played. The program steps through each possible count, with subdivisions ranging from 32nd notes to whole notes depending on the intensity, and chooses which count to play on. This choice is mostly random, with the input parameter shaping the odds. It is also possible to decide on a rolling basis by treating the parameter as the mean of a probability distribution and randomly selecting a rhythm at each step. If the parameter is 0.5 in the range [0, 1], half and quarter notes will be more common, but there’s still a chance for more interesting rhythms. Similarly, if the parameter is 0.1, there will be a large number of 16th and 32nd notes (or the reverse; it’s very easy to flip). This process repeats at every 32nd-note step: once a subdivision is chosen, the program moves to the next available count until none remain. The current algorithm does this well. The actual note is decided afterwards.
Fast rhythms will tend towards smaller changes while slower rhythms will tend to move more freely, though large jumps can happen.
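A minimal sketch of the rolling rhythm choice and the melodic step rule, assuming the intensity parameter is the mean of a normal distribution truncated to [0, 1) by rejection sampling, with low values mapped to short notes. The standard deviation, the clipping rule, the duration-to-leap scaling in `next_degree`, and the two-octave range are all hypothetical choices, not the project’s actual values.

```python
import random

# Subdivisions in 32nd-note steps: 32nd, 16th, 8th, quarter, half, whole.
SUBDIVISIONS = [1, 2, 4, 8, 16, 32]
STEPS_PER_MEASURE = 32


def truncated_normal(rng: random.Random, mean: float, sd: float,
                     low: float = 0.0, high: float = 1.0) -> float:
    """Rejection-sample a normal distribution truncated to [low, high)."""
    while True:
        x = rng.gauss(mean, sd)
        if low <= x < high:
            return x


def generate_measure_rhythm(intensity: float, rng: random.Random,
                            sd: float = 0.15) -> list[int]:
    """Return note durations (in 32nd-note steps) that exactly fill one measure.

    Low intensity values favour short notes; reverse SUBDIVISIONS to flip it.
    """
    durations = []
    remaining = STEPS_PER_MEASURE
    while remaining > 0:
        x = truncated_normal(rng, intensity, sd)
        idx = min(int(x * len(SUBDIVISIONS)), len(SUBDIVISIONS) - 1)
        # Clip to the longest allowed subdivision that still fits in the measure.
        dur = max(d for d in SUBDIVISIONS[: idx + 1] if d <= remaining)
        durations.append(dur)
        remaining -= dur
    return durations


def next_degree(current: int, duration: int, rng: random.Random,
                lowest: int = 0, highest: int = 14) -> int:
    """Pick the next scale step: short notes tend to move by small intervals."""
    sd = 0.5 + duration / 8  # longer notes are allowed larger leaps
    step = round(rng.gauss(0, sd))
    return max(lowest, min(highest, current + step))
```

Seeding a `random.Random` instance keeps generated phrases reproducible while tuning the probabilities.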

The serial side of things just keeps growing in size, all in the name of optimization. I’ve said before that I’ve been changing structs around, and that’s exactly what I’ve been doing. Frankly, I don’t think much more needs to be said about that, but it appears that verbosity is the norm rather than the exception. Luckily, I’m as good at being verbose as I am at being brief. So, here’s an overview of just a few of the struct changes I’ve made in the past few weeks. “Oh, I need to know that a note is done playing so that it can be replaced? Got it.” Then I add a field to the phrase struct: an array of 256 booleans that records whether or not each note has been played. At startup, every entry is marked true so that any new incoming notes can be added. This struct is used for generative mode and stores the entire phrase. Then I realized I can’t block anything, so I need a global variable that tells me what note to play and when. So, there’s now a global index into this array. Of course, this still isn’t enough to know whether it’s safe to change existing notes. Due to the nature of generative mode, new notes may be added before old ones get the chance to be played. However, we’re also working with musical phrases, and there are a few ways to handle this. To preserve the phrase currently being played, we can change only subphrases that aren’t active. This is just a struct field of booleans: if it’s safe, change it; if not, don’t. Another way is to change absolutely everything on the fly, measure by measure. This involves a counter that marks which measure (of 8) is being played. We then pass that measure to a separate struct that feeds a non-blocking player function, and we’re free to change everything else about the music, from its phrase to its melody.
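The played-flag array, global index, and measure counter fit together roughly as in the Python sketch below (the Arduino version would use fixed-size C arrays; all names here are hypothetical). At 32nd-note resolution, 8 measures × 32 steps matches the 256 flags.

```python
STEPS_PER_MEASURE = 32                        # 32nd-note resolution
MEASURES = 8
PHRASE_STEPS = STEPS_PER_MEASURE * MEASURES   # 256


class PhraseBuffer:
    """Generative-mode phrase with a per-step 'already played' flag."""

    def __init__(self) -> None:
        self.notes = [None] * PHRASE_STEPS
        # All True at startup so that any incoming note may be written.
        self.played = [True] * PHRASE_STEPS
        self.index = 0  # global play position

    def try_write(self, step: int, note) -> bool:
        """Overwrite a slot only if its previous note has already been played."""
        if not self.played[step]:
            return False
        self.notes[step] = note
        self.played[step] = False
        return True

    def play_next(self):
        """Return the note at the play position, mark it played, advance."""
        note = self.notes[self.index]
        self.played[self.index] = True
        self.index = (self.index + 1) % PHRASE_STEPS
        return note

    def current_measure(self) -> int:
        return self.index // STEPS_PER_MEASURE
```

Because a slot becomes writable again only after it is played, the writer can fill ahead of the play position without clobbering notes that haven’t sounded yet.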
Every measure, we take what we currently have, stop updating if really necessary, and pass that measure to the player struct, which takes everything and runs with it. This is explicitly separate from the player mode structure and instead runs off a global timer that tracks the exact note location in measures and beats, down to 32nd notes. It uses Arduino’s millis() function and updates every time loop() is called. The length of a 32nd note is calculated either at the start of the file or when the tempo is updated (a message that can be sent, which I’ll expand on in a moment). The function asks, “OK, where am I?” and calculates its position in the measure from that measure’s start time. Naturally, this means some notes won’t be triggered exactly on their downbeat, but it’s still functional enough, as a 32nd note at 120 bpm lasts 62.5 milliseconds (a quarter note lasts 500 ms, and a 32nd note is an eighth of that). I don’t think the solenoids can handle that, so the tempo will be much slower. The one risk is in reading data: if reading takes too long, it might skip past some notes, which isn’t ideal. So, how can I combat this? There are a couple of ways. The first is simple: I start blocking. Now, I know what I said earlier about blocking and non-blocking, but hear me out. Most of the time the note isn’t going to change every 32nd note, so there will be downtime where the pin can hold itself HIGH for a longer period. I would account for this by adding a new structure that marks beats as available for updates. It’s checked at the start of loop(), and based on its result, new data may or may not be read. This seems to be the simplest option, since it only involves adding one new array. In fact, I’m pretty sure it is the simplest. One small concern is whether the serial buffer will overflow before I get around to reading it, since it’s only 64 bytes.
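The “where am I?” calculation reduces to integer division of elapsed time by the 32nd-note length. A Python sketch, where `now_ms` and `start_ms` stand in for values read from `millis()` and the function names are hypothetical:

```python
def step_ms(bpm: float) -> float:
    """Length of one 32nd note in ms: a quarter note lasts 60000 / bpm ms."""
    return 60000.0 / bpm / 8


def locate(now_ms: float, start_ms: float, bpm: float,
           steps_per_measure: int = 32) -> tuple[int, int]:
    """Return (measures elapsed, 32nd-note step within the current measure)."""
    steps = int((now_ms - start_ms) // step_ms(bpm))
    return divmod(steps, steps_per_measure)
```

At 120 bpm this gives 62.5 ms per 32nd note, so 2000 ms after the start the timer is at step 0 of measure 1. At 8th-note resolution each step would be four times longer (250 ms at 120 bpm), which leaves far more slack for reading serial data between steps.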
As for that 64-byte buffer: if I send in new chord information, that’s 6 bytes sent as fast as I can. If my generative phrases are sent in batches, I’ll be sending 128 notes plus a chord progression at around the same pace. That’s a whopping 519 bytes, so if I start blocking, I might lose data. I could send confirmations back, but that just slows the pipeline down and makes everything worse. So, what to do? This relates to the second way of reading in data without missing notes: fill out a bunch of notes before they’re played, then start playing. This gives me a good bit of leeway to fill in new notes ahead, and to fill notes in behind as well if I manage to catch up. (“What happens if I do catch up?” was one question I had, but the boolean for played notes described above answers it.) “What if you have so many 32nd notes in a row that you can’t read data?” If I get that many in a row, I’ll eat my shoe. The rhythms are based on samples from a truncated normal distribution. Sure, it’s not impossible to get a lot of 32nd notes in a row, especially with the rhythm parameter maxed out, but the odds of getting so many in a row that I can’t even take a fraction of a second to read in data should be astronomically low. There is one more solution that I’m not particularly fond of but am willing to implement if necessary: limiting the speed of data transmission. This opens up a whole new can of worms, since I would need to balance sending enough data that the Arduino can play something against sending it slowly enough that it can read new data. The current solution I’m going for is just waiting a while before starting playback. The mood is somewhat arbitrary, so its output only needs to generally follow the user’s movements. This is going well so far. I also implemented a MIDI framework that allows the Arduino to interface directly with a DAW in case we lose any solenoids during our demo.
This works great with player mode and just needs a tiny bit of tweaking to work with generative mode.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I’m on track. I just need to finish up generative mode’s serial framework and I’ll be good to go with both full testing and debugging.

What deliverables do you hope to complete in the next week?

The Arduino activates some solenoids on startup and connection and I need to diagnose and fix that. I also need to grind away any delays that might become a problem, either through optimization or changing the speed.

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week, I worked on materials for the mounting system and on symbol detection. At first, I was thinking of making part of the mount out of plywood so we could get the material more easily, but I found 6 mm acrylic in the scrap bin that we can use. I then chose an adhesive that I think will work a lot better than the one I tried last time, which fell apart very easily in the last prototype.

I have been running into a lot of issues with symbol detection, but decided to make my own Haar cascade again. There are a lot of hard things about this, and I have to find workarounds for most existing tutorials, so it has been pretty time-intensive. One issue is just collecting negative images to train the cascade. There seem to be much stricter permissions on large image datasets, which makes sense for privacy reasons but has made my life very difficult. However, I finally found one that worked and was able to create a large directory of negative images. I was then able to produce the file I needed for detection, but it detects the symbol very poorly, so I am troubleshooting that.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Nope!

What deliverables do you hope to complete in the next week?

Tomorrow, I am going to laser cut the mounting system and put it together. The rest of the week, I am going to work on getting symbol detection working well enough to be used.

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

HW: One unexpected risk we encountered this week was needing to buy more solenoids. I also noticed some latency issues with the actuators: some of the old solenoids actuated with a bit of lag, and I think it’s just because we’ve used them “too much.” I’ve marked them in case this becomes a bigger issue. As long as I connect everything correctly to the first octave, we should have some extras to replace the “lagging” actuators with.

SW/Fabrication: One risk is that the adhesive we ordered might not work as well as hoped on acrylic, and the mounting system could fall apart like last time. Our contingency plan is superglue, which I think will work well enough. Worst case, I have some super-strong T-Rex tape; it just won’t look very pretty. Software-wise, same as last week: if symbol detection isn’t good enough, I will fall back on color detection.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc.)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

HW: n/a, though we did have to buy more solenoids due to my carelessness.

SW: no

Provide an updated schedule if changes have occurred.

HW: n/a

SW: n/a

This is also the place to put some photos of your progress or to brag about a component you got working.

HW: https://youtube.com/shorts/Cnb2aESx0gw

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week, I got cocky and decided to test my new circuit without testing the individual parts, which led to me burning out six solenoids. After that incident, I spent the rest of the week rebuilding the circuit and testing one solenoid at a time. By Friday, I had finished building the full two-octave circuit, attached below:

https://youtube.com/shorts/Cnb2aESx0gw?feature=share

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Nope!

What deliverables do you hope to complete in the next week?

I am hoping to work with Katherine to build the mounting system and to solder and heat-shrink the new solenoids.