What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

Hardware: the biggest risk I can think of is buying an actuator so expensive that little of the budget is left for the other members to use. Right now I am testing with an actuator that costs less than $10, but if I have to go with the one from DigiKey ($26 apiece), it will be difficult to plan for contingencies, at least ones that would require us to spend money on items we don’t currently have. If I have to buy the $26 actuator, I will have to stick to building one octave.
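To make the cost gap concrete, here is a rough sketch of the arithmetic. It assumes one actuator per key and 12 chromatic keys per octave; both are assumptions for illustration, not a confirmed part of the design. The $10 figure is only an upper bound on the cheaper actuator, and the function names are hypothetical.

```python
def actuator_cost(unit_price, keys):
    """Total cost in dollars when every key gets its own actuator."""
    return unit_price * keys


def max_keys(budget, unit_price):
    """Largest number of keys a budget covers at one actuator per key."""
    return int(budget // unit_price)


# Assumption: all 12 chromatic keys in an octave get an actuator.
KEYS_PER_OCTAVE = 12

# Prices from the report: under $10 for the test actuator, $26 for DigiKey.
for label, price in [("test actuator (upper bound)", 10),
                     ("DigiKey actuator", 26)]:
    total = actuator_cost(price, KEYS_PER_OCTAVE)
    print(f"{label}: ${total} per octave")
```

Under these assumptions, one octave costs at most $120 with the cheaper actuator and $312 with the DigiKey one. Calling `max_keys` with the actual remaining budget would show how many keys each option can cover.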

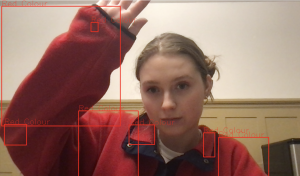

Software/OpenCV: the biggest risk is the accuracy of the color detection and how sensitive it is to other colors in the frame. I don’t think this is a major risk right now, because I have a solid basic implementation working and the remaining issues are unlikely to jeopardize the success of the project. To manage the risk, I am researching how to fine-tune the color detection and possibly use depth to ensure the right colors are being picked up. The contingency plan is to put up a black curtain behind the user so that background colors are not an issue.
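One common way to make color detection less sensitive to stray colors is to test pixels in HSV space, with a narrow hue band plus minimum saturation and brightness. The sketch below shows the idea on single pixels using only Python’s standard `colorsys` module. It is not our OpenCV code; the function names and threshold values are hypothetical placeholders that would need tuning against real camera frames.

```python
import colorsys


def hue_distance(h1, h2):
    """Shortest distance between two hues on the circular 0..1 hue wheel."""
    d = abs(h1 - h2) % 1.0
    return min(d, 1.0 - d)


def matches_target(rgb, target_hue, hue_tol=0.05, min_sat=0.5, min_val=0.3):
    """Return True if an 8-bit RGB pixel is close enough to the target hue.

    The thresholds are illustrative guesses, not tuned values.
    """
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    # Washed-out or dark pixels have unreliable hue, so reject them first.
    if s < min_sat or v < min_val:
        return False
    return hue_distance(h, target_hue) <= hue_tol


RED_HUE = 0.0  # red sits at hue 0, so matching must wrap around the wheel

print(matches_target((250, 10, 30), RED_HUE))    # slightly bluish red
print(matches_target((128, 128, 128), RED_HUE))  # gray background
print(matches_target((0, 255, 0), RED_HUE))      # green object
```

Tightening `hue_tol` or raising `min_sat` trades missed detections for fewer false positives, which is the tuning knob the risk above comes down to.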

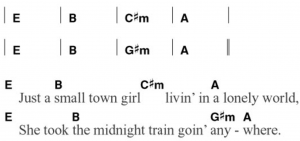

Music Sequencing + Generation: the largest concerns at the moment are timing issues and chord prediction issues. If we can’t consistently send notes at regular rates, the experience stops feeling like playing an instrument and starts feeling like fighting buggy software. On a real instrument, being out of time is the fault of the player rather than the instrument. Although we won’t give our users the option to be out of time, we ourselves should be in time so as not to inconvenience them. As for chord prediction, if our algorithm doesn’t work, we’ll end up with chords that just don’t match what’s being played. Of course, melody is 100% left to user preference, so users may find it more interesting to play non-chord tones. However, it’s our job to ensure the chord matches the notes as well as possible. I’m also considering ways to predict which notes will be played next. On one hand, notes in a key tend to want to resolve towards other notes, but on the other hand, players tend to play notes sequentially. If looking ahead doesn’t work, we can always fall back on a random chord selection for the first phrase while analyzing how the user plays, then change the progression for the second phrase, and so on. There’s definitely a lot to consider.
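Both concerns can be illustrated with a small sketch. It is not our actual algorithm: it assumes notes are MIDI numbers, assumes the key is C major, and uses hypothetical function names. For timing, each note deadline is computed from the start time rather than by adding a delay after every note, so small delays do not accumulate into drift. For chords, each diatonic triad is scored by how many recent melody notes are chord tones.

```python
def note_deadlines(start, bpm, count, steps_per_beat=1):
    """Absolute send times in seconds for `count` evenly spaced notes.

    Each time is derived from `start`, so one late note cannot shift
    every note that follows it.
    """
    step = 60.0 / (bpm * steps_per_beat)
    return [start + i * step for i in range(count)]


# Assumption: key of C major, triads stored as pitch classes (C = 0).
C_MAJOR_TRIADS = {
    "C": {0, 4, 7},
    "Dm": {2, 5, 9},
    "Em": {4, 7, 11},
    "F": {5, 9, 0},
    "G": {7, 11, 2},
    "Am": {9, 0, 4},
    "Bdim": {11, 2, 5},
}


def best_chord(recent_notes, chords=C_MAJOR_TRIADS):
    """Pick the chord containing the most of the recent melody notes.

    Ties go to whichever chord appears first in `chords`.
    """
    pitch_classes = [note % 12 for note in recent_notes]

    def score(name):
        return sum(pc in chords[name] for pc in pitch_classes)

    return max(chords, key=score)


print(note_deadlines(0.0, 120, 4))   # four beats at 120 BPM
print(best_chord([60, 64, 67, 62]))  # melody notes C, E, G, D
```

A playback loop would wait until each deadline on a monotonic clock before sending the note. Non-chord tones, such as the D in the example, simply lower a chord’s score rather than ruling it out, which leaves the melody up to the user.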

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

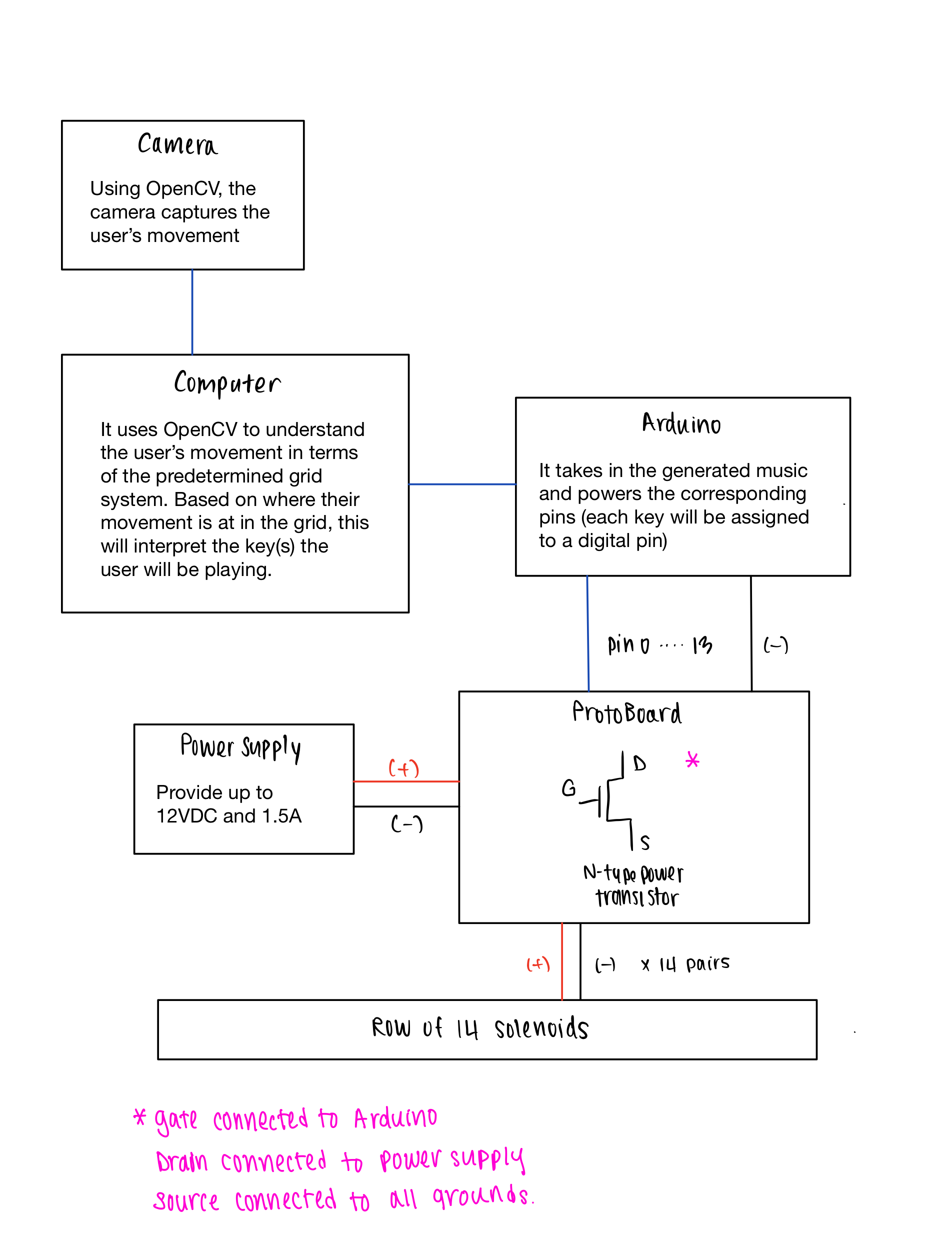

Hardware + Software: no changes have been made to the existing design of the system. On the hardware side, I am still testing which solenoid to use, and the software design is unchanged as well.

Provide an updated schedule if changes have occurred.

Hardware: N/A

Software: I (Lance) am considering moving my schedule forward a bit so that I have time to relax over spring break. Also, sound generation has been renamed to music generation to better fit the direction our design has taken. Music generation relates directly to the chord prediction algorithm mentioned above.

This is also the place to put some photos of your progress or to brag about a component you got working.

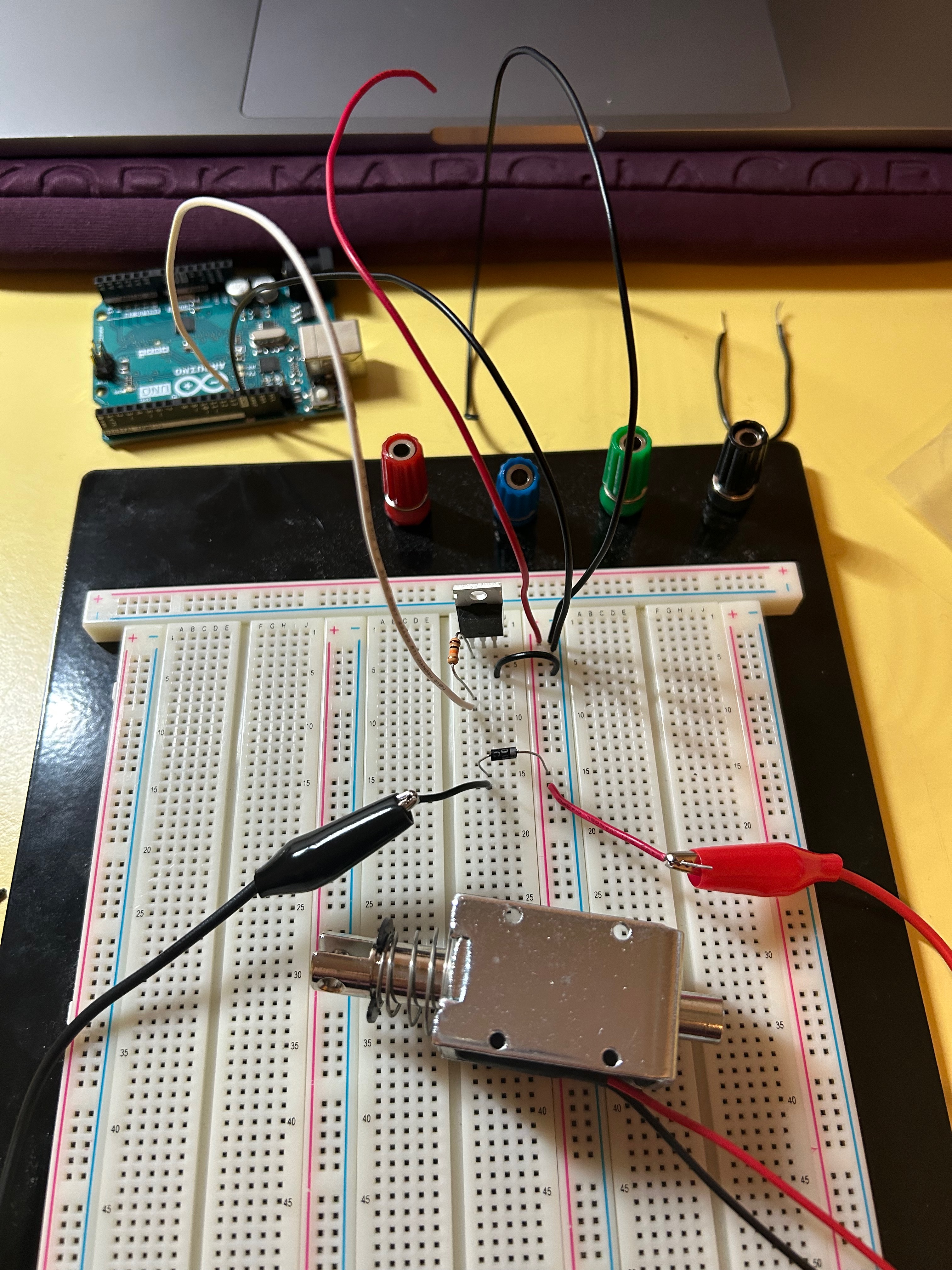

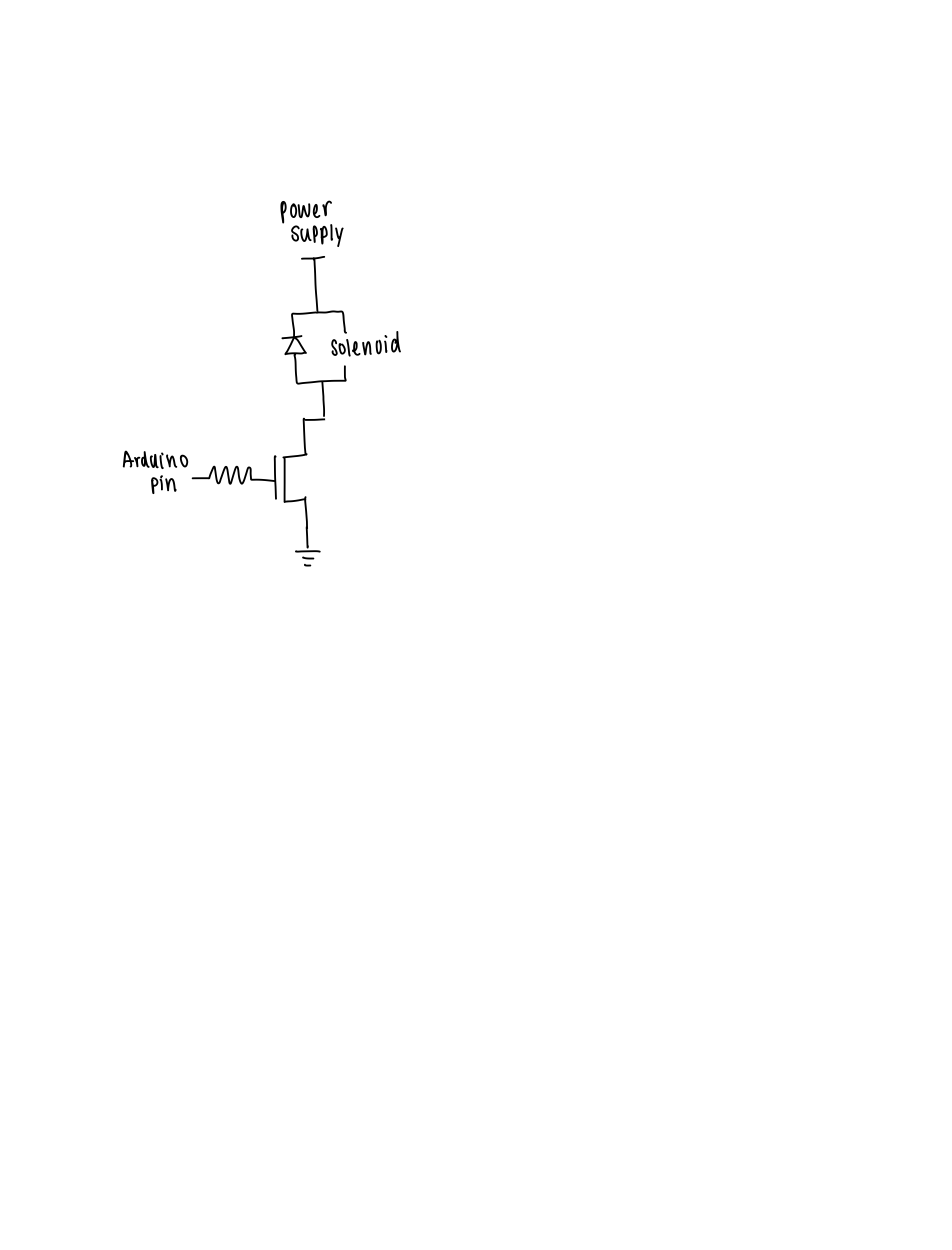

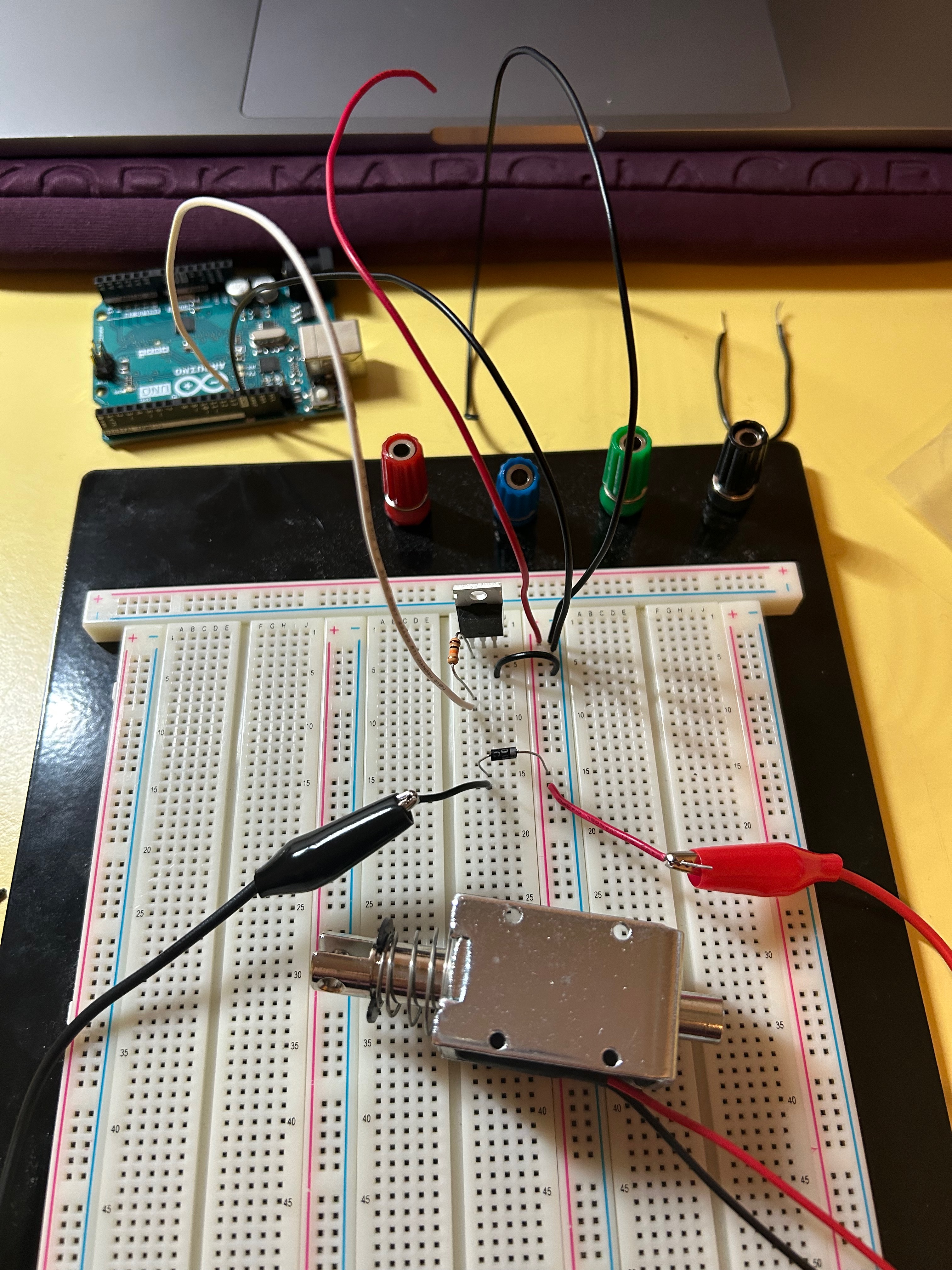

This is the test circuit for trying out a solenoid:

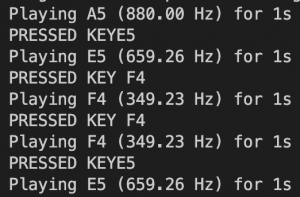

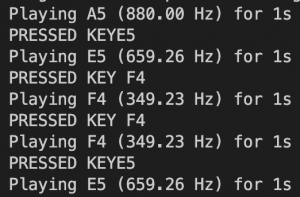

The computer now plays the appropriate piano note when the user moves into a note, which is very exciting. I tried to show it in this picture of the terminal (it is best seen in a video).


Enumerate how you have adjusted your teamwork assignments to fill in gaps related to either new design challenges or team shortfalls (e.g. a team member missing a deadline).

Thankfully, no member has missed a deadline so far. However, Sun A had to post Lance’s status report for the first week because he did not have access to WordPress. This was quickly resolved by Prof. Tamal and Lance, and he can now post his individual reports and contribute to the team’s report on WordPress. Other than that, nothing has come up that required us to adjust assignments or fill any gaps.

In the future, because Sun A does not have much experience in fabrication, Katherine will step in to help with the physical mounting structure for the actuators. And, given that Lance has a lot of experience in robotics (it is his additional major), he will help with any difficulties that arise while building the actuator system.