What did you personally accomplish this week on the project?

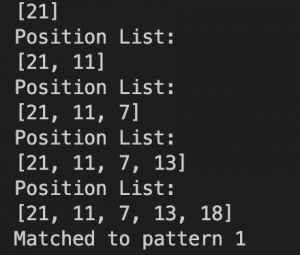

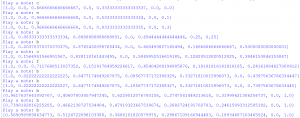

This week, I finished up a bit of platform integration code. After discussing software integration with Katherine, I decided that it would be best to go back and rewrite large portions of my own code, both for ease of integration and to ensure that the code is easy for someone else to maintain, modify, and work on. I’ve gotten through parts of it, mainly the Python end of things: the code that manages sending messages to the Arduino and the code that represents notes.

The note representation hasn’t changed much, but it has been refined. For testing purposes, it was mostly just a number, a length, and a checksum. Now, it’s a list that contains a length, a list of notes represented as their MIDI values, and finally a checksum. The current implementation has better support for chords, because the previous one simply relied on the Arduino interpreting the notes fast enough to queue them into the same time step. The clocks of the computer and the Arduino won’t be synced, so there’s no need to add a start value (rather, it would be difficult to add one, and it is currently unnecessary given the speed of the current pipeline).

As for how the notes are organized, they will be sorted into measures, which are grouped into phrases. There will be one constantly updating phrase that contains eight measures. The measures have no set length (in Python), but do have a maximum length of `timeSignatureNumerator*timeSignatureDenominator*8`. This means that in 4/4, you can have four sets of eight 32nd notes, which is generally the smallest subdivision anyone will have to deal with on a daily basis. Measures are ordered, as are phrases, but note lists are not. However, it is important to ensure that a note list contains no duplicate notes.
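The note message described above (a length, a list of MIDI values, and a checksum) could be sketched roughly as follows. This is only an illustration, not the project's actual code: the function names are hypothetical, the length is assumed to be a duration in 32nd-note ticks, and the checksum is assumed to be a simple sum of all fields modulo 256, since the real algorithm isn't specified here.

```python
from typing import Iterable, List


def checksum(length: int, notes: Iterable[int]) -> int:
    """Hypothetical checksum: sum of all fields, modulo 256."""
    return (length + sum(notes)) % 256


def build_note_message(length: int, midi_notes: Iterable[int]) -> list:
    """Build [length, [midi notes...], checksum] for one time step."""
    if length <= 0:
        raise ValueError("length must be positive")
    # A chord is several MIDI values in one message. Order does not matter,
    # but duplicates are dropped; sorting only keeps the output stable.
    notes: List[int] = sorted(set(midi_notes))
    for note in notes:
        if not 0 <= note <= 127:  # valid MIDI note numbers are 0..127
            raise ValueError(f"MIDI note out of range: {note}")
    return [length, notes, checksum(length, notes)]


def is_valid(message: list) -> bool:
    """Recompute the checksum the way a receiver might."""
    length, notes, received = message
    return checksum(length, notes) == received


# C major chord with an accidental duplicate of middle C (60).
c_major = build_note_message(8, [60, 64, 67, 60])
print(c_major)  # [8, [60, 64, 67], 199]
```

Because all notes of a chord travel in one message, the receiver no longer has to process separate messages quickly enough for them to land in the same time step.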

If I decide to make a version that does not enforce strict timing, I can just add a flag to the note list that determines whether its notes are played immediately or sent to the phrase and measure lists.
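A minimal sketch of that routing idea, under several assumptions: `Phrase`, `route`, and `send_now` are hypothetical names, the "constantly updating" phrase is modeled as a rolling window that drops its oldest measure, and the flag is passed as a separate argument for simplicity rather than stored inside the note list.

```python
from collections import deque

MEASURES_PER_PHRASE = 8  # one phrase holds eight measures


class Phrase:
    """Ordered measures; each measure is an ordered list of note messages."""

    def __init__(self):
        # With maxlen set, appending a ninth measure discards the oldest one.
        self.measures = deque(maxlen=MEASURES_PER_PHRASE)
        self.measures.append([])

    def start_measure(self):
        self.measures.append([])

    def add(self, message):
        self.measures[-1].append(message)


def route(message, phrase, send_now, immediate=False):
    """Play the message right away, or queue it in the current measure."""
    if immediate:
        send_now(message)
    else:
        phrase.add(message)


sent = []  # stands in for the link to the Arduino
phrase = Phrase()
route([8, [60, 64, 67], 199], phrase, sent.append)         # queued
route([4, [72], 76], phrase, sent.append, immediate=True)  # played now
print(len(phrase.measures[-1]), len(sent))  # 1 1
```

Keeping the decision in one place means the strict-timing and free-timing versions can share the same message format and sending code.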

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I had initially planned on finishing up integration and testing around this time, so it’s debatable whether I’m on track. I plan on finishing as much as I possibly can before the interim demo.

What deliverables do you hope to complete in the next week?

I plan on expanding upon what I already have and making it work with Katherine’s code, as well as letting the Arduino sequencer interact with the solenoids.