- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

I rewrote the synthesis section of my synthesizer. Previously it used additive synthesis (summing sine waves of different frequencies); now it uses wavetable synthesis (sampling an arbitrary periodic wavetable). I also added dual-channel support. With wavetable synthesis, generating a sample in the audio buffer takes only two look-ups in the wavetable and one linear interpolation, whereas previously I had to sum the results of multiple sine functions for every sample. Here’s a demo video.

In short, compared to additive synthesis, wavetable synthesis is much faster and can mimic an arbitrary instrument more easily.
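To make the "two look-ups plus linear interpolation" idea concrete, here is a minimal Python sketch of a wavetable oscillator. It is not the actual synthesizer code: the table size, the function names (`make_sine_table`, `wavetable_samples`), and the use of a sine wave as the table contents are all assumptions chosen for illustration. Any single-cycle waveform could be stored in the table instead.

```python
import math

TABLE_SIZE = 2048  # hypothetical table length, not taken from the project


def make_sine_table(size=TABLE_SIZE):
    """Store one cycle of a waveform; a sine is used here only as an example."""
    return [math.sin(2.0 * math.pi * i / size) for i in range(size)]


def wavetable_samples(table, freq_hz, sample_rate, n_samples, phase=0.0):
    """Generate n_samples by stepping through the table at a rate set by freq_hz.

    Returns the samples and the final phase so the next buffer can continue
    where this one stopped.
    """
    size = len(table)
    step = freq_hz * size / sample_rate  # table positions advanced per sample
    out = []
    for _ in range(n_samples):
        i0 = int(phase)            # first look-up: entry at or below the phase
        i1 = (i0 + 1) % size       # second look-up: next entry, wrapping around
        frac = phase - i0          # fractional distance between the two entries
        out.append(table[i0] + frac * (table[i1] - table[i0]))
        phase = (phase + step) % size
    return out, phase


table = make_sine_table()
buffer, next_phase = wavetable_samples(table, 440.0, 44100, 256)
```

The cost per sample is constant no matter how complex the stored waveform is, whereas an additive oscillator has to evaluate one sine per partial for every sample.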

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up with the project schedule?

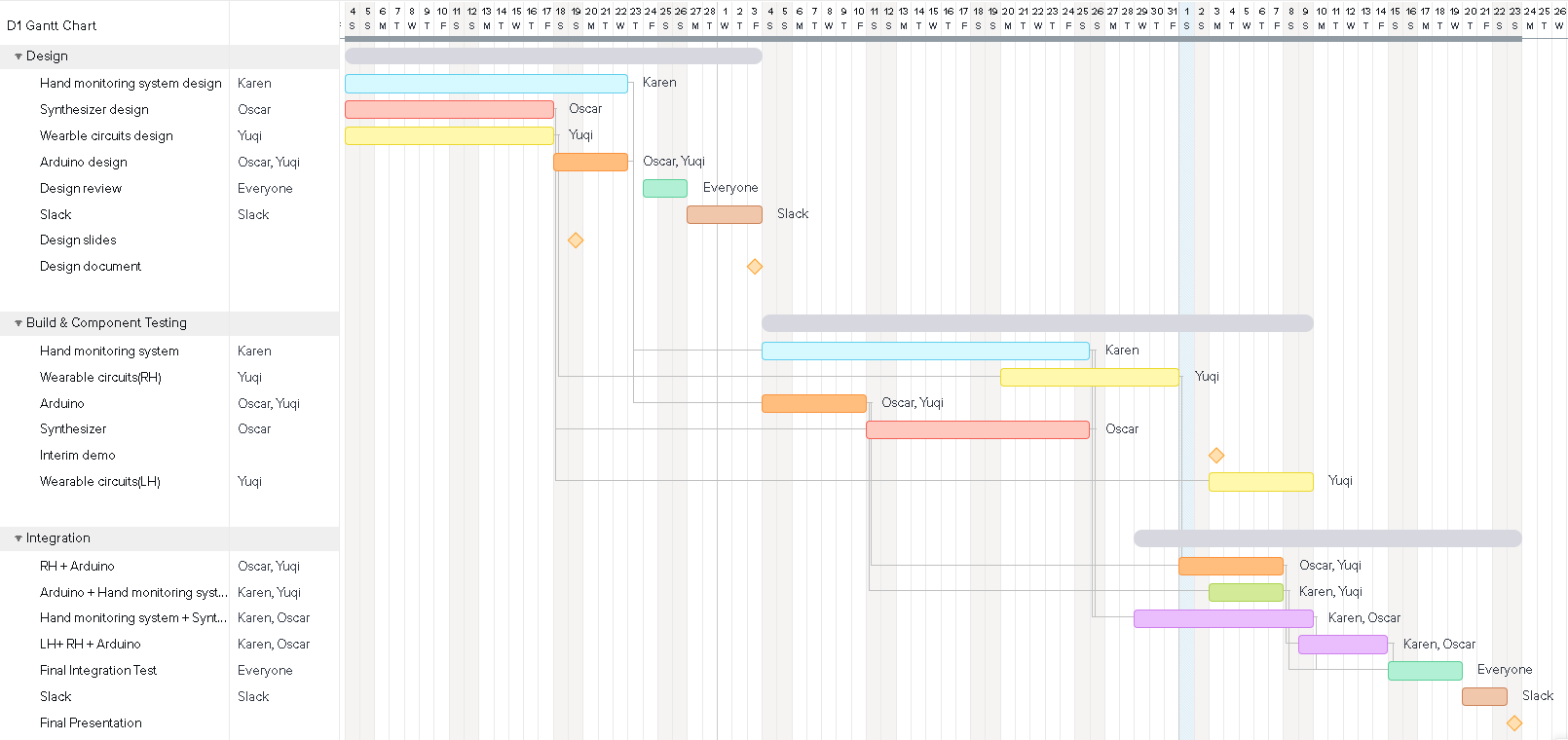

I am a little behind schedule. This week, Karen and I decided to use color tracking instead of hand tracking to reduce the lag of our system, so we are behind on our integration schedule. However, tomorrow I will write a preliminary color-tracking program and integrate it with my synthesizer in preparation for the actual integration later.

- What deliverables do you hope to complete in the next week?

I am still hoping to integrate the radio transmission part into our system.

- Now that you are entering the verification and validation phase of your project, provide a comprehensive update on what tests you have run or are planning to run. In particular, how will you analyze the anticipated measured results to verify your contribution to the project meets the engineering design requirements or the use case requirements?

We ran a basic latency test after we merged our synthesizer with the hand-tracking program. Because the lag was unbearable, we decided to switch to color tracking and wavetable synthesis. Using a performance analysis tool, I found that the old synthesizer takes about 8 ms to fill the audio buffer and write it to the speaker. Next week, I will test the latency of the new synthesizer and make sure it stays under 3 ms, which gives the color-tracking system about 10 - 3 = 7 ms to process each video frame.
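One possible way to check the 3 ms target in code, as a complement to the performance analysis tool, is to time the buffer-fill routine directly over many runs and compare the worst case against the budget. The sketch below is only an illustration: `measure_latency_ms`, `summarize`, and the `fill_buffer` callable are hypothetical names, and the 10 ms total is taken from the 10 - 3 = 7 arithmetic above. It times only the callable passed in, so the write to the speaker would need to be included in that callable to match the 8 ms figure.

```python
import statistics
import time

SYNTH_BUDGET_MS = 3.0   # target for the new synthesizer, from the report
TOTAL_BUDGET_MS = 10.0  # assumed total, implied by the 10 - 3 = 7 arithmetic


def measure_latency_ms(fill_buffer, runs=200):
    """Call fill_buffer repeatedly and return each run's duration in ms."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fill_buffer()
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings


def summarize(timings_ms, budget_ms=SYNTH_BUDGET_MS):
    """Report median and worst-case latency and what is left for tracking."""
    worst = max(timings_ms)
    return {
        "median_ms": statistics.median(timings_ms),
        "worst_ms": worst,
        "within_budget": worst <= budget_ms,
        "tracking_headroom_ms": TOTAL_BUDGET_MS - worst,
    }


# Usage with a placeholder workload standing in for the real buffer fill.
report = summarize(measure_latency_ms(lambda: sum(range(1000)), runs=50))
```

Judging by the worst case rather than the average is a deliberate choice here, since a single slow buffer is enough to produce an audible glitch.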