Team Status Report for 4/29

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risk at this moment is building the gloves. Originally, we planned to sew the chips onto the gloves. Since none of us knows how to sew, we decided to hot glue the chips to Velcro tape, which is then taped to our wool gloves. The tape also acts as a buffer that prevents the pins on the chips from poking through the gloves and causing harm. As a contingency plan, we could ask Karen’s friend to teach us to sew, but it has been hard to find a time to meet given our schedules.

- Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

There was no change to our design.

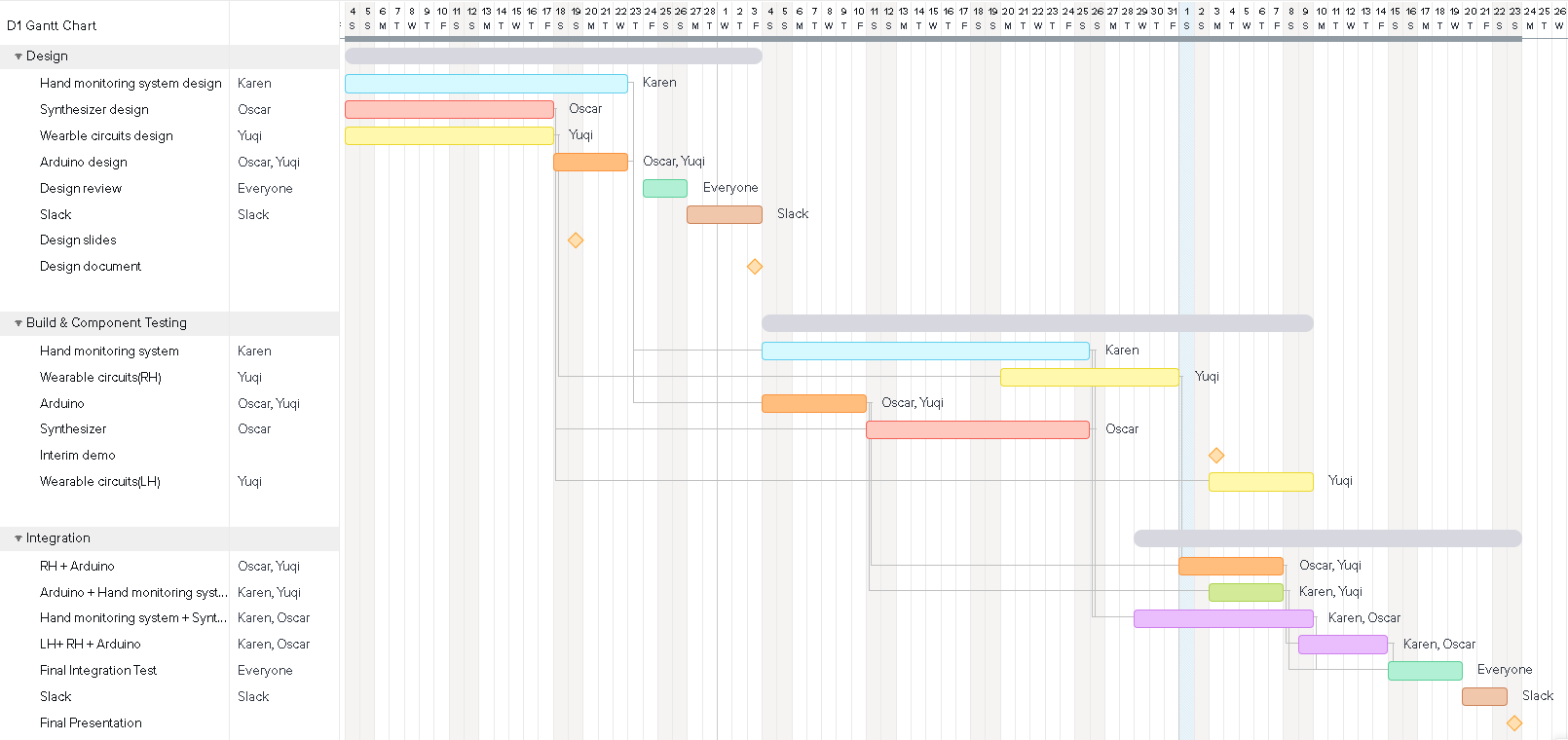

- Provide an updated schedule if changes have occurred.

The schedule has not changed.

- Component you got working.

The radio chips have been integrated with the synthesizer. The left hand’s pitch angle can now control the volume, while the right hand’s roll angle can now control the pitch bend. We will post a video here once we get to the lab tomorrow.

Team Status Report for 4/22

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risk at this moment is the radio circuits. According to Yuqi, they work when powered by Arduino Unos that are connected to the laptop. However, they did not work when I tried to power them with the Arduino Nanos. Yuqi and Oscar will test the radio chips with power supplies and with power regulators plus batteries tomorrow. There are two contingency plans. First, we might end up putting Arduino Unos on the gloves to power the chips. Second, we could buy several power regulator chips and power the radio chips directly.

- Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

There was no change to our design, but we might change the way we power our radio chips. This might mean buying either extra Arduino Unos or power regulator chips in the future.

- Provide an updated schedule if changes have occurred.

The schedule has not changed.

- Component you got working.

The radio chips are now working. Two transmitters can successfully send one integer each to the receiver. However, the transmitters are all powered by Arduino Unos, which are in turn powered by the laptop.

Team Status Report for 4/8

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risk at this moment is that the speed of our integrated system does not meet our timing requirements. The latency between gesture input and sound output is relatively high, and users can feel a clear lag. We are currently switching to a color-based hand tracking system to reduce the lag of the hand tracking part, and to wavetable synthesis to reduce the lag of the synthesizer. Because we are essentially using convolution and a filter to track colors in a video frame, we can lower the resolution of the image and/or search only patches of the image to speed up the process.
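As a rough illustration of why lowering the resolution helps, here is a minimal sketch of color tracking on a subsampled frame. It uses a plain per-pixel color threshold and a centroid rather than our actual convolution-based filter, and the names `track_color`, `tol`, and `step` are hypothetical. A frame is assumed to be a list of rows of `(r, g, b)` tuples.

```python
def downsample(frame, step):
    """Keep every `step`-th pixel in both directions (cheap resolution drop)."""
    return [row[::step] for row in frame[::step]]


def is_target(pixel, target, tol):
    """True if every channel is within `tol` of the target color."""
    return all(abs(c - t) <= tol for c, t in zip(pixel, target))


def track_color(frame, target, tol=40, step=4):
    """Return the approximate (x, y) centroid of target-colored pixels, or None.

    Only 1 / step**2 of the pixels are inspected, which is where the
    speedup comes from; the price is a coarser position estimate.
    """
    small = downsample(frame, step)
    sum_x = sum_y = count = 0
    for y, row in enumerate(small):
        for x, pixel in enumerate(row):
            if is_target(pixel, target, tol):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    # Scale the centroid back to full-resolution coordinates.
    return (sum_x / count * step, sum_y / count * step)
```

With `step=4` the loop visits one sixteenth of the pixels. Searching only a patch around the previous position would shrink the loop further in the same way.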

- Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Instead of using a hand tracking model via mediapipe, we ended up reverting to the initial design, where we use color to locate the hand. We reduced the number of colored targets from 3 to 1 because it makes classification easier and lets the user figure out sooner what sound they are producing. We also bought a webcam so that we don’t have to tune our color filters for each individual laptop webcam. Besides the financial cost, there were no additional costs, as the webcam has already been integrated into our system (both on Windows and macOS).

- Provide an updated schedule if changes have occurred.

The schedule is the same as the new Gantt chart from last week.

- Component you got working.

Now we have a basic system that allows the user to produce sound by moving their hands across the screen. The system will track the user’s middle finger through color differences (users will wear a colored finger cot) and produce the note corresponding to the finger location. So far the system supports 8 different notes (8 different quadrants on the screen). Compared to last week, this system now supports sampling arbitrary instrument sounds and dual channel audio.
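The position-to-note lookup can be sketched as follows. This is only an illustration under assumptions: the 8 regions are taken to be equal-width vertical strips across the frame, and the notes are taken to be a C major scale starting at middle C. Neither the layout nor the scale is fixed by this report, and the function names are hypothetical.

```python
# Hypothetical note set: C major scale from C4 to C5, as MIDI note numbers.
C_MAJOR_MIDI = [60, 62, 64, 65, 67, 69, 71, 72]


def region_index(x, frame_width, regions=8):
    """Map a horizontal pixel position to one of `regions` equal strips."""
    if frame_width <= 0:
        raise ValueError("frame_width must be positive")
    idx = int(x / frame_width * regions)
    # Clamp so that positions on or beyond the frame edge stay valid.
    return min(max(idx, 0), regions - 1)


def midi_to_hz(midi_note):
    """Equal-temperament conversion with A4 (MIDI 69) at 440 Hz."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)


def note_for_position(x, frame_width):
    """Frequency in Hz of the note assigned to the finger's x position."""
    idx = region_index(x, frame_width, len(C_MAJOR_MIDI))
    return midi_to_hz(C_MAJOR_MIDI[idx])
```

For a 640-pixel-wide frame, a finger at `x = 400` falls in strip 5, which in this assumed scale is A4.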

Team Status Report for 4/1

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risk at this moment is that the speed of our integrated system does not meet our timing requirements. The latency between gesture input and sound output is relatively high, and users can feel a clear lag. Currently, we are thinking about using threads and other parallel processing methods to reduce the latency created by the hand tracking call and the function that writes the audio buffer, which together contribute most of the delay. We are also looking at different tracking models that may be faster.
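One way the threading idea could look is a producer/consumer pair joined by a queue, so that tracking the next frame does not have to wait for the audio write. This is a sketch only: `tracker` and `audio_writer` are hypothetical stand-ins that pass positions around and record strings instead of calling a real tracking model or audio stream.

```python
import queue
import threading


def tracker(positions, out_q):
    """Stand-in for the hand tracking loop: push each position as it is found."""
    for pos in positions:
        out_q.put(pos)
    out_q.put(None)  # sentinel: no more frames


def audio_writer(in_q, written):
    """Stand-in for the audio loop: consume positions and 'write' a buffer."""
    while True:
        pos = in_q.get()
        if pos is None:
            break
        written.append(f"buffer for x={pos}")


def run_pipeline(positions):
    """Run tracking and audio writing concurrently and return what was written."""
    q = queue.Queue(maxsize=4)  # small bound keeps stale positions from piling up
    written = []
    threads = [
        threading.Thread(target=tracker, args=(positions, q)),
        threading.Thread(target=audio_writer, args=(q, written)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return written
```

How much this helps depends on whether the tracking and audio calls spend their time in blocking I/O or native code; pure Python work in both threads would still be serialized by the interpreter lock.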

- Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

There were no changes to the existing design of the system. All issues so far seem resolvable using the current approach and design.

- Provide an updated schedule if changes have occurred.

The updated schedule is shown below in the new Gantt chart.

- Component you got working.

Now we have a basic system that allows the user to produce sound by moving their hands across the screen. So far the system supports 8 different notes (8 different quadrants on the screen). Here’s a demo video.

Team Status Report for 3/25

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risk right now is the integration for the interim demo. Since the basic functionalities of the synthesizer and the tracking program have been implemented, we will start early and integrate these two parts next week. However, if there are some issues with python libraries, we will work to support either Windows or macOS first and make both compatible after the interim demo.

- Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

There were no changes to the existing design of the system.

- Provide an updated schedule if changes have occurred.

So far there is no change to our current schedule. However, depending on how the integration task goes next week, our schedule might change.

- Component you got working.

The gyroscope chip can now control the synthesizer’s volume as well as bend its pitch. Here’s a demo video.

Team Status Report for 3/18

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risk right now is the integration process, and the most important link is the radio transmission between the gloves and the receiving module. If we run into problems with the radio transmission, we will look for online tutorials and follow their instructions to solve them. We might also ask our TA for help. We don’t have a wireless backup if the radio link doesn’t work, so as a contingency plan we will resort to a wired connection to send angle information from the gloves to the receiving module.

- Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

There are no design changes to the system.

- Provide an updated schedule if changes have occurred.

So far there is no major update on our current schedule. Although our DigiKey order hasn’t arrived yet, the major components have arrived (radio and gyroscope IC). Hence, our design process is still on schedule.

- Component you got working.

You can now control the volume of the synthesizer with the pitch angle of the gyroscope chip. Here is a demo video.

Team Status Report for 3/11

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risk right now is the process of incorporating the pre-trained models into our system. Although we have accounted for the possibility that these models don’t fit our requirements and have come up with backup models, it is still possible that the integration method we planned will not work as intended. This brings up another potential issue: the future integration process. Since all of our team members worked on the project separately during spring break, there was less communication than there would have been in the classroom. This could make the integration process more complicated than expected. We are planning to meet more often during development to better understand each other’s work, and we are doing more research to reduce confusion in this process.

- Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

There are no design changes to the system yet. We did change one metric: the weight of the system is now 4.8 lbs instead of 6 lbs, because this number is more reasonable and should better fit user requirements.

- Provide an updated schedule if changes have occurred.

So far there is no major update to our current schedule. Several tasks were slightly extended due to spring break, but this will not impact the timeline of future items.

- Component you got working.

The gesture-command UI now works with some dummy gestures. It allows matching, and it saves and loads the data when Python is launched. The synthesizer also supports gliding between notes and customized timbre (demo video).

- Addressing faculty feedback on design presentation

Most of the questions are addressed in the design report, and the rest will be addressed below.

“Use of glove and what it will control and how? How does the battery on one hand connect to the other?”

Each glove mounts an individual circuit that contains 2 LiPo batteries, an Arduino Nano, a radio transmitter, and a gyroscope chip. Since each glove is individually powered, there is no physical connection between them. The left hand’s pitch angle will be mapped to volume in the synthesizer, while the right hand’s roll angle will be mapped to pitch bend.
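To make the two mappings concrete, here is a minimal sketch. The angle limits (plus or minus 90 degrees), the linear mapping, and the bend range of 2 semitones are all assumptions chosen for illustration, not values from our design, and the function names are hypothetical.

```python
def clamp(value, lo, hi):
    """Limit `value` to the closed interval [lo, hi]."""
    return max(lo, min(hi, value))


def angle_to_volume(pitch_deg, lo=-90.0, hi=90.0):
    """Left hand: map the pitch angle linearly onto a 0.0 to 1.0 volume."""
    return (clamp(pitch_deg, lo, hi) - lo) / (hi - lo)


def angle_to_bend_semitones(roll_deg, max_angle=90.0, bend_range=2.0):
    """Right hand: map the roll angle onto a bend of +/- `bend_range` semitones."""
    return clamp(roll_deg, -max_angle, max_angle) / max_angle * bend_range


def bent_frequency(base_hz, roll_deg):
    """Apply the pitch bend to a base note frequency (equal temperament)."""
    return base_hz * 2 ** (angle_to_bend_semitones(roll_deg) / 12)
```

With these assumed ranges, a level left hand gives half volume, and a right hand rolled to 45 degrees bends the note up by one semitone.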

“Why no Windows?”

After changing some Python libraries, our software now runs on both Windows and macOS.

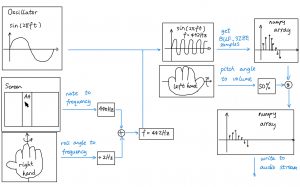

“Details about the synthesizer”

Below is a more detailed diagram of the synthesizer.

- The frequency f is based on the note selected by the user’s hand position on screen and the amount of pitch bend.

- Oscillate the waveform given by the oscillator at the frequency f.

- Sample the oscillating waveform into a buffer and multiply it by the volume parameter determined by the user’s left hand.

- Write the buffer to the audio stream.
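The steps above can be sketched in a few lines. This is an assumption-laden illustration rather than our actual code: it uses a sine wave as the waveform, a 44100 Hz sample rate, and a hypothetical `render_buffer` function, and it leaves out the final write because the audio library is not named here.

```python
import math

SAMPLE_RATE = 44100  # assumed sample rate in Hz


def render_buffer(freq_hz, volume, n_samples, phase=0.0, waveform=math.sin):
    """Fill one buffer with `waveform` oscillating at `freq_hz`, scaled by `volume`.

    Returns the buffer and the phase to start the next buffer from, so
    consecutive buffers join without a click even if `freq_hz` changes.
    """
    step = 2 * math.pi * freq_hz / SAMPLE_RATE  # phase advance per sample
    buf = []
    for _ in range(n_samples):
        buf.append(volume * waveform(phase))
        phase = (phase + step) % (2 * math.pi)
    return buf, phase


# Typical loop: recompute f and the volume from the hands for every buffer, then
#   buf, phase = render_buffer(f, volume, 256, phase)
# and hand `buf` to whatever audio stream API is in use.
```

Carrying the phase across calls is the design choice that matters here: restarting every buffer at phase zero would produce audible discontinuities whenever the pitch bend changes the frequency.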

Team Status Report for 2/25

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

After resolving the risks with our software last week, we believe that our most significant risks lie within our hardware. Because the quality of ICs (especially the tilt sensor and the radio chip) is not guaranteed, we will start component testing immediately after our chips arrive. If there is a defect, we will order another one (maybe from another seller) within one day.

- Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

We changed the batteries for our gloves from alkaline batteries to rechargeable LiPo batteries. LiPo batteries are lighter and have a higher power density, which will in turn make our system lighter and longer lasting. It will also cut down our development costs, as we don’t have to purchase batteries all the time. To address the possible (although unlikely) overheating of the batteries, we will monitor the power consumption and temperature of the LiPo batteries as we develop the system. We will also add an insulating layer to our gloves if necessary.

- Provide an updated schedule if changes have occurred.

There was no change to our schedule.

- Component that we got to work.

Oscar continued working on the synthesizer, and it now supports real-time pitch and volume control and timbre selection. Here is a short demo video.

- Adjustment to team work to fill in gaps related to new design challenge

Although Oscar was in charge of developing the synthesizer, he encountered a major sound card issue that couldn’t be resolved when developing on Ubuntu. He then switched to Windows and wrote everything from scratch using new libraries. In order to make sure the resulting product is still macOS compatible, Karen and Yuqi (both owning a MacBook) both jumped in and conducted a preliminary setup experiment. After changing the code a bit and installing the correct version of libraries, our synthesizer is now both Windows and macOS compatible.

Team Reports for 2/18

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

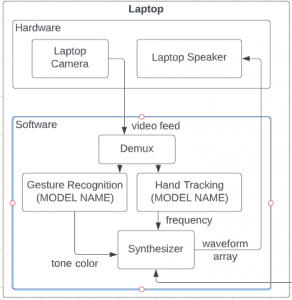

We still believe the most significant risk is the hand gesture + movement detection system. As a result, we decided to use a demux structure so that our program will perform either gesture recognition or hand tracking. The video feed will be fed into two time-multiplexed models, one for gesture recognition and one for hand tracking. Splitting the functionality gives us access to more pretrained models and hand tracking programs, which greatly reduces the development time. An abstract block diagram is shown below:

- Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Instead of training our own gesture recognition model, we decided to use a pre-existing one because of time constraints. Since we are not at that step yet, this design change did not create any extra cost for us. We also included backup options for most of the parts that we plan to write on our own in case something goes wrong. We believe that risk mitigation is necessary and that it is always better to have a plan B for everything.

- Provide an updated schedule if changes have occurred.

There was no change to our schedule. After factoring spring break and other outside events, the schedule is still reasonable for everyone.

- Component that we got to work.

Oscar wrote a basic, cursor-controlled synthesizer that supports real-time playing of 2 notes. The frequency values of those notes were generated lazily using iterator functions and OOP.
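For readers unfamiliar with lazy generation, here is a small sketch of the general idea: an oscillator object that produces sample values only when asked. It is not Oscar's code; the class name `SineOscillator`, the helper `take`, and the 44100 Hz sample rate are assumptions made for the example.

```python
import itertools
import math


class SineOscillator:
    """An endless, lazily evaluated stream of sine samples for one note."""

    def __init__(self, freq_hz, sample_rate=44100):
        self.freq_hz = freq_hz
        self.sample_rate = sample_rate

    def __iter__(self):
        # Nothing is computed until a consumer asks for the next sample.
        for n in itertools.count():
            yield math.sin(2 * math.pi * self.freq_hz * n / self.sample_rate)


def take(oscillator, n_samples):
    """Pull exactly `n_samples` values out of the endless stream."""
    return list(itertools.islice(oscillator, n_samples))
```

Because the stream is endless, the caller decides how many samples to pull per audio buffer, and no memory is spent on samples that are never played.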

- The “keep it simple” principle

The major decision we made last week was to split the gesture control part and the hand tracking part into two time-multiplexed programs. That way, we can easily develop the two parts concurrently and make the best use of any pretrained/existing programs. Our instrument has two modes: a sound selection mode (where the user can use gestures to select a particular timbre) and a play mode (where the user plays the instrument with hand positions). We will also include a software demux that allows users to freely switch between these two modes.
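A software demux of this kind can be as small as a mode flag plus a lookup table. The sketch below is hypothetical: the `Instrument` class, the `Mode` names, and the two handler callables stand in for the real gesture recognition and hand tracking programs, whose interfaces are not decided yet.

```python
from enum import Enum


class Mode(Enum):
    SOUND_SELECTION = "sound_selection"  # gestures pick a timbre
    PLAY = "play"                        # hand position picks a note


class Instrument:
    """Route each video frame to exactly one of the two programs."""

    def __init__(self, recognize_gesture, track_hand):
        self.mode = Mode.PLAY
        self._handlers = {
            Mode.SOUND_SELECTION: recognize_gesture,
            Mode.PLAY: track_hand,
        }

    def toggle_mode(self):
        """Switch between sound selection mode and play mode."""
        if self.mode is Mode.PLAY:
            self.mode = Mode.SOUND_SELECTION
        else:
            self.mode = Mode.PLAY

    def process(self, frame):
        """Send the frame to the handler for the current mode only."""
        return self._handlers[self.mode](frame)
```

Because only one handler runs per frame, the two programs never compete for processing time, and each can be developed and tested on its own.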

Team Status Reports for 2/11

The most significant risk is the hand gesture/movement detection system. Since we plan to use our own data set and train our own network, it could be more time-consuming than expected. As a contingency plan, we found two pre-trained gesture recognition models online: Real-time-GesRec and hand-gesture-recognition-mediapipe. However, these models support more gestures than we need, so we will only use a subset that maximizes the detection accuracy.

So far, no changes have been made to the existing general design of the system. However, we did introduce a plan B for some of the subparts of the system. For example, we are considering using a pre-trained model in case we are not able to collect enough data in a short period of time to train our own. This is a necessary precaution to ensure that a problem in one part of the process does not keep the overall hand gesture recognition component of the system from functioning properly.

No updates have been made to the current schedule. Currently, we are still in the process of designing our implementation and finalizing design details.

Although we are still in the research and design stage, we did come across a DIY Python synthesizer tutorial that actually mimics the theremin quite well.

Our project includes environmental and economic considerations. We added an autonomous lighting system so that our instrument can function in a very dim environment. We also want our end product to be significantly cheaper than theremins on the market, which limits our budget to under $400.