- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

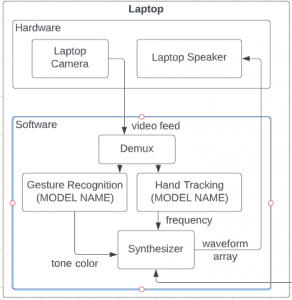

The most significant risk right now is incorporating the pre-trained models into our system. Although we have accounted for the possibility that these models do not fit our requirements and have identified backup models, the integration method we planned might still not work as intended. This brings up a related issue with the upcoming integration process. Because all of our team members worked on the project separately during spring break, we communicated less than we would have in the classroom, which could make integration more complicated than expected. To manage this, we plan to meet more often during development so that we better understand each other’s work, and we are doing more research to reduce confusion around the integration process.

- Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

There are no design changes to the system yet. We did change one metric: the weight of the system is now 4.8 lbs instead of 6 lbs, because this number is more reasonable and should better fit user requirements.

- Provide an updated schedule if changes have occurred.

So far there is no major update to our schedule. Several tasks were slightly extended due to spring break, but this will not affect the timeline of future items.

- Component you got working.

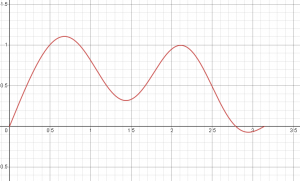

The gesture-command UI now works with some dummy gestures. It supports matching, as well as saving the data and loading it when Python is launched. The synthesizer also supports gliding between notes and customized timbre (demo video).

- Addressing faculty feedback on design presentation

Most of the questions are addressed in the design report, and the rest will be addressed below.

“Use of glove and what it will control and how? How does the battery on one hand connect to the other?”

Each glove carries its own circuit, which contains 2 LiPo batteries, an Arduino Nano, a radio transmitter, and a gyroscope chip. Since each glove is individually powered, there is no physical connection between them. The left hand’s pitch angle will be mapped to volume in the synthesizer, while the right hand’s roll angle will be mapped to pitch bend.
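As a rough illustration of this mapping, here is a minimal Python sketch. The function names, the ±45° usable angle range, and the ±2 semitone bend range are all assumptions made for the example and are not values from our design.

```python
def clamp(x, lo, hi):
    """Limit x to the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def pitch_angle_to_volume(pitch_deg, min_deg=-45.0, max_deg=45.0):
    """Map the left hand's pitch angle linearly to a volume in [0, 1].

    The angle limits are hypothetical; angles outside them are clamped.
    """
    pitch_deg = clamp(pitch_deg, min_deg, max_deg)
    return (pitch_deg - min_deg) / (max_deg - min_deg)


def roll_angle_to_pitch_bend(roll_deg, max_deg=45.0, bend_range=2.0):
    """Map the right hand's roll angle linearly to a pitch bend in semitones.

    The result lies in [-bend_range, bend_range]; both limits are hypothetical.
    """
    roll_deg = clamp(roll_deg, -max_deg, max_deg)
    return roll_deg / max_deg * bend_range
```

Under these assumed ranges, a level left hand gives half volume, and a right hand rolled fully to one side bends the pitch by two semitones.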

“Why no Windows?”

After changing some Python libraries, our software now runs on both Windows and macOS.

“Details about the synthesizer”

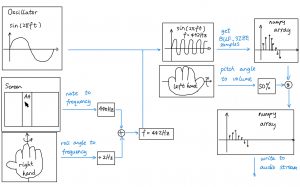

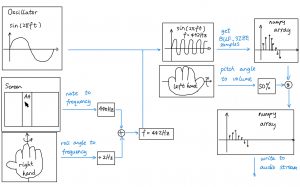

The diagram shows the synthesizer in more detail. It works in the following steps:

- Compute the frequency f from the note selected by the user’s hand position on screen and the amount of pitch bend.

- Oscillate the waveform given by the oscillator at the frequency f.

- Sample the oscillating waveform into a buffer and multiply it by the volume parameter determined by the user’s left hand.

- Write the buffer to the audio stream.
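The steps above can be sketched in Python roughly as follows. This is a simplified illustration and not our actual synthesizer code. It assumes a plain sine oscillator, MIDI-style note numbers with equal-temperament tuning (A4 = 440 Hz), a 44.1 kHz sample rate, 16-bit samples, and any object with a `write(bytes)` method standing in for the audio stream. All names here are hypothetical.

```python
import io
import math
from array import array

SAMPLE_RATE = 44100  # assumed sample rate in Hz


def note_frequency(midi_note, bend_semitones=0.0):
    """Step 1: frequency f from the selected note plus the pitch bend."""
    return 440.0 * 2.0 ** ((midi_note - 69 + bend_semitones) / 12.0)


def render_block(freq, volume, phase, n_samples=256, sample_rate=SAMPLE_RATE):
    """Steps 2 and 3: oscillate at freq, sample into a buffer, scale by volume.

    Returns the 16-bit sample buffer and the phase to resume from, so that
    consecutive blocks join without a discontinuity.
    """
    volume = max(0.0, min(1.0, volume))  # keep samples inside the 16-bit range
    step = 2.0 * math.pi * freq / sample_rate
    buf = array("h")
    for _ in range(n_samples):
        buf.append(int(32767 * volume * math.sin(phase)))
        phase += step
    return buf, phase % (2.0 * math.pi)


def play_block(stream, midi_note, bend_semitones, volume, phase):
    """Step 4: write one rendered buffer to the audio stream."""
    freq = note_frequency(midi_note, bend_semitones)
    buf, phase = render_block(freq, volume, phase)
    stream.write(buf.tobytes())
    return phase


# Usage: an in-memory stream stands in for the real audio output.
demo_stream = io.BytesIO()
demo_phase = play_block(demo_stream, midi_note=69, bend_semitones=0.0,
                        volume=0.5, phase=0.0)
```

Carrying the phase from one block to the next is one simple way to avoid clicks at block boundaries. A customized timbre would replace `math.sin` with a different waveform function.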