- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risk at this moment is building the gloves. Originally we planned to sew the chips onto the gloves. Since none of us knows how to sew, we decided instead to hot glue the chips to Velcro tape, which is then taped to our wool gloves. The tape also acts as a buffer that prevents the pins on the chips from poking through the gloves and causing harm. As a contingency plan, we could ask Karen’s friend to teach us to sew, but it would be hard to find a time to meet given our schedules.

- Were any changes made to the existing design of the system? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

There was no change to our design.

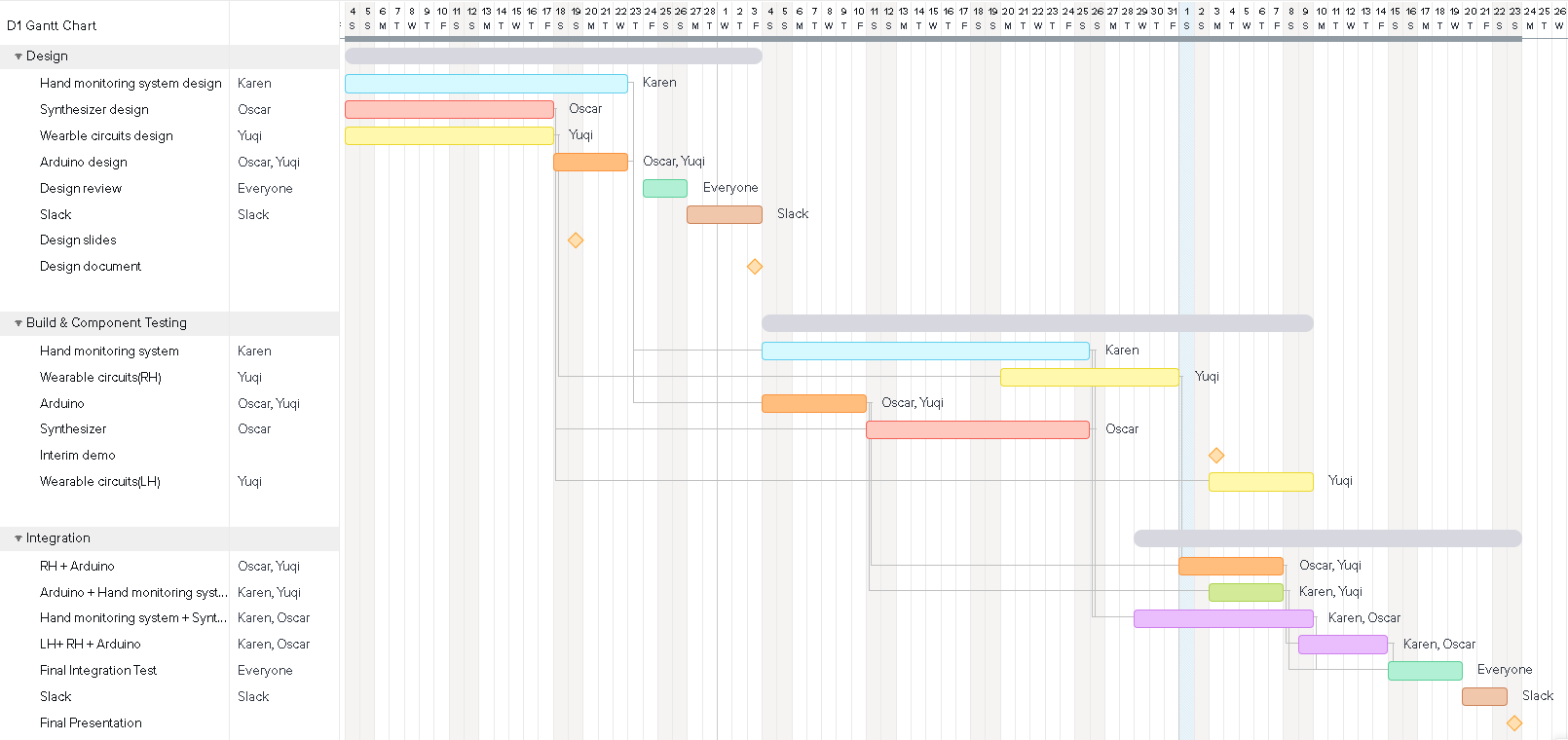

- Provide an updated schedule if changes have occurred.

The schedule has not changed.

- Component you got working.

The radio chips have been integrated with the synthesizer. The left hand’s pitch angle now controls the volume, while the right hand’s roll angle controls the pitch bend. We will post a video here once we get to the lab tomorrow.
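
To illustrate the angle-to-control mapping described above, here is a minimal sketch. It is not our actual code, and it rests on several assumptions: that the synthesizer takes MIDI-style values (0 to 127 for volume, 0 to 16383 for pitch bend with 8192 as center), that both angles arrive in degrees, and that the useful range of motion is ±90°. The function names `pitch_to_volume` and `roll_to_pitch_bend` are hypothetical.

```python
def clamp(x, lo, hi):
    """Limit x to the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def pitch_to_volume(pitch_deg, min_deg=-90.0, max_deg=90.0):
    """Map the left hand's pitch angle to a 7-bit volume value (0..127).

    The +/-90 degree range is an assumption, not a measured limit.
    """
    frac = (clamp(pitch_deg, min_deg, max_deg) - min_deg) / (max_deg - min_deg)
    return round(frac * 127)


def roll_to_pitch_bend(roll_deg, max_deg=90.0):
    """Map the right hand's roll angle to a 14-bit pitch-bend value (0..16383).

    A level hand (0 degrees) gives the center value 8192, i.e. no bend.
    """
    frac = clamp(roll_deg, -max_deg, max_deg) / max_deg  # -1.0 .. 1.0
    return clamp(8192 + round(frac * 8192), 0, 16383)


if __name__ == "__main__":
    print(pitch_to_volume(45.0))      # left hand tilted up
    print(roll_to_pitch_bend(-30.0))  # right hand rolled to one side
```

Clamping before scaling keeps an over-rotated hand from sending out-of-range values to the synthesizer.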