What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

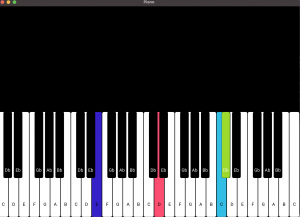

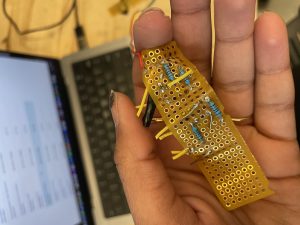

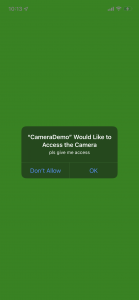

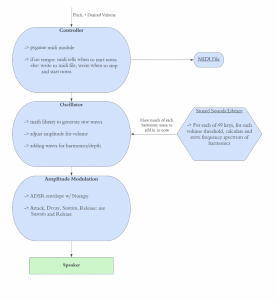

I made the initial frame of the piano app and a camera demo. In the camera demo, I made sure that the camera view can be hidden while frames are still being sent, and I got buttons attached to different actions and working. In the piano app frame, I brought up an interface with two octaves on the screen. Currently, you can play the piano with touch input; later, this touch input will be replaced with Bluetooth input that mimics the touch.
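To make the two-octave layout concrete, here is a small sketch of how the keys could be enumerated. It is written in Python for brevity (the app itself is built in Xcode) and assumes, hypothetically, that keys are identified by MIDI note numbers, that the layout starts on middle C (MIDI 60), and that the top C is left off so two octaves come to exactly 24 keys. Frequencies use standard equal temperament with A4 = 440 Hz.

```python
# Sketch: enumerate the keys of a two-octave on-screen keyboard.
# Assumptions (hypothetical, not taken from the app): keys are MIDI note
# numbers, the layout starts on a C, and the top C is excluded.

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
BLACK_KEYS = {"C#", "D#", "F#", "G#", "A#"}


def key_layout(start_midi: int = 60, octaves: int = 2) -> list[dict]:
    """Return one entry per key: MIDI number, name, colour, and frequency."""
    keys = []
    for midi in range(start_midi, start_midi + 12 * octaves):
        name = NOTE_NAMES[midi % 12]
        octave = midi // 12 - 1  # MIDI 60 -> octave 4 (C4)
        keys.append({
            "midi": midi,
            "name": f"{name}{octave}",
            "black": name in BLACK_KEYS,
            # Equal temperament with A4 (MIDI 69) tuned to 440 Hz.
            "freq_hz": 440.0 * 2 ** ((midi - 69) / 12),
        })
    return keys


layout = key_layout()
white = [k for k in layout if not k["black"]]
black = [k for k in layout if k["black"]]
print(len(layout), len(white), len(black))  # 24 14 10
print(layout[0]["name"], layout[-1]["name"])  # C4 B5
```

Shifting `start_midi` by 12 moves the whole keyboard up or down one octave, which is one simple way an octave-selection control could work. Keeping key identity separate from the touch handler would also let Bluetooth events trigger the same key entries later.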

Here is a photo of the piano frame:

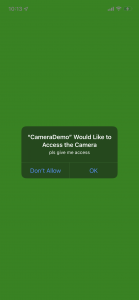

Some images of the camera demo:

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

We are on schedule according to our Gantt chart.

What deliverables do you hope to complete in the next week?

I will merge the two demos into one app in Xcode, which will take some nifty little hacking. Also, I will create a calibration screen and convert the frames into the appropriate format to forward to the Python code.
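One way to forward camera frames to the Python code is a length-prefixed message, so the receiver always knows where one frame ends and the next begins. The sketch below assumes a hypothetical format (a 12-byte big-endian header holding width, height, and payload length, followed by raw 8-bit grayscale pixels); the real format will depend on what the Python side expects. Only Python's standard `struct` module is used.

```python
import struct

# Hypothetical wire format: three big-endian uint32 values (width, height,
# payload length) followed by width * height raw 8-bit grayscale pixels.
HEADER = struct.Struct(">III")


def encode_frame(width: int, height: int, pixels: bytes) -> bytes:
    """Prefix a frame with its header so the receiver can split the stream."""
    if len(pixels) != width * height:
        raise ValueError("pixel buffer does not match width * height")
    return HEADER.pack(width, height, len(pixels)) + pixels


def decode_frames(buffer: bytearray) -> list[tuple[int, int, bytes]]:
    """Pop every complete frame off the front of buffer; keep any partial tail."""
    frames = []
    while len(buffer) >= HEADER.size:
        width, height, length = HEADER.unpack_from(buffer, 0)
        end = HEADER.size + length
        if len(buffer) < end:
            break  # the rest of this frame has not arrived yet
        frames.append((width, height, bytes(buffer[HEADER.size:end])))
        del buffer[:end]
    return frames


buf = bytearray()
msg = encode_frame(2, 2, bytes([0, 64, 128, 255]))
buf += msg[:5]             # only part of the header has arrived
print(decode_frames(buf))  # []
buf += msg[5:]
print(decode_frames(buf))  # [(2, 2, b'\x00@\x80\xff')]
```

The decoder leaves any partial frame in the buffer, which matters for stream transports where one frame can arrive split across several reads.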

Now that you are entering the verification and validation phase of your project, provide a comprehensive update on what tests you have run or are planning to run. In particular, how will you analyze the anticipated measured results to verify that your contribution to the project meets the engineering design requirements or the use case requirements?

In the app, I will make sure that 2 octaves are playable (tested by counting the maximum number of keys shown on the display). We will also need to add a user input that lets users change which octaves are displayed. Other tests are adapted from our design report:

Tests for Volume

– Measure the volume output in Xcode for each note and make sure all notes are at the same decibel level (this measurement is built into Xcode; we can also measure with an external phone’s microphone).
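A minimal sketch of how the per-note volume test could be scored, assuming each note's recording is available as float samples in [-1, 1]: compute each note's RMS level in dBFS and check that the spread between the loudest and quietest note stays within a tolerance. The 1 dB default tolerance and the 48 kHz sample rate are assumptions, and the sine waves are synthetic stand-ins for real recordings.

```python
import math

SAMPLE_RATE = 48_000  # assumed recording sample rate (Hz)


def sine(freq_hz, amplitude, seconds=1.0):
    """Synthetic tone used here in place of a real recording."""
    n = int(SAMPLE_RATE * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
            for i in range(n)]


def rms_dbfs(samples):
    """RMS level in dB relative to full scale, for samples in [-1, 1]."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def levels_match(levels_db, tolerance_db=1.0):
    """True if the loudest and quietest notes differ by at most tolerance_db."""
    return max(levels_db) - min(levels_db) <= tolerance_db


# Three synthetic "notes" with slightly different amplitudes (not measurements).
levels = [rms_dbfs(sine(f, a)) for f, a in [(262, 0.50), (330, 0.48), (392, 0.45)]]
print([round(x, 2) for x in levels])
print(levels_match(levels))  # True: the spread is about 0.92 dB
```

Because the check only compares levels against each other, the same spread test would work for readings taken with an external phone microphone, as long as the phone's position and gain stay fixed between notes.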

Tests for Multinote Volume

– Same test as above, but when playing multiple notes at a time.
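For the multi-note test, a useful reference point is that notes at different frequencies add in power, not amplitude: N equally loud notes should measure about 10·log10(N) dB louder than a single note, while the worst-case peak grows to the sum of the amplitudes, which is what risks clipping. The sketch below checks both on a synthetic three-note chord (262, 330, and 392 Hz, roughly C4, E4, and G4); the amplitudes and sample rate are assumptions.

```python
import math

SAMPLE_RATE = 48_000  # assumed sample rate (Hz)


def sine(freq_hz, amplitude, seconds=1.0):
    """Synthetic tone standing in for one played note."""
    n = int(SAMPLE_RATE * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
            for i in range(n)]


def mix(*signals):
    """Sum equal-length signals sample by sample, as a simple mixer would."""
    return [sum(frame) for frame in zip(*signals)]


def rms_dbfs(samples):
    """RMS level in dB relative to full scale."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


single = sine(262, 0.3)
chord = mix(sine(262, 0.3), sine(330, 0.3), sine(392, 0.3))

gain_db = rms_dbfs(chord) - rms_dbfs(single)
expected_db = 10 * math.log10(3)  # powers of notes at different pitches add
peak = max(abs(s) for s in chord)
print(round(gain_db, 2), round(expected_db, 2))  # 4.77 4.77
print(peak <= 1.0)  # True: the worst case is 3 * 0.3 = 0.9, so no clipping
```

If the measured chord level rises much more than this, or the peak approaches full scale, the mixer likely needs per-note gain reduction when several keys are held.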

Tests for Playback

– Because we will be playing multiple notes at the same time, we want each played note to start playing quickly enough. First, we will test our playback speed by playing at least 8 notes over 2 octaves. We will start the timer when the Arduino registers a pressed key, then measure how long it takes for the input to reach the app and for the app to issue the command to play the note through the speaker. All of this should happen in under 100 ms. If it doesn’t, we will need to change how our app’s threads handle and prioritize input, or change our baud rate.
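A sketch of how the latency measurements could be analyzed, assuming each trial yields one press-to-play time in milliseconds. Because the requirement is that every note plays in under 100 ms, the pass/fail check uses the worst case rather than the mean. The helper `uart_time_ms` estimates how much of the budget the serial link itself uses at a given baud rate, assuming standard 8N1 framing (10 bits on the wire per byte); the 3-byte message size and the sample timings are hypothetical, not measured.

```python
import math
import statistics

LATENCY_BUDGET_MS = 100.0  # every note must play within this budget


def uart_time_ms(num_bytes, baud):
    """Wire time in ms for num_bytes at 8N1 framing (10 bits per byte)."""
    return num_bytes * 10 / baud * 1000


def summarize(latencies_ms):
    """Mean, nearest-rank 95th percentile, worst case, and pass/fail (ms)."""
    if not latencies_ms:
        raise ValueError("need at least one timing")
    ordered = sorted(latencies_ms)
    p95 = ordered[math.ceil(0.95 * len(ordered)) - 1]
    return {
        "mean": statistics.mean(ordered),
        "p95": p95,
        "max": ordered[-1],
        # The requirement applies to every note, so judge on the worst case.
        "passes": ordered[-1] < LATENCY_BUDGET_MS,
    }


# Placeholder press-to-play timings for 8 notes; NOT measured data.
trial = [42.0, 55.5, 61.2, 48.9, 97.3, 50.1, 58.4, 44.7]
print(summarize(trial))

# Serial cost of a hypothetical 3-byte key message at two common baud rates.
print(uart_time_ms(3, 9600), uart_time_ms(3, 115200))
```

Even at 9600 baud, such a short message spends only a few milliseconds on the wire, so if the 100 ms budget is missed it is worth timing the other stages (Bluetooth transfer, app threading) separately before changing the baud rate.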

Tests for Time Delay

– We want our product to behave as much like a real piano as possible, so the way our notes fade out should accurately reflect how notes fade on a real piano. We need to make sure that when successive notes are played quickly, each note keeps fading while the next note is layered on top, with their sound levels added together. We will also compare our sound to a real piano, using a metronome and a timer to measure how long each note rings out on our piano versus a real piano. Our goal is for each key to fade out within 0.5 seconds, although our fade may be more linear than a real piano’s.
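The two fade shapes in question can be compared with a small sketch. `linear_gain` drops in a straight line to silence at 0.5 s; `exp_gain` is an exponential decay tuned to be 60 dB down (a gain of 0.001) at 0.5 s, which is usually closer to how a struck string dies away. `layered_gain` sums the envelopes of overlapping notes, modeling successive keys that keep fading while the next one is layered on top. All names are hypothetical and the onset times are illustrative only.

```python
import math

FADE_S = 0.5  # target: a key should be inaudible within 0.5 s


def linear_gain(t):
    """Straight-line fade from 1 at t = 0 to 0 at FADE_S (0 before onset)."""
    return max(0.0, 1.0 - t / FADE_S) if t >= 0 else 0.0


# Time constant chosen so exp(-FADE_S / TAU) == 0.001, i.e. 60 dB down.
TAU = FADE_S / math.log(1000)


def exp_gain(t):
    """Exponential decay envelope (0 before onset)."""
    return math.exp(-t / TAU) if t >= 0 else 0.0


def layered_gain(t, onsets, envelope):
    """Total gain at time t when notes start at each onset and keep fading."""
    return sum(envelope(t - t0) for t0 in onsets)


onsets = [0.0, 0.2]  # two keys pressed 200 ms apart (illustrative)
for t in (0.1, 0.3, 0.6):
    print(t,
          round(layered_gain(t, onsets, linear_gain), 3),
          round(layered_gain(t, onsets, exp_gain), 3))
```

Because overlapping envelopes add, the combined gain can exceed 1 during fast passages (the linear case reaches 1.2 at 0.3 s here), so the mixer may need some headroom.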