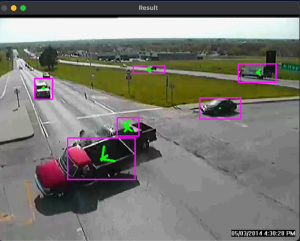

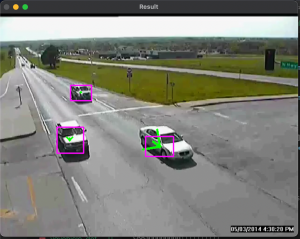

This week I worked with Arvind on further optimizing our crash detection and bounding box algorithms. Following Professor Kim’s advice, we concentrated on the crash detection part of the project. We started by drawing live overlays on the vehicles to get a better sense of how our system was interpreting the environment. For every car we picked up, we added bounding boxes (not shown in the image), tracking objects (the purple/pink boxes shown below), and vectors and center points (the green arrows shown below).

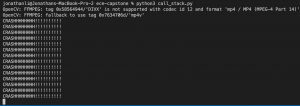
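To make the green arrows concrete, here is a minimal sketch of how a center point and a motion vector can be derived from two bounding boxes of the same car in consecutive frames. The `Box` class and the function names are made up for illustration and are not our actual code; the boxes are assumed to be in `(x, y, w, h)` pixel form with the origin at the top-left of the frame.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical bounding box: top-left corner plus size, in pixels."""
    x: int
    y: int
    w: int
    h: int


def center(box):
    """Return the (x, y) center point of a bounding box."""
    return (box.x + box.w / 2.0, box.y + box.h / 2.0)


def motion_vector(prev_box, curr_box):
    """Return (dx, dy): how far the box center moved between two frames."""
    px, py = center(prev_box)
    cx, cy = center(curr_box)
    return (cx - px, cy - py)


# The same car in two consecutive frames.
prev = Box(100, 200, 40, 20)
curr = Box(110, 198, 40, 20)

print(center(curr))               # (130.0, 208.0)
print(motion_vector(prev, curr))  # (10.0, -2.0)
```

With a drawing library such as OpenCV, the box and the arrow could then be rendered with `cv2.rectangle` and `cv2.arrowedLine`, starting the arrow at the previous center and ending it at the current one.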

With the overlays in place, we were able to fix some minor bugs in how we track objects. At one point we found that we had swapped the x and y values in one part of the tracking algorithm. The overlays also helped us notice that certain non-car objects were being tracked, which led to false positives in our crash detection.
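One common way this kind of swap creeps in (not necessarily how it happened in our code) is mixing `(x, y)` point order with row-major image indexing, where the row (y) comes first. The toy image and the `pixel_at` helper below are purely illustrative.

```python
# A tiny image with 2 rows and 3 columns, stored as a list of rows.
image = [
    [0, 0, 0],
    [0, 0, 9],  # the bright pixel sits at x=2 (column), y=1 (row)
]


def pixel_at(img, x, y):
    """Look up a pixel from an (x, y) point.

    Storage is row-major, so the row index (y) comes first.
    """
    return img[y][x]


point = (2, 1)  # (x, y)
print(pixel_at(image, *point))  # 9

# Writing img[x][y] instead would ask for row 2 of a 2-row image.
```

Keeping every point in `(x, y)` order and converting to `[y][x]` in a single helper makes a swap like this much harder to introduce.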

I remedied this by recalibrating our background detection. Essentially, we train a simple KNN algorithm that reads in frames to build a background mask. In some videos, cars stayed stopped for so long that the algorithm ended up treating the car as part of the background. When the car finally moved, the newly exposed road was tracked instead. To fix this, we used more frames to build the background mask and tried to use frames with no moving objects.
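The idea of building the background only from frames with little motion can be sketched in plain Python. Note that this swaps in a simpler technique, a per-pixel median over hand-selected frames, rather than the KNN model we actually use; the function names and the `max_diff` threshold are assumptions for illustration. Frames are assumed to be same-sized grayscale images stored as lists of rows.

```python
from statistics import median


def frame_diff(a, b):
    """Mean absolute pixel difference between two same-sized frames."""
    total = sum(abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return total / (len(a) * len(a[0]))


def static_frames(frames, max_diff=1.0):
    """Keep frames that barely differ from the frame before them."""
    return [
        curr
        for prev, curr in zip(frames, frames[1:])
        if frame_diff(prev, curr) <= max_diff
    ]


def median_background(frames):
    """Estimate the background as the per-pixel median of the given frames."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [
        [median(f[r][c] for f in frames) for c in range(cols)]
        for r in range(rows)
    ]


road = [[10, 10, 10, 10]]
car_left = [[200, 10, 10, 10]]
car_right = [[10, 200, 10, 10]]

# An empty road, a car passing through, then an empty road again.
frames = [road, road, car_left, car_right, road, road]

quiet = static_frames(frames)
print(len(quiet))                # 2
print(median_background(quiet))  # [[10.0, 10.0, 10.0, 10.0]]
```

A car that is stopped still looks static to a filter like this, which is why using more frames matters: the longer the window, the more likely the empty road outweighs the stopped car. If the KNN model comes from OpenCV's `cv2.createBackgroundSubtractorKNN`, the `history` parameter is what controls how many frames feed the model.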

Finally, we worked on further refining the crash detection modules. Here is a set of screenshots from our example video that we have been using in our status posts.