What did you personally accomplish this week on the project?

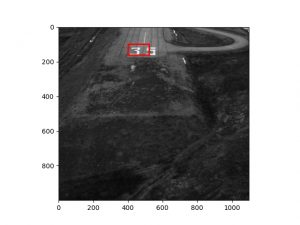

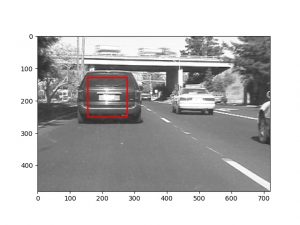

This week I continued integrating the tracker via library calls to support the camera's zoom functionality. I've also been preparing for testing by writing code that accepts different video formats and reads each frame for processing, which involved reading up on the OpenCV and scikit-video (skvideo) documentation.
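A minimal sketch of the frame-reading step, assuming OpenCV's `cv2.VideoCapture` is the reader (it delegates container and codec handling to its backend, e.g. FFmpeg, so the same loop works for several video formats). The names `iter_frames` and `open_video` are hypothetical, not our project code. `iter_frames` only relies on the `read()`/`release()` interface, so it can be tested without OpenCV installed. Note that OpenCV returns frames in BGR channel order.

```python
from typing import Any, Iterator


def iter_frames(capture: Any) -> Iterator[Any]:
    """Yield frames from any object exposing OpenCV-style read() and release().

    cv2.VideoCapture.read() returns (ok, frame); ok is False once the
    stream is exhausted or a frame cannot be decoded.
    """
    try:
        while True:
            ok, frame = capture.read()
            if not ok:
                break
            yield frame
    finally:
        # Runs when the generator finishes or is closed, so the file/device is released.
        capture.release()


def open_video(path: str) -> Any:
    """Open a video file with OpenCV (hypothetical helper)."""
    import cv2  # imported lazily so iter_frames works without OpenCV installed

    capture = cv2.VideoCapture(path)
    if not capture.isOpened():
        raise IOError(f"could not open video: {path}")
    return capture
```

Usage would look like `for frame in iter_frames(open_video("clip.mp4")): ...`, with the per-frame processing inside the loop. scikit-video's `skvideo.io.vreader` offers a similar frame generator if OpenCV's decoding proves limiting.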

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Personally, I am on schedule, but as a team we are still behind because we have yet to get the camera working. I think we should talk more with one of our professor's colleagues, who has worked with streaming before and could help us configure the camera.

What deliverables do you hope to complete in the next week?

Next week we will have a fully functional robot 🤖. What remains is to confirm that the streaming is adequate and to test how well real-time detection performs on the Jetson Nano.
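One simple way to put a number on real-time detection performance is to time the detector over a stream of frames and report frames per second. This is a sketch under assumptions: `detect` is a hypothetical callable standing in for whatever detection model runs on the Jetson Nano, and `measure_fps` is an illustrative helper, not existing project code.

```python
import time
from typing import Any, Callable, Iterable


def measure_fps(frames: Iterable[Any], detect: Callable[[Any], Any]) -> float:
    """Run detect on every frame and return the achieved frames per second."""
    count = 0
    start = time.perf_counter()
    for frame in frames:
        detect(frame)  # hypothetical detector; its result is ignored here
        count += 1
    elapsed = time.perf_counter() - start
    if count == 0:
        return 0.0
    # Guard against a zero-length interval on very fast dummy detectors.
    return count / max(elapsed, 1e-9)
```

The measured rate can then be compared against the camera's frame rate: if detection runs slower than frames arrive, frames will need to be skipped or the model made lighter.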