Find our demo video here!

Team’s Status Report for April 30

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

Our most significant risk is not putting adequate time into the presentation of our project. It is easy to spend all our time making marginal improvements to the device, but at this point our system is mostly finished. The poster, demo, and final report each require a substantial amount of work, so it is important that we begin working on them in parallel with our current testing and adjustment.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

After the final presentation, Professor Savvides advised us that one camera would be sufficient for our proof of concept and that we shouldn’t spend resources adding a second camera. This change will save us time and money in the final stretch.

Provide an updated schedule if changes have occurred.

- Sunday-Tuesday: Poster + Test

- Wednesday-Saturday: Report + Demo

Justin’s Status Report for April 30

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

On Sunday, I spent most of the day preparing for the final presentation. Besides working on the presentation itself, I finished the neural network for photo editing, which involved creating the final photo-editing dataset, training the model, and testing it with people outside the project. During the week, I attended the final presentations in class. I also worked on resolving an installation issue on the Jetson Nano, where our installed versions of PyTorch and CUDA were incompatible.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am on schedule with the most recent team schedule.

What deliverables do you hope to complete in the next week?

Demo : )

Fernando’s Status Report for April 30

What did you personally accomplish this week on the project?

This week I continued integrating the tracker through library calls for the camera’s zoom functionality. I’ve also been preparing for testing by writing code that takes videos in different formats and reads each frame for processing, which involved reading up on the OpenCV and skvideo documentation.
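Reading frames does not strictly require skvideo: OpenCV’s `cv2.VideoCapture` decodes video files (which containers and codecs work depends on the FFmpeg or GStreamer backend OpenCV was built with) and returns each frame as a NumPy array. A minimal sketch, assuming the tracker wants a grayscale `(frames, height, width)` array saved with `np.save`; that layout and the function names are our assumptions, not our actual code:

```python
import numpy as np


def frames_from_capture(cap, max_frames=None):
    """Yield frames from any object with an OpenCV-style read() -> (ok, frame)."""
    count = 0
    while max_frames is None or count < max_frames:
        ok, frame = cap.read()
        if not ok:  # end of stream or a frame that failed to decode
            break
        yield frame
        count += 1


def video_to_npy(video_path, out_path, max_frames=None):
    """Decode a video with OpenCV and save it as a (frames, height, width) uint8 array."""
    import cv2  # imported here so frames_from_capture works without OpenCV installed

    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise IOError(f"could not open {video_path}")
    try:
        # OpenCV decodes to BGR; convert each frame to grayscale for the tracker
        frames = [cv2.cvtColor(f, cv2.COLOR_BGR2GRAY)
                  for f in frames_from_capture(cap, max_frames)]
    finally:
        cap.release()
    if not frames:
        raise ValueError(f"no frames decoded from {video_path}")
    array = np.stack(frames)
    np.save(out_path, array)
    return array.shape
```

Usage would look like `video_to_npy("deer_5m.mp4", "deer_5m.npy")` (hypothetical file names). For long clips, the saved file can be reopened with `np.load(path, mmap_mode="r")` so the whole video does not have to sit in memory.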

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am personally on schedule, but the team is still behind because we have yet to get the camera working. I think we should talk further with one of our professor’s colleagues, who has worked with streaming before, to help us configure the camera.

What deliverables do you hope to complete in the next week?

Next week we will have a fully functional robot 🤖. What remains is to confirm that the streaming is adequate and to test how real-time detection performs on the Jetson Nano.
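To make “what real-time detection is like” concrete, one option is to time the detector over a batch of frames and report frames per second. A minimal sketch, assuming a hypothetical `detect(frame)` callable that wraps the model’s prediction call; `fake_detect` is a stand-in so the example runs anywhere:

```python
import time
from typing import Any, Callable, Iterable


def measure_fps(detect: Callable[[Any], Any], frames: Iterable[Any], warmup: int = 2) -> float:
    """Average frames per second of `detect` over `frames`, excluding warm-up frames.

    The first `warmup` calls run but are not timed, because the first inferences
    on a GPU are typically slower (context creation, memory allocation).
    """
    frames = list(frames)
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")
    for frame in frames[:warmup]:
        detect(frame)
    timed = frames[warmup:]
    start = time.perf_counter()
    for frame in timed:
        detect(frame)
    elapsed = time.perf_counter() - start
    return len(timed) / elapsed if elapsed > 0 else float("inf")


def fake_detect(frame: Any) -> None:
    """Hypothetical stand-in for the detector: takes about 10 ms per frame."""
    time.sleep(0.01)


if __name__ == "__main__":
    print(f"{measure_fps(fake_detect, range(22)):.1f} FPS")
```

If the model runs on CUDA, GPU work is asynchronous, so calling `torch.cuda.synchronize()` inside `detect` before it returns keeps the timing honest.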

Sid’s Status Report for April 30

What did you personally accomplish this week on the project?

This week I focused most of my work on finishing the integration of the system and beginning the procedures needed for testing.

On the integration side, we are debugging the PyTorch and CUDA versions on the Jetson, since a compatibility issue arose when we ran the script that integrates detection with the camera’s simple search. Justin is currently looking into this, and as soon as it is resolved we will move on to testing the system with the one-camera approach we discussed in our last meeting with Professor Savvides.
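A mismatch like this can be narrowed down by asking PyTorch which CUDA version it was built for and whether it can actually use the GPU. On the Jetson Nano, PyTorch is generally installed from NVIDIA-provided wheels built for a specific JetPack release, so these values are worth checking first. A minimal diagnostic sketch (not the script we ran); the function and key names are our own:

```python
def torch_cuda_report() -> dict:
    """Collect the version facts needed to diagnose a PyTorch/CUDA mismatch."""
    try:
        import torch
    except ImportError:
        return {"torch": None, "cuda_build": None, "cuda_available": False, "device": None}
    available = torch.cuda.is_available()
    return {
        "torch": torch.__version__,
        # CUDA version this PyTorch build was compiled against (None for CPU-only builds)
        "cuda_build": torch.version.cuda,
        # False on CPU-only builds or when the installed driver cannot run this build
        "cuda_available": available,
        "device": torch.cuda.get_device_name(0) if available else None,
    }


if __name__ == "__main__":
    for key, value in torch_cuda_report().items():
        print(f"{key}: {value}")
```

Running this on both the training desktop and the Jetson makes it easy to compare the two environments side by side.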

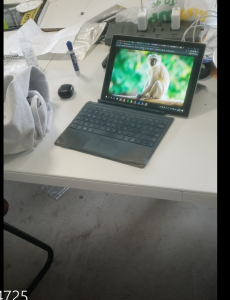

On the testing side, I went through the WCS Camera Traps Dataset again, this time looking for well-photographed images that were not in our dataset and that showed different animals in a variety of orientations.

Some of these are attached below:

Once I finish printing the pictures, I can make the cut-outs and find a way to mount them so we can place them around the setup for testing. To test different lighting conditions, I found a location where we can control the light exposure, which lets us test at different distances and under lighting conditions that simulate the real environment.
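Distances, lighting conditions, and animal cut-outs multiply quickly, so it can help to enumerate every combination up front and record a result for each. A sketch with hypothetical values; the distances, lighting labels, and animal names are placeholders, not our actual test plan:

```python
from itertools import product

# Hypothetical test parameters: substitute the distances the test space allows,
# the lighting settings we can reproduce, and the printed cut-outs we actually have.
DISTANCES_M = [1, 3, 5]
LIGHTING = ["bright", "dim", "backlit"]
ANIMALS = ["deer", "fox", "raccoon"]


def build_test_matrix(distances, lighting, animals):
    """Return one test case per (distance, lighting, animal) combination."""
    return [
        # "detected" is left as None and filled in when the case is run
        {"distance_m": d, "lighting": light, "animal": animal, "detected": None}
        for d, light, animal in product(distances, lighting, animals)
    ]


if __name__ == "__main__":
    cases = build_test_matrix(DISTANCES_M, LIGHTING, ANIMALS)
    print(len(cases), "test cases")
```

Keeping the matrix explicit also makes it obvious which conditions were skipped if time runs short.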

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

My progress is a little behind because I was unwell last week. Even so, I made progress on integration and testing as described above. To get back on schedule, I will focus all my effort on finishing the project over the next few days. Once we finish debugging, I can spend time testing and refining the system through repeated rounds of testing and tweaking.

What deliverables do you hope to complete in the next week?

Finish testing and refining the system as much as we can so that the demo runs smoothly.

In parallel, I will finish the remaining deliverables, i.e., the poster, report, and video.

Fernando’s Status Report for April 23

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

This week we collectively focused on integrating the system and on our final presentation. Some of the larger tasks involved setting up the SSH server on the Jetson Nano, configuring the requirements for PyTorch on the Jetson Nano, and running some rough tests of the KLT (Kanade-Lucas-Tomasi) tracker on images of animals.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

My progress is behind: I would have liked to test the tracker on more videos of animals at different distances from the camera, but I could not get the ffmpeg dependencies that skvideo needs working correctly. With skvideo, I could convert regular MP4 videos into .npy arrays usable by our KLT. Catching up requires getting skvideo to work, recording videos of animals at different distances, and making sure the tracker runs at an appropriate speed. If that fails, our plan B is to use the base KLT offered by OpenCV. Perhaps there is another way of converting regular videos to .npy without skvideo?
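For plan B, OpenCV’s built-in KLT pipeline pairs Shi-Tomasi corners (`cv2.goodFeaturesToTrack`) with pyramidal Lucas-Kanade optical flow (`cv2.calcOpticalFlowPyrLK`). A minimal sketch, assuming the tracker state is a single `(x, y, w, h)` box on grayscale frames and that the box moves by the median displacement of the tracked corners; `shift_box` and `klt_step` are hypothetical names, and this is not our own KLT implementation:

```python
import numpy as np


def shift_box(box, old_pts, new_pts, status):
    """Move an (x, y, w, h) box by the median displacement of successfully tracked points."""
    ok = np.asarray(status).reshape(-1).astype(bool)
    if not ok.any():
        return box  # every point was lost: the caller should fall back to detection
    disp = np.asarray(new_pts).reshape(-1, 2)[ok] - np.asarray(old_pts).reshape(-1, 2)[ok]
    dx, dy = np.median(disp, axis=0)  # the median is robust to a few bad tracks
    x, y, w, h = box
    return (x + float(dx), y + float(dy), w, h)


def klt_step(prev_gray, next_gray, box):
    """One tracking step with OpenCV's Shi-Tomasi corners and pyramidal Lucas-Kanade."""
    import cv2  # imported here so shift_box can be used without OpenCV

    x, y, w, h = (max(0, int(v)) for v in box)
    mask = np.zeros_like(prev_gray)  # only pick corners inside the current box
    mask[y:y + h, x:x + w] = 255
    p0 = cv2.goodFeaturesToTrack(prev_gray, maxCorners=100, qualityLevel=0.01,
                                 minDistance=5, mask=mask)
    if p0 is None:
        return box
    p1, status, _err = cv2.calcOpticalFlowPyrLK(prev_gray, next_gray, p0, None)
    return shift_box(box, p0, p1, status)
```

Calling `klt_step` on consecutive grayscale frames (for example, slices of a saved .npy array) gives an updated box per frame; re-detecting the corners every step keeps the sketch simple at some cost in speed.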

What deliverables do you hope to complete in the next week?

In the next week, I’d like to have a fully integrated tracker.

Sid’s Status Report for April 23

What did you personally accomplish this week on the project?

Most of my work this week went into finishing the training of the detection model and verifying that it had been trained correctly.

I faced many problems with this process. First, our Colab setup did not work in time because of high latency when scanning the dataset files from Google Drive. I then decided to train the model on my teammate’s old desktop, since it had a GPU, and managed to transfer all the files to it using the public sharing URL provided by Google Drive.

Setting up the environment for training the neural network on the desktop took much longer than I had expected. We ran into many problems, but with my team’s help I was able to modify the Python and CUDA versions on the desktop so they were compatible with each other and with YOLOv5. Based on those changes, we also had to change the CUDA and PyTorch versions running on the Jetson Nano.

While the model was training, we worked on the camera search algorithm on the Jetson. Once training finished, we obtained recall and accuracy figures that we were happy with:

- Recall: ~93%

- Accuracy: ~92.8%

After adding code to the scripts on the Jetson, we integrated the detection model into the search algorithm. We move the best trained weights onto the Jetson and call the predict function on every frame the camera captures until the detection model tells us to transfer control to the tracker.
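The handoff described above is essentially a two-state loop: run detection on every frame while searching, and switch to tracking once the detector is confident. A minimal sketch, assuming hypothetical `detect`, `track`, and `search_step` callables and a confidence threshold of 0.5 (an arbitrary placeholder); returning to search when the tracker loses the target is also our assumption:

```python
from enum import Enum, auto


class Mode(Enum):
    SEARCH = auto()
    TRACK = auto()


def control_loop(frames, detect, track, search_step, conf_threshold=0.5):
    """Run detection on every frame while searching; hand off to the tracker on a hit.

    detect(frame) -> (box, confidence) or None     (hypothetical wrapper around the model)
    track(frame, box) -> new box, or None if lost  (hypothetical tracker wrapper)
    search_step() -> None                          (advances the pan-tilt search pattern)
    Returns the mode used for each frame.
    """
    mode, box, history = Mode.SEARCH, None, []
    for frame in frames:
        if mode is Mode.SEARCH:
            hit = detect(frame)
            if hit is not None and hit[1] >= conf_threshold:
                box, mode = hit[0], Mode.TRACK  # transfer control to the tracker
            else:
                search_step()
        else:
            box = track(frame, box)
            if box is None:  # target lost: resume searching
                mode = Mode.SEARCH
        history.append(mode)
    return history
```

Keeping detection, tracking, and motor movement behind plain callables lets each piece be tested on its own before running the full loop on the Jetson.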

We planned out the testing process and I picked images we are going to print to test the project in the coming days.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

My progress is slightly behind because of the problems we faced while training the detection model, including errors that only showed up hours into running the training script.

To make up for this, my team helped me implement a basic search algorithm for the camera for now, and we plan to accelerate testing, which should put us back on track.

What deliverables do you hope to complete in the next week?

Test results for the detection and integrated system.

Team’s Status Report for April 23

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

We are finishing integration this weekend and working through testing. At this point, our biggest risk is not being able to upgrade our system to two cameras. Because the cameras are not in the same location, additional alignment code will be necessary, which may be difficult to get working. As a contingency plan, we can stack the two cameras together: one permanently zoomed out (for tracking) and the other zooming in (for photographing). This approach is simpler and would not be much less effective.
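Stacking the cameras is simpler largely because the alignment reduces to a scale about the image center. A minimal sketch under idealized, hypothetical assumptions (same resolution, centered principal points, parallel optical axes, negligible parallax for distant animals), where `zoom` is the ratio of the zoomed camera’s focal length to the wide camera’s:

```python
def wide_to_zoom(u, v, zoom, width, height):
    """Map pixel (u, v) in the wide camera to the matching pixel in the zoomed camera.

    Assumes (hypothetically) that both cameras have the same resolution, principal
    points at the image center, parallel optical axes, and a baseline small enough
    that parallax is negligible for distant animals.
    """
    cx, cy = width / 2, height / 2
    return cx + zoom * (u - cx), cy + zoom * (v - cy)


def visible_in_zoom(u, v, zoom, width, height):
    """True if a point seen by the wide camera falls inside the zoomed frame."""
    x, y = wide_to_zoom(u, v, zoom, width, height)
    return 0 <= x < width and 0 <= y < height
```

For cameras mounted apart, parallax makes the offset between the two images depend on the subject’s distance, which is the alignment problem this contingency avoids.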

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

After speaking with one of Professor Savvides’ Ph.D. students, we realized that using two MIPI-CSI cameras on our version of the Jetson Nano is not possible, even with our multi-camera adapter, because one camera’s stream must be terminated before the other camera can be accessed. We found that the best workaround is to buy a USB IMX219 camera. This is the same model we currently have, so our code will remain compatible, but the USB camera can be streamed simultaneously with the MIPI-CSI camera. The camera will fit on our current pan-tilt system and costs only $35 on Amazon. We will cover this expense ourselves and can get two-day shipping.
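On a Jetson, a MIPI-CSI camera is usually read through a GStreamer pipeline that starts at `nvarguscamerasrc`, while a USB camera appears as an ordinary V4L2 device, which is consistent with the two being usable side by side. A minimal sketch following the commonly used JetsonHacks-style pipeline; the resolution, frame rate, and USB device index 1 are assumptions, and OpenCV must be built with GStreamer support (the OpenCV shipped with JetPack usually is):

```python
def csi_pipeline(sensor_id=0, width=1920, height=1080, fps=30, flip=0):
    """GStreamer pipeline string for a MIPI-CSI camera on a Jetson (nvarguscamerasrc)."""
    return (
        f"nvarguscamerasrc sensor-id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width={width}, height={height}, framerate={fps}/1 ! "
        f"nvvidconv flip-method={flip} ! video/x-raw, format=BGRx ! "
        "videoconvert ! video/x-raw, format=BGR ! appsink"
    )


def open_csi_and_usb(usb_index=1):
    """Open the CSI camera through GStreamer and the USB camera through V4L2."""
    import cv2  # imported here so csi_pipeline can be inspected without OpenCV

    csi = cv2.VideoCapture(csi_pipeline(), cv2.CAP_GSTREAMER)
    usb = cv2.VideoCapture(usb_index, cv2.CAP_V4L2)  # hypothetical /dev/video1
    if not (csi.isOpened() and usb.isOpened()):
        csi.release()
        usb.release()
        raise IOError("could not open both cameras")
    return csi, usb
```

Both handles are then read with the usual `cap.read()` loop, one per camera.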

Provide an updated schedule if changes have occurred.

We will add our second camera this week after our initial round of testing, using the time previously allocated to ‘modifications’.

Justin’s Status Report for April 23

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

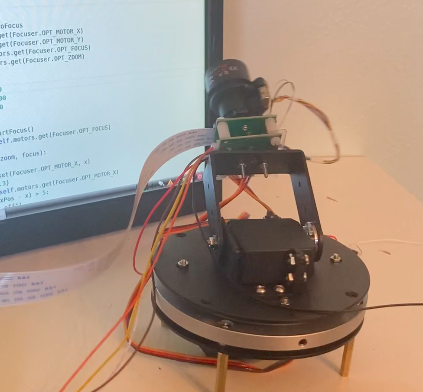

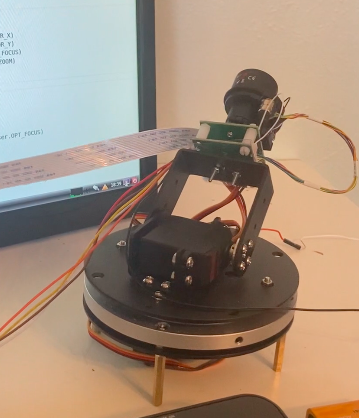

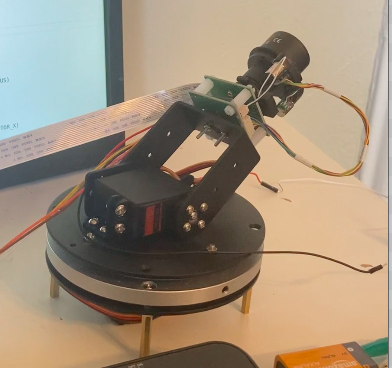

With deadlines approaching, I contributed to several areas of the project. The most important was implementing the search algorithm on the robotic system.
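One simple search pattern is a serpentine raster over pan and tilt angles, running the detector at each position. A sketch (not necessarily the pattern we implemented) with hypothetical ranges and step sizes in whole degrees:

```python
def raster_search(pan_range, tilt_range, pan_step, tilt_step):
    """Yield (pan, tilt) positions in a serpentine (boustrophedon) sweep.

    Ranges are inclusive (start, stop) pairs in whole degrees; alternating rows are
    reversed so the mount never has to swing all the way back between rows.
    """
    pans = list(range(pan_range[0], pan_range[1] + 1, pan_step))
    tilts = range(tilt_range[0], tilt_range[1] + 1, tilt_step)
    for row, tilt in enumerate(tilts):
        for pan in (pans if row % 2 == 0 else reversed(pans)):
            yield pan, tilt
```

For example, `raster_search((-60, 60), (0, 20), 15, 10)` would sweep nine pan positions on each of three tilt rows; wrapping it in `itertools.cycle` repeats the sweep until something is detected.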

The second thing I worked on was helping troubleshoot the training of our detection CNN. We tried training the model on a laptop and on Google Colab, but neither approach was feasible, so we used my desktop instead. This turned out to be more difficult than anticipated because our CUDA and PyTorch versions were incompatible. Afterward, I worked with my group members to install PyTorch on the Jetson Nano so it could run the detection model.

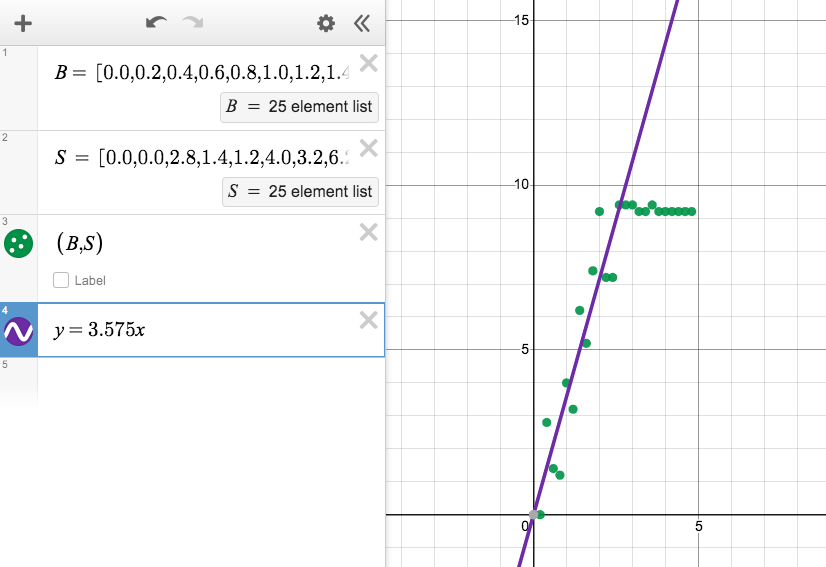

Finally, I continued to make progress on the photo editing algorithm. Our goal is a CNN that outputs how much of each of several image-editing algorithms to apply. To generate training data, the algorithms need to be reversible so that we can determine the target edit for each training image. Some inverses (e.g., the tint inverse) could be derived mathematically. Others (like sharpening and blurring) required experimentally finding a relationship that minimized reconstruction error.
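The data-generation idea can be sketched as: start from a good image, apply an edit, and use the edit that undoes it as the training label. This sketch assumes a hypothetical tint defined as a gain on the green channel (whose inverse can be derived in closed form) and a [1, 2, 1]/4 blur undone by an unsharp mask whose amount is found by grid search over reconstruction error; these stand-ins are not necessarily our actual algorithms:

```python
import numpy as np


def tint(img, t):
    """Hypothetical tint edit: scale the green channel by (1 + t), with t > -1."""
    out = img.astype(np.float64)  # astype returns a copy, so img is untouched
    out[..., 1] *= 1.0 + t
    return out


def tint_inverse_amount(t):
    """Derived inverse: tint(tint(img, t), s) == img when (1 + s) = 1 / (1 + t)."""
    return 1.0 / (1.0 + t) - 1.0


def blur(img):
    """Separable [1, 2, 1] / 4 blur with wrap-around edges (non-negative frequency response)."""
    out = img.astype(np.float64)
    for axis in (0, 1):
        out = (np.roll(out, 1, axis) + 2.0 * out + np.roll(out, -1, axis)) / 4.0
    return out


def sharpen(img, amount):
    """Unsharp mask: add `amount` times the high-pass detail (img - blur(img)) back."""
    return img + amount * (img - blur(img))


def best_sharpen_amount(clean, amounts):
    """Experimentally pick the sharpen amount that best undoes one blur pass (lowest MSE)."""
    blurred = blur(clean)
    errors = [float(np.mean((sharpen(blurred, a) - clean) ** 2)) for a in amounts]
    best = int(np.argmin(errors))
    return float(amounts[best]), errors


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = rng.random((32, 32, 3))  # stand-in for a well-edited photo
    t = 0.2
    degraded = tint(clean, t)  # network input
    target = tint_inverse_amount(t)  # training label: the edit that undoes it
    print(np.allclose(tint(degraded, target), clean))
    amount, errors = best_sharpen_amount(clean, np.linspace(0.0, 3.0, 31))
    print(f"best sharpen amount ~ {amount:.1f}")
```

With a real photo dataset, the same search would be repeated over a range of blur strengths to fit the blur-to-sharpen relationship rather than a single amount.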

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am on schedule with the most recent team schedule.

What deliverables do you hope to complete in the next week?

Complete test results and modifications for the system.

Fernando’s Status Report for April 16

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

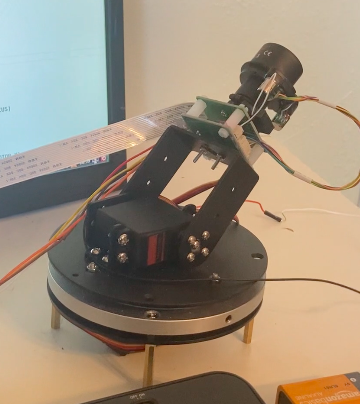

This week we made further progress on integrating the Arducam with the tracker. The tracker now sends incremental updates of the target’s bounding box, which are translated into positive or negative motor steps in the x and y directions of the camera’s pan-tilt mechanism.
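The bounding-box-to-steps translation can be sketched as proportional control on the offset between the box center and the frame center. A minimal sketch; the gain `steps_per_px`, the deadband, the per-update step cap, and the sign convention are all hypothetical values to tune on the real mount:

```python
def bbox_to_steps(box, frame_w, frame_h, steps_per_px=0.05, deadband_px=20, max_steps=50):
    """Convert a bounding box (x, y, w, h) into signed (pan, tilt) motor steps.

    The steps move the camera so the box center approaches the frame center.
    Sign convention (assumed): +pan turns right, +tilt turns down, matching image axes.
    """
    x, y, w, h = box
    err_x = (x + w / 2) - frame_w / 2  # positive: target is right of center
    err_y = (y + h / 2) - frame_h / 2  # positive: target is below center

    def to_steps(err):
        if abs(err) <= deadband_px:  # close enough: avoid jittering the motors
            return 0
        steps = round(err * steps_per_px)  # proportional control
        return max(-max_steps, min(max_steps, steps))  # cap movement per update

    return to_steps(err_x), to_steps(err_y)
```

The deadband keeps the mount still when the animal is already near the center, and the cap prevents a single noisy box from swinging the camera too far.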

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Our progress is currently on schedule.

What deliverables do you hope to complete in the next week?

In the next week, we hope to have a more thoroughly tested tracking camera that follows its target smoothly.