What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

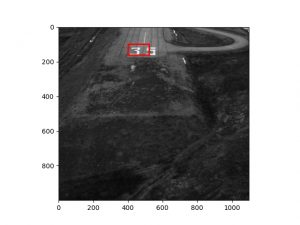

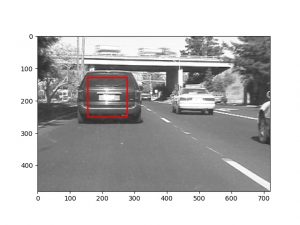

The risk we presented last week, failing testing in multiple areas, remains our largest risk. We also found that the integration and setup needed for testing require more work than expected. It is vital that we integrate detection and tracking into the robot this week, and we also need to finalize the testing plans and materials this week. This way, we can begin testing early enough to make adjustments before the end of the project. We have enough slack in the schedule to adjust if we miss this goal, but we expect it to be achievable.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc.)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

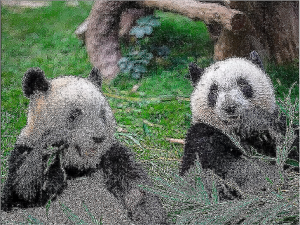

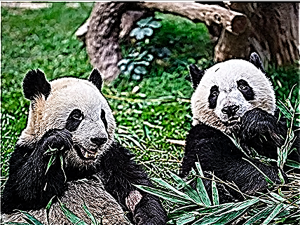

No changes were made to the system. However, we realized that we will need to print color images for testing. Ideally, some of these images would be larger than standard paper size so that they can match the size of certain animals. We can cover some of these costs with our printing quota. For the larger printouts, we need to explore on-campus options, but we may need to use personal funds for off-campus printing services. We will provide a definitive answer once we finalize the testing plans.

Provide an updated schedule if changes have occurred.

No schedule updates.