What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

One of our largest integration problems has been streaming the camera’s video in Python 3. The demo code and library for the pan-tilt-zoom (PTZ) camera were written for Python 2 and throw an error when run in Python 3. This is a problem because all of the code we have written is in Python 3. I had previously tried to fix the code by changing the streaming method to write to a pipe or to use ffmpeg. After working through the errors in these methods, I realized that the problem was actually with the threading library. I had been misled by a generic error message that appeared directly after a nonfatal GStreamer error, which I had assumed was the cause. I have spent the latter half of the week rewriting the JetsonCamera.py file and its Camera class to avoid using this library while keeping the same interface.

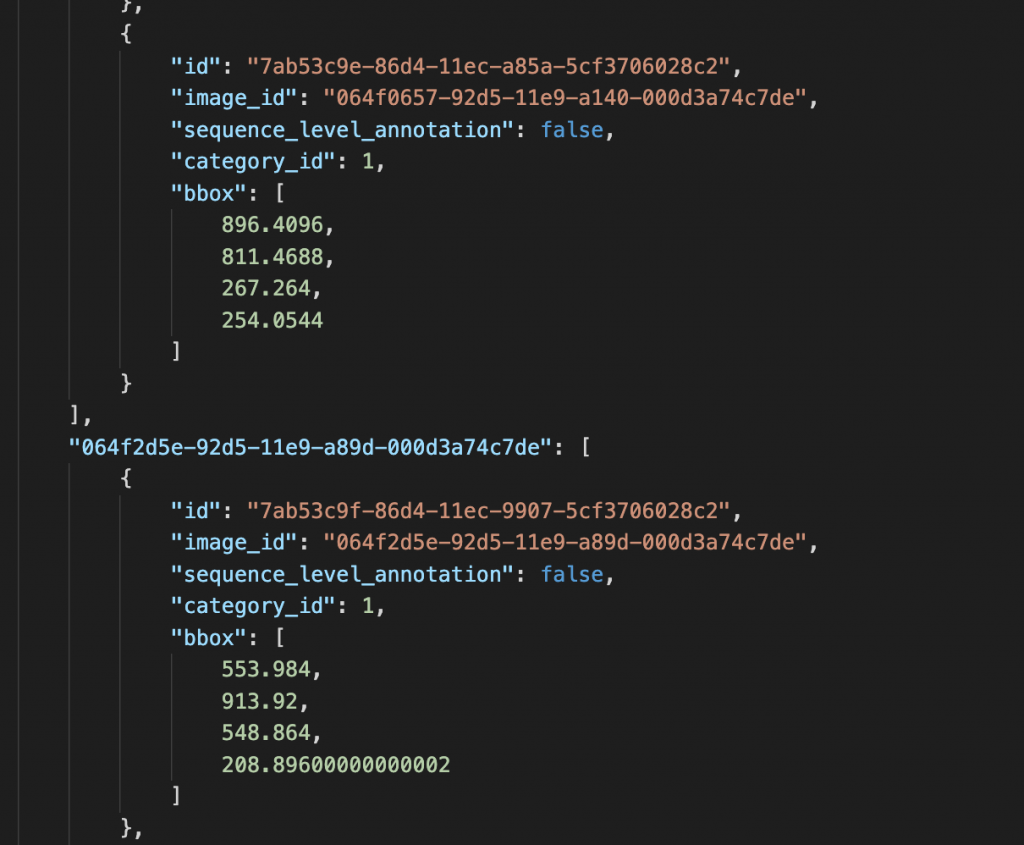

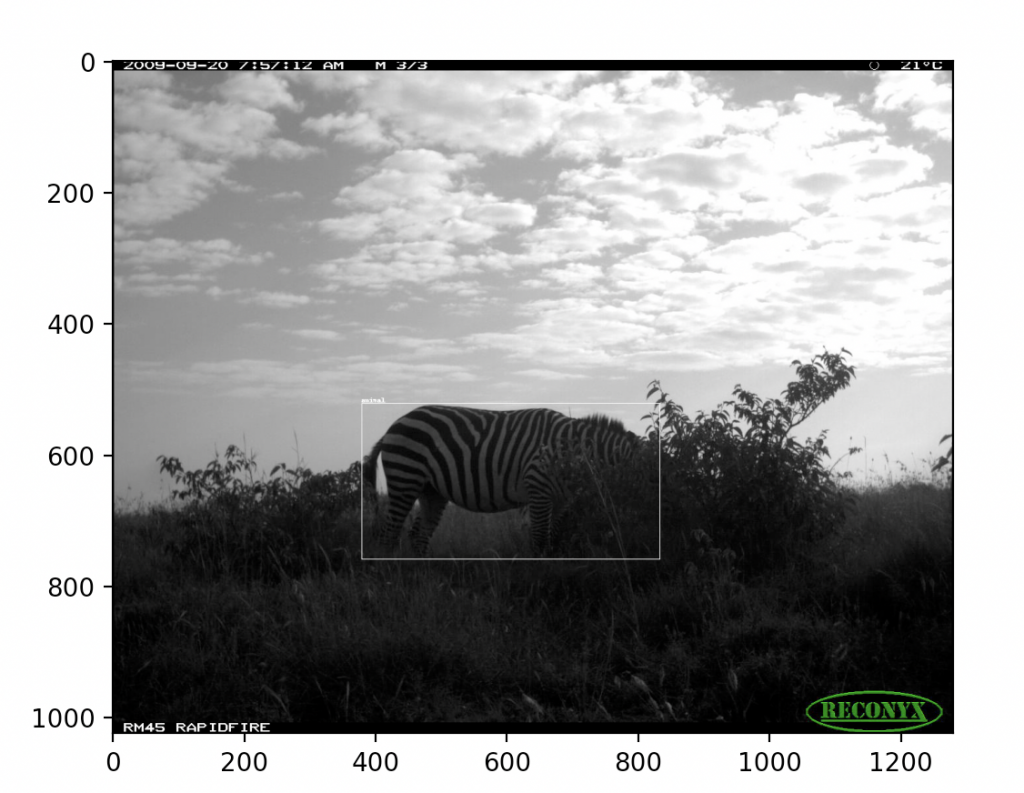
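To illustrate the idea of a camera class that does not rely on a background thread, here is a minimal sketch. It is not the actual JetsonCamera.py code: the method names `get_frame` and `close`, and the `FakeCapture` stand-in, are hypothetical and chosen only for illustration. The sketch assumes the underlying capture object exposes `read()` returning an `(ok, frame)` pair and `release()`, which is the shape of OpenCV's `cv2.VideoCapture`.

```python
class Camera:
    """Reads frames on demand in the caller's thread (no background thread).

    `capture` is any object with read() -> (ok, frame) and release().
    With OpenCV this could be cv2.VideoCapture(pipeline, cv2.CAP_GSTREAMER);
    that choice is an assumption, not taken from the original code.
    """

    def __init__(self, capture):
        self._capture = capture
        self._open = True

    def get_frame(self):
        """Return the next frame, or None if the read failed."""
        if not self._open:
            raise RuntimeError("camera is closed")
        ok, frame = self._capture.read()
        return frame if ok else None

    def close(self):
        """Release the capture device; safe to call more than once."""
        if self._open:
            self._capture.release()
            self._open = False

    # Context-manager support so the device is released even on errors.
    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        self.close()


class FakeCapture:
    """Hypothetical stand-in for a real capture device, used for testing."""

    def __init__(self, frames):
        self._frames = list(frames)
        self.released = False

    def read(self):
        if self._frames:
            return True, self._frames.pop(0)
        return False, None

    def release(self):
        self.released = True


if __name__ == "__main__":
    with Camera(FakeCapture(["frame-0", "frame-1"])) as cam:
        print(cam.get_frame())
        print(cam.get_frame())
```

Because each frame is read synchronously when the caller asks for it, the caller controls the frame rate and no thread cleanup is needed. The tradeoff is that a slow read blocks the caller, which a threaded design would hide.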

Whenever I was away from my house and did not have access to the Jetson, I continued working on the photo editing feature. I am working on training a photo editing CNN to automatically apply different image editing algorithms to photos. I plan to use the Kaggle Animal Image Dataset because it covers a wide variety of animals and contains only well-edited photos. I am still formatting the data, but I do not expect this task to take long. This part is not a priority, as we identified it as a stretch goal.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am on schedule according to the most recent team schedule. I plan to finish integration tomorrow so that I can present it at our meeting on Monday.

What deliverables do you hope to complete in the next week?

A full robotic system that we can test with.